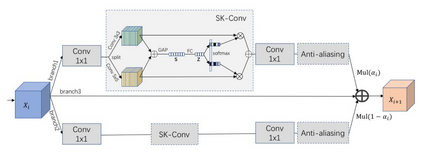

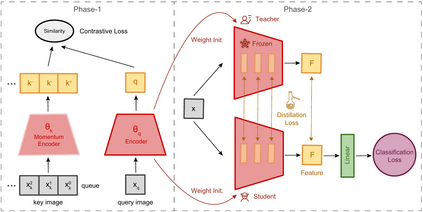

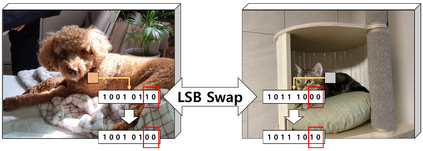

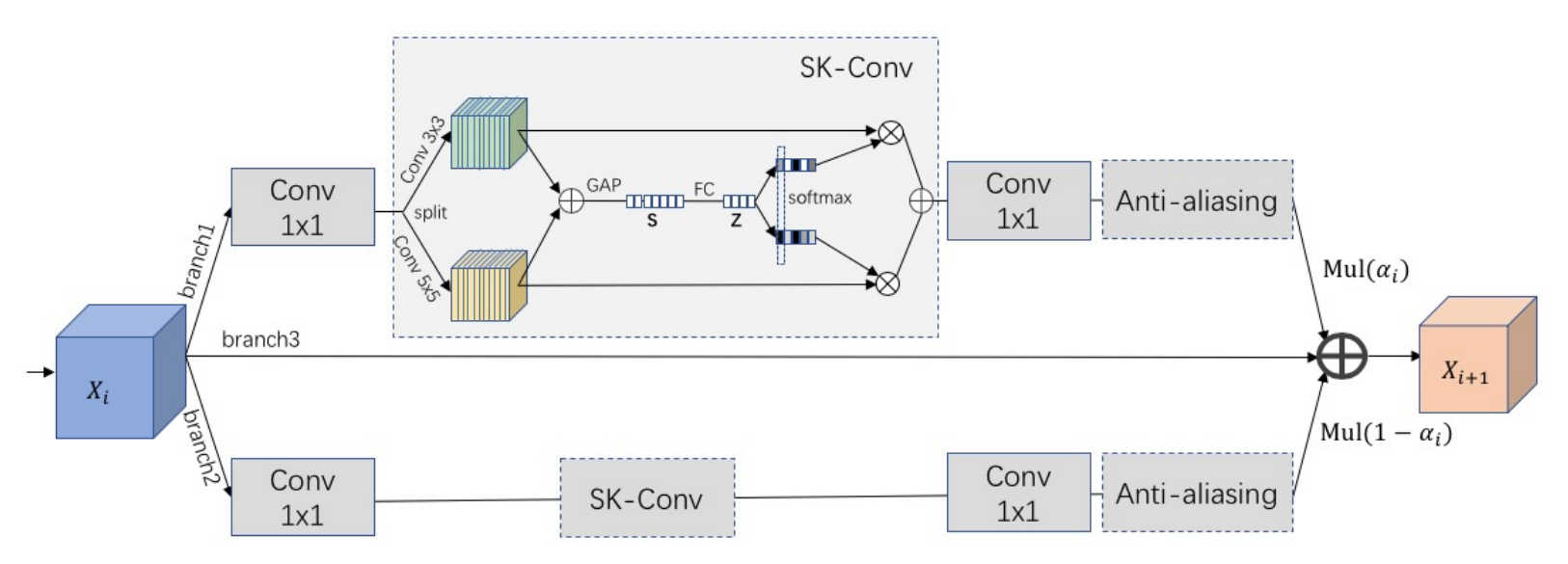

We present the first edition of the "VIPriors: Visual Inductive Priors for Data-Efficient Deep Learning" challenges. We offer four data-impaired challenges in which models are trained from scratch on a training set reduced to a fraction of the full set. Furthermore, to encourage data-efficient solutions, we prohibit the use of pre-trained models and other transfer learning techniques. The majority of top-ranking solutions make heavy use of data augmentation, model ensembling, and novel and efficient network architectures to achieve significant performance increases compared to the provided baselines.
