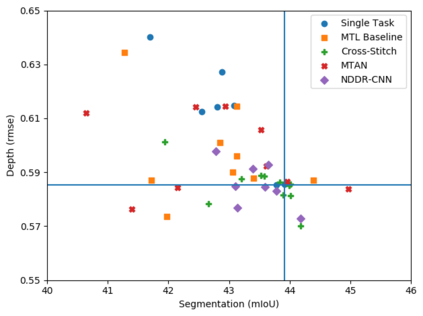

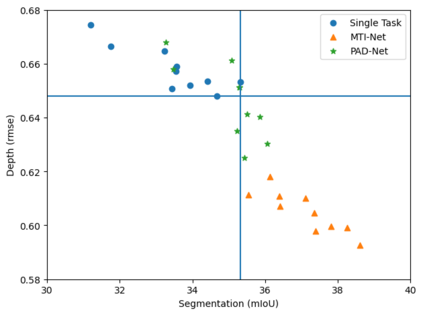

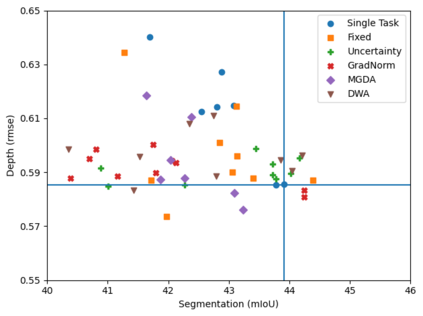

With the advent of deep learning, many dense prediction tasks, i.e. tasks that produce pixel-level predictions, have seen significant performance improvements. The typical approach is to learn these tasks in isolation, that is, a separate neural network is trained for each individual task. Yet, recent multi-task learning (MTL) techniques have shown promising results w.r.t. performance, computations and/or memory footprint, by jointly tackling multiple tasks through a learned shared representation. In this survey, we provide a well-rounded view on state-of-the-art deep learning approaches for MTL in computer vision, explicitly emphasizing on dense prediction tasks. Our contributions concern the following. First, we consider MTL from a network architecture point-of-view. We include an extensive overview and discuss the advantages/disadvantages of recent popular MTL models. Second, we examine various optimization methods to tackle the joint learning of multiple tasks. We summarize the qualitative elements of these works and explore their commonalities and differences. Finally, we provide an extensive experimental evaluation across a variety of dense prediction benchmarks to examine the pros and cons of the different methods, including both architectural and optimization based strategies.

翻译:随着深层次学习的到来,许多密集的预测任务,即产生像素水平预测的任务,都取得了显著的业绩改进。典型的方法是孤立地学习这些任务,即为每项任务培训单独的神经网络。然而,最近的多任务学习(MTL)技术已经显示出令人充满希望的结果,通过共同学习共享代表,共同处理多项任务。在这次调查中,我们对计算机视野中MTL最先进的深层次学习方法提供了全面的看法,明确强调密集的预测任务。我们的贡献涉及以下内容。首先,我们从网络结构观点的角度考虑MTL。我们包括广泛概述和讨论最近流行MTL模型的优势/劣势。第二,我们研究各种优化方法,以解决共同学习多重任务的问题。我们总结了这些工作的质量要素,并探讨了它们的共性和差异。最后,我们提供了对各种密集的预测基准的广泛实验性评估,以审查不同方法的准和最优化,包括基于建筑和最优化的战略。

相关内容

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from a third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem