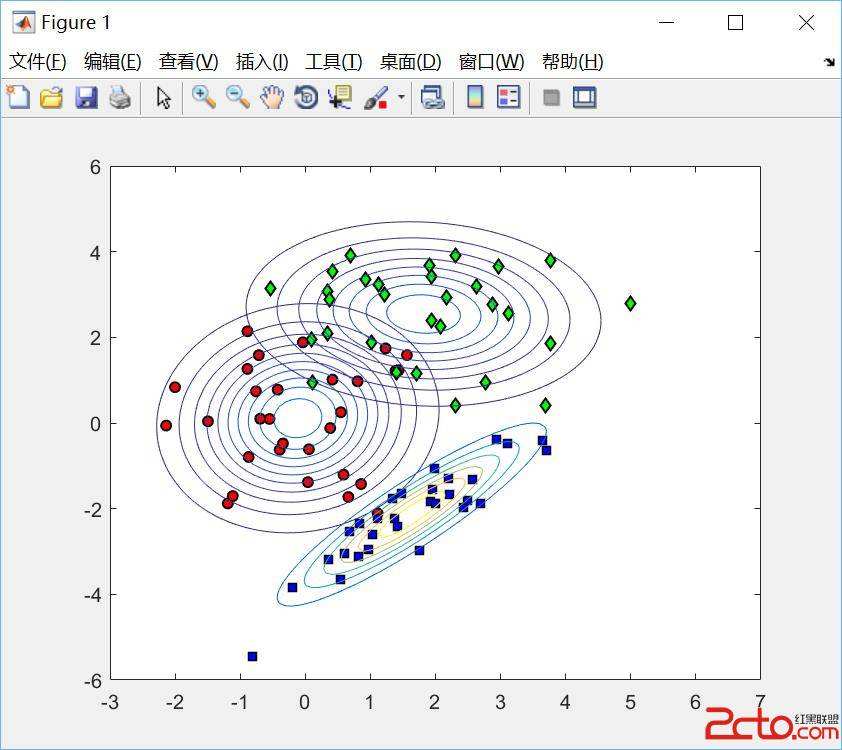

Earlier studies have shown that the classification accuracies of Bayesian networks (BNs) obtained by maximizing the conditional log likelihood (CLL) of a class variable given the feature variables were higher than those obtained by maximizing the marginal likelihood (ML). However, the performance differences between the two scores in those studies may be attributable to their use of approximate, rather than exact, learning algorithms. This paper compares the classification accuracies of BNs learned approximately using CLL with those of BNs learned exactly using ML. The results demonstrate that, for large data, the classification accuracies of BNs obtained by maximizing ML are higher than those obtained by maximizing CLL. However, the results also demonstrate that the classification accuracies of BNs learned exactly using ML are much worse than those of other methods when the sample size is small and the class variable has numerous parents. To resolve this problem, we propose an augmented naive Bayes classifier (ANB) learned exactly, which guarantees that the class variable has no parents. The proposed method is guaranteed to asymptotically estimate the same class posterior as the exactly learned BN. Comparison experiments demonstrated the superior performance of the proposed method.
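To make the two scores concrete, here is a minimal sketch that evaluates both on a fixed discrete BN structure. It assumes the ML score is the BDeu marginal likelihood with equivalent sample size 1 and that CLL is computed with posterior-mean parameters under the same prior; the abstract names neither the prior nor the parameter estimator, so both are assumptions. All function names (`bdeu_family`, `log_ml`, `class_posterior`, `cll`, `is_anb`) and the toy data are hypothetical. The sketch only scores given structures; it does not perform the exact structure search the paper relies on.

```python
import math
from collections import Counter

# Rows are tuples of small integers; card[v] is the number of states of variable v.
# A structure maps each variable to the tuple of its parents.

def _family_counts(data, child, parents):
    """Count N_ijk (parent configuration j, child state k) and N_ij."""
    n_ijk = Counter((tuple(row[p] for p in parents), row[child]) for row in data)
    n_ij = Counter(tuple(row[p] for p in parents) for row in data)
    return n_ijk, n_ij

def bdeu_family(data, child, parents, card, ess=1.0):
    """Log BDeu marginal likelihood of one family (assumed prior; ess = equivalent sample size)."""
    r = card[child]
    q = math.prod(card[p] for p in parents)  # 1 when there are no parents
    a_ij, a_ijk = ess / q, ess / (q * r)
    n_ijk, n_ij = _family_counts(data, child, parents)
    score = 0.0
    for j, n in n_ij.items():  # unobserved parent configurations contribute 0
        score += math.lgamma(a_ij) - math.lgamma(a_ij + n)
        for k in range(r):
            score += math.lgamma(a_ijk + n_ijk[(j, k)]) - math.lgamma(a_ijk)
    return score

def log_ml(data, structure, card, ess=1.0):
    """Log marginal likelihood of the whole network: the sum of its family scores."""
    return sum(bdeu_family(data, v, pa, card, ess) for v, pa in structure.items())

def class_posterior(row, data, structure, card, cls, ess=1.0):
    """P(class | remaining variables of row), using posterior-mean parameters (an assumption)."""
    fams = {v: (math.prod(card[p] for p in pa), _family_counts(data, v, pa))
            for v, pa in structure.items()}
    logs = []
    for c in range(card[cls]):
        x = list(row)
        x[cls] = c  # evaluate the joint with each candidate class value
        lj = 0.0
        for v, pa in structure.items():
            q, (n_ijk, n_ij) = fams[v]
            j = tuple(x[p] for p in pa)
            lj += math.log((n_ijk[(j, x[v])] + ess / (q * card[v])) / (n_ij[j] + ess / q))
        logs.append(lj)
    m = max(logs)  # log-sum-exp normalisation over class values
    z = sum(math.exp(l - m) for l in logs)
    return [math.exp(l - m) / z for l in logs]

def cll(data, structure, card, cls, ess=1.0):
    """Conditional log likelihood: sum over rows of log P(true class | features)."""
    return sum(math.log(class_posterior(row, data, structure, card, cls, ess)[row[cls]])
               for row in data)

def is_anb(structure, cls):
    """ANB constraint: the class has no parents and is a parent of every feature."""
    return len(structure[cls]) == 0 and all(cls in pa for v, pa in structure.items() if v != cls)

# Hypothetical toy data: variable 0 is the class C; variables 1 and 2 are binary features.
card = {0: 2, 1: 2, 2: 2}
data = [(0, 0, 0), (0, 0, 1), (0, 0, 0), (1, 1, 1), (1, 1, 0), (1, 1, 1)]
naive_bayes = {0: (), 1: (0,), 2: (0,)}
anb = {0: (), 1: (0,), 2: (0, 1)}          # augmenting edge X1 -> X2
class_has_parent = {0: (1,), 1: (), 2: (0,)}

for name, s in [("naive Bayes", naive_bayes), ("ANB", anb), ("class with parent", class_has_parent)]:
    print(name, is_anb(s, 0), round(log_ml(data, s, card), 3), round(cll(data, s, card, 0), 3))
```

Because every ANB structure keeps the class parentless, its posterior is computed from the class prior and each feature's family given the class, which is the restriction the proposed method imposes during exact learning.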

翻译:先前的研究显示,由于特性变量,通过最大限度地增加一个等级变量的有条件日志概率(CLL)获得的Bayesian网络的分类精度,根据特性变量,比通过最大限度地增加边际可能性(ML)获得的分类精度要高。然而,早期研究中两个得分的性能差异可能归因于它们使用了近似学习算法,而不是精确的算法。本文比较了Besian网络的分类精度,即使用CLLL的近似学习与使用ML的精确学习。结果显示,通过最大限度地提高一个等级变量而获得的BNCL获得的分类精度比通过为大数据最大限度地增加CLLL获得的分类精度要高。但是,结果还表明,使用ML的精确学习BN的精度分率比在样本大小小和等级变量有众多父母时采用的其他方法差得多。为了解决问题,我们建议精确的学习增强天种贝贝的分类精度,这能确保没有父母参与的等级变量。建议的方法保证了对相同的级图像的精确比较方法进行模拟估计。