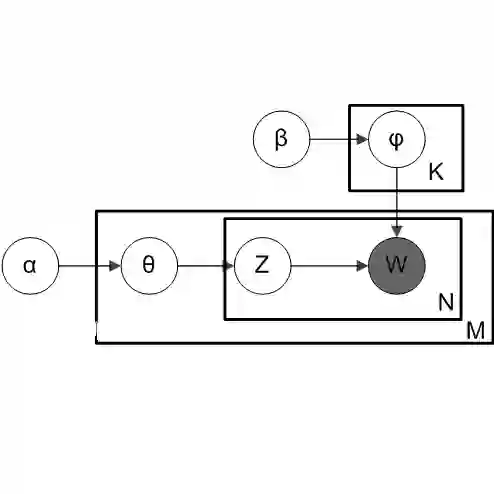

Labeled Latent Dirichlet Allocation (LLDA) is an extension of the standard unsupervised Latent Dirichlet Allocation (LDA) algorithm to multi-label learning tasks. Previous work has shown it to perform on par with other state-of-the-art multi-label methods. However, LLDA runs into scalability issues as label set sizes grow. In this work, we introduce Subset LLDA, a simple variant of the standard LLDA algorithm. It scales effectively to problems with hundreds of thousands of labels, and it also improves on the previous state of the art among LLDA methods. We conduct extensive experiments on eight data sets, with label set sizes ranging from hundreds to hundreds of thousands. We compare our proposed algorithm with the previously proposed LLDA algorithms (Prior-LDA, Dep-LDA) and with the state of the art in extreme multi-label classification. The results show a consistent advantage of our method over the other LLDA algorithms and competitive results against the extreme multi-label classification algorithms.
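The abstract does not spell out how LLDA adapts LDA to multi-label data. As a rough illustration only, here is a minimal collapsed Gibbs sampling sketch of plain Labeled LDA. It assumes the common formulation in which each label acts as one topic and a training document's tokens may only be assigned to that document's own labels. This is not Subset LLDA, Prior-LDA, or Dep-LDA, none of which is detailed here. The function names (`train_labeled_lda`, `infer_label_proportions`), the hyperparameter defaults `alpha` and `beta`, and the fixed-`phi` inference step are all illustrative assumptions.

```python
import random
from collections import defaultdict


def train_labeled_lda(docs, labels, vocab_size, num_labels,
                      alpha=0.1, beta=0.01, iters=50, seed=0):
    """Illustrative collapsed Gibbs sampler for plain Labeled LDA (one topic per label).

    docs:   list of documents, each a list of word ids in [0, vocab_size)
    labels: one label-id list per document; each must be non-empty, without duplicates
    Returns the final token assignments z and the label-word distributions phi.
    """
    rng = random.Random(seed)
    n_wk = [[0] * num_labels for _ in range(vocab_size)]  # word-label counts
    n_k = [0] * num_labels                                # tokens assigned to each label
    n_dk = [defaultdict(int) for _ in docs]               # per-document label counts
    z = []

    # Initialise every token with a random label drawn from its own document's labels.
    for d, (words, labs) in enumerate(zip(docs, labels)):
        z_d = []
        for w in words:
            k = rng.choice(labs)
            z_d.append(k)
            n_wk[w][k] += 1
            n_k[k] += 1
            n_dk[d][k] += 1
        z.append(z_d)

    for _ in range(iters):
        for d, (words, labs) in enumerate(zip(docs, labels)):
            for i, w in enumerate(words):
                k = z[d][i]
                # Remove the token's current assignment from all counts.
                n_wk[w][k] -= 1
                n_k[k] -= 1
                n_dk[d][k] -= 1
                # Labeled LDA constraint: only this document's labels are candidates.
                weights = [(n_dk[d][j] + alpha) * (n_wk[w][j] + beta)
                           / (n_k[j] + vocab_size * beta) for j in labs]
                k = rng.choices(labs, weights=weights)[0]
                z[d][i] = k
                n_wk[w][k] += 1
                n_k[k] += 1
                n_dk[d][k] += 1

    # Smoothed label-word distributions; each row sums to 1.
    phi = [[(n_wk[w][k] + beta) / (n_k[k] + vocab_size * beta)
            for w in range(vocab_size)] for k in range(num_labels)]
    return z, phi


def infer_label_proportions(words, phi, alpha=0.1, iters=50, seed=0):
    """Estimate label proportions for an unlabeled document, keeping phi fixed."""
    rng = random.Random(seed)
    num_labels = len(phi)
    all_labels = list(range(num_labels))
    n_k = [0] * num_labels
    z = []
    for w in words:
        k = rng.randrange(num_labels)
        z.append(k)
        n_k[k] += 1
    for _ in range(iters):
        for i, w in enumerate(words):
            n_k[z[i]] -= 1
            # No label set is known at test time, so every label is scored:
            # O(num_labels) work per token.
            weights = [(n_k[j] + alpha) * phi[j][w] for j in all_labels]
            k = rng.choices(all_labels, weights=weights)[0]
            z[i] = k
            n_k[k] += 1
    total = len(words) + num_labels * alpha
    return [(n_k[j] + alpha) / total for j in all_labels]


if __name__ == "__main__":
    docs = [[0, 1, 0], [2, 3], [0, 2]]
    labels = [[0], [1], [0, 1]]
    z, phi = train_labeled_lda(docs, labels, vocab_size=4, num_labels=2)
    print(z)
    print(infer_label_proportions([3, 3, 3], phi))
```

In this sketch, `infer_label_proportions` scores each token against every label, so the per-token cost grows linearly with the size of the label set. That is one plausible reason plain LLDA becomes expensive with hundreds of thousands of labels, although the abstract does not state the exact bottleneck that Subset LLDA addresses.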
