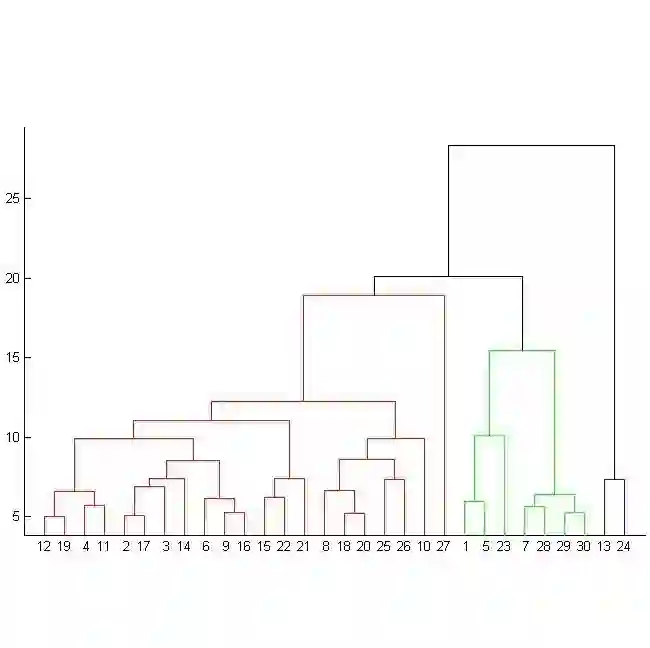

Predictive states for stochastic processes are a nonparametric and interpretable construct with relevance across a multitude of modeling paradigms. Recent progress on the self-supervised reconstruction of predictive states from time-series data has focused on the use of reproducing kernel Hilbert spaces. Here, we examine how Wasserstein distances may be used to detect predictive equivalences in symbolic data. We compute Wasserstein distances between distributions over sequences ("predictions"), using a finite-dimensional embedding of sequences based on the Cantor set for the underlying geometry. We show that exploratory data analysis using the resulting geometry, via hierarchical clustering and dimension reduction, provides insight into the temporal structure of processes ranging from the relatively simple (e.g., finite-state hidden Markov models) to the very complex (e.g., infinite-state indexed grammars).
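The pipeline summarized above (estimate a "prediction" for each past, embed futures into a Cantor-like set, compare predictions with a Wasserstein distance) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `cantor_embed`, `predictions`, `wasserstein_1d` and `prediction_distance` are hypothetical; the embedding shown is a one-dimensional Cantor-style map (symbol `x` in an alphabet of size `k` becomes digit `2x` in base `2k-1`), which may differ from the finite-dimensional embedding used in the work; and because the embedding is one-dimensional, the Wasserstein-1 distance reduces to the area between the two cumulative distribution functions.

```python
from collections import defaultdict


def cantor_embed(seq, alphabet_size=2):
    """Map a finite symbol sequence to a point of a Cantor-like subset of [0, 1].

    Assumed construction: symbol x_i in {0, ..., k-1} becomes digit 2*x_i in
    base (2k - 1), so sequences that agree on a longer prefix are mapped
    closer together. Requires alphabet_size >= 2.
    """
    base = 2 * alphabet_size - 1
    return sum(2 * x / base ** (i + 1) for i, x in enumerate(seq))


def predictions(symbols, past_len, future_len):
    """Empirical future distributions: {past: {future: count}}."""
    table = defaultdict(lambda: defaultdict(int))
    for t in range(past_len, len(symbols) - future_len + 1):
        past = tuple(symbols[t - past_len:t])
        future = tuple(symbols[t:t + future_len])
        table[past][future] += 1
    # Convert to plain dicts so unseen pasts raise KeyError instead of appearing.
    return {past: dict(futs) for past, futs in table.items()}


def wasserstein_1d(u_vals, u_wts, v_vals, v_wts):
    """Wasserstein-1 distance between two weighted point sets on the real line,
    computed as the integral of |F_u(x) - F_v(x)| dx (weights are normalized)."""
    pts = sorted(set(u_vals) | set(v_vals))
    su, sv = sum(u_wts), sum(v_wts)

    def cdf(vals, wts, total, x):
        return sum(w for v, w in zip(vals, wts) if v <= x) / total

    dist = 0.0
    # Both CDFs are constant on each interval [a, b) between support points.
    for a, b in zip(pts, pts[1:]):
        dist += abs(cdf(u_vals, u_wts, su, a) - cdf(v_vals, v_wts, sv, a)) * (b - a)
    return dist


def prediction_distance(pred_a, pred_b, alphabet_size=2):
    """W1 distance between two predictions, with Cantor-embedded futures as geometry."""
    futures_a, futures_b = list(pred_a), list(pred_b)
    return wasserstein_1d(
        [cantor_embed(f, alphabet_size) for f in futures_a],
        [pred_a[f] for f in futures_a],
        [cantor_embed(f, alphabet_size) for f in futures_b],
        [pred_b[f] for f in futures_b],
    )


if __name__ == "__main__":
    # Period-2 process 0101...: the pasts "0" and "1" predict different futures.
    pred = predictions([0, 1] * 5, past_len=1, future_len=2)
    print(prediction_distance(pred[(0,)], pred[(1,)]))  # approximately 4/9
```

Pasts whose predictions lie at (near) zero distance are candidates for the same predictive state. Computing the full pairwise distance matrix over observed pasts gives the kind of geometry that could then be passed to a hierarchical clustering routine (for example `scipy.cluster.hierarchy.linkage` on a condensed distance vector) or to a dimension-reduction method for exploratory analysis.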

翻译:用于随机过程的预测状态是非参数和可解释的构造,在多种模型模式中具有相关性。最近从侧重于复制内核Hilbert空间的时间序列数据中,在自我监督地重建预测状态方面取得了进展。在这里,我们研究如何利用瓦塞斯坦距离来探测符号数据中的预测等值。我们计算瓦塞斯坦在序列(“频度”)分布之间的距离,使用基于Cantor的基于根基几何的定序的有限尺寸嵌入。我们显示,利用由此得出的几何数据,通过等级组合和维度减少进行探索性数据分析,可以洞察从相对简单的(例如,隐藏的有限状态马尔科夫模型)到非常复杂的(例如,无限状态指数语法)等过程的时间结构。