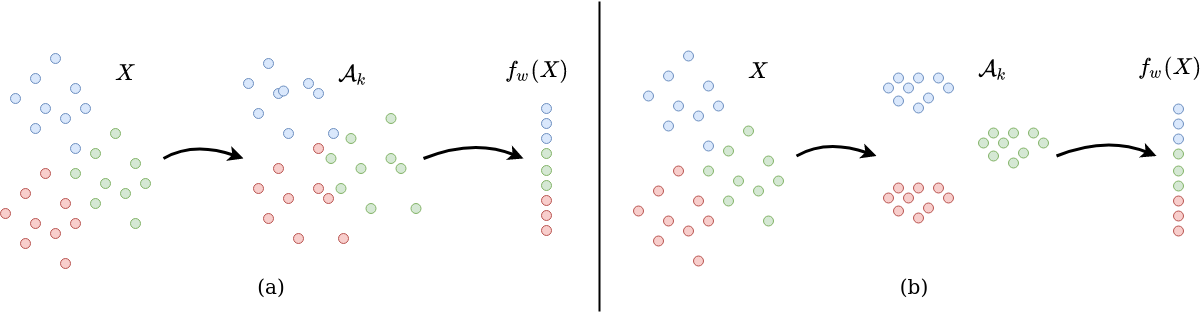

Deep neural networks can generalize despite being significantly overparametrized. Recent research has examined this phenomenon from various viewpoints, such as norm-based, PAC-Bayes-based, and margin-based analysis, and has used these viewpoints to derive bounds on the generalization error or measures that predict the generalization gap. In this work, we interpret generalization from the perspective of the quality of the internal representations of deep neural networks, drawing on neuroscientific theories of how the human visual system creates invariant and untangled object representations. Instead of providing theoretical bounds, we present practical complexity measures that can be computed ad hoc to uncover the generalization behaviour of deep models. We also describe in detail our solution that won the competition on Predicting Generalization in Deep Learning held at NeurIPS 2020. An implementation of our solution is available at https://github.com/parthnatekar/pgdl.
