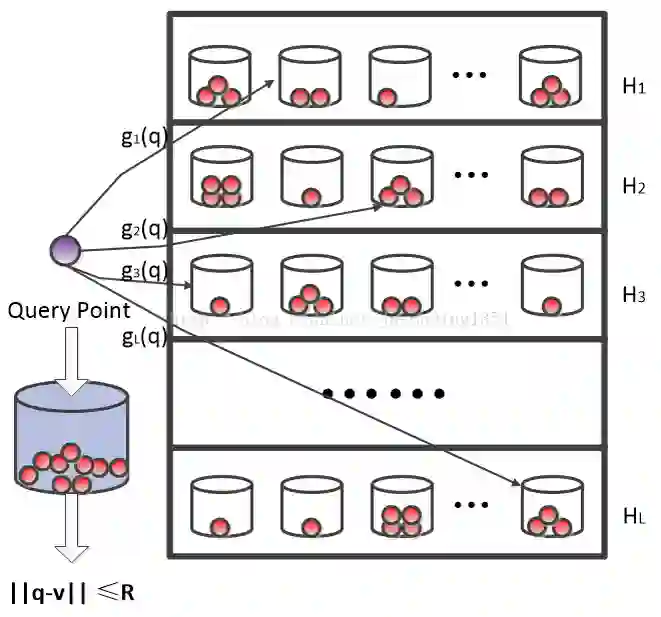

Embedding representation learning via neural networks is a core foundation of modern similarity-based search. While much effort has gone into developing algorithms that learn binary Hamming code representations for search efficiency, these methods still require a linear scan of the entire dataset for each query and sacrifice search accuracy through binarization. To this end, we consider the problem of learning, directly and end-to-end, a quantizable embedding representation together with a sparse binary hash code. The learned code can be used to construct an efficient hash table that not only significantly reduces the number of data points searched but also achieves state-of-the-art search accuracy, outperforming previous state-of-the-art deep metric learning methods. We also show that the optimal sparse binary hash code in a mini-batch can be computed exactly in polynomial time by solving a minimum cost flow problem. Our results on the Cifar-100 and ImageNet datasets show state-of-the-art search accuracy in the precision@k and NMI metrics while providing up to 98× and 478× search speedups, respectively, over exhaustive linear search. The source code is available at https://github.com/maestrojeong/Deep-Hash-Table-ICML18
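The search-reduction idea can be illustrated with a minimal sketch. This is not the authors' implementation (that lives in the linked repository); it assumes that the sparse binary hash code of an embedding is the set of indices of its `k` largest activations, that items are bucketed by exact code match, and that candidates in the query's bucket are re-ranked by Euclidean distance. The function names `sparse_code`, `build_table`, and `search` are hypothetical.

```python
import math
from collections import defaultdict


def sparse_code(embedding, k):
    """Hypothetical encoding: the k-sparse binary code is represented by the
    sorted indices of the k largest activations of the embedding."""
    top = sorted(range(len(embedding)), key=lambda j: embedding[j], reverse=True)[:k]
    return tuple(sorted(top))


def build_table(embeddings, k):
    """Hash table mapping each k-sparse code to the ids of items that share it."""
    table = defaultdict(list)
    for item_id, emb in enumerate(embeddings):
        table[sparse_code(emb, k)].append(item_id)
    return table


def search(query, embeddings, table, k, top_n):
    """Scan only the bucket whose code matches the query's code (assumed
    exact-match lookup), then re-rank those candidates by Euclidean distance.
    Returns the top_n ids and the number of candidates actually examined."""
    candidates = table.get(sparse_code(query, k), [])
    ranked = sorted(candidates, key=lambda i: math.dist(query, embeddings[i]))
    return ranked[:top_n], len(candidates)
```

Because only the query's bucket is scanned, the per-query cost scales with the bucket size rather than with the dataset size, so how evenly the learned codes spread items across buckets largely determines the speedup over a linear scan. The retrieval rule used in the paper may differ from the exact-match lookup assumed here.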
