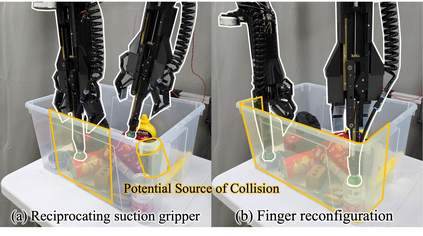

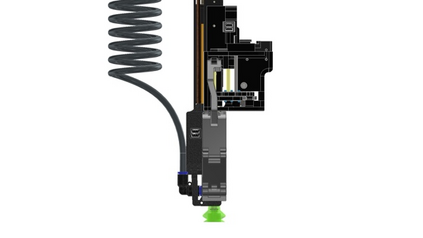

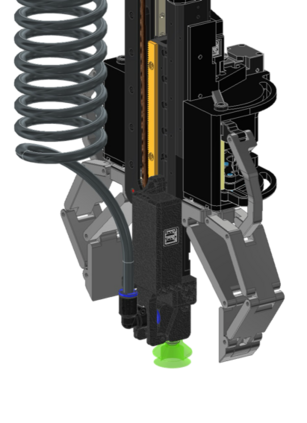

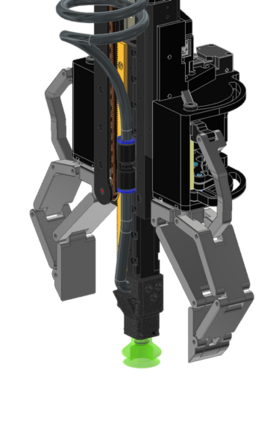

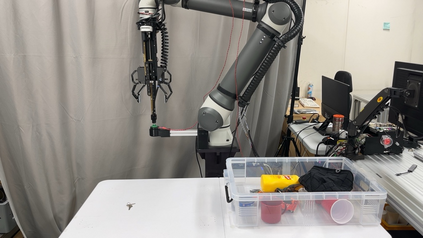

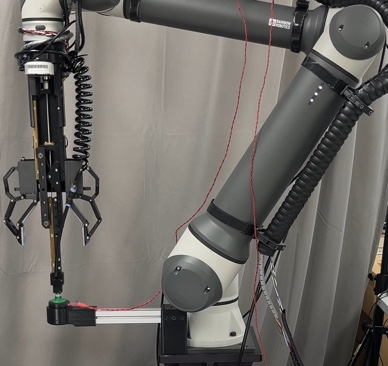

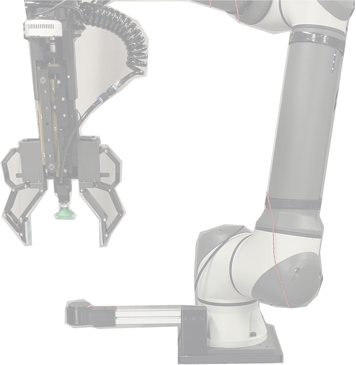

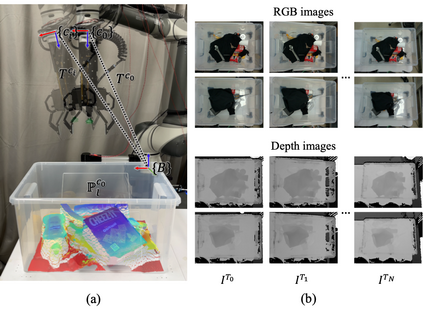

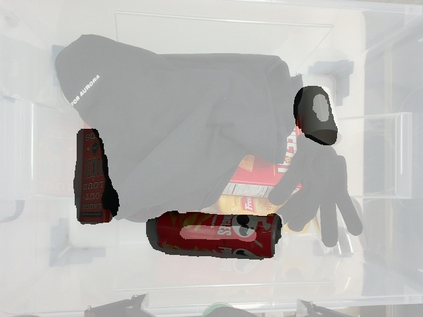

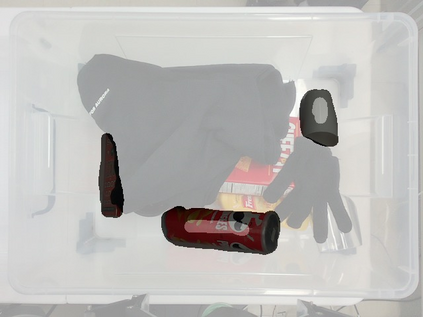

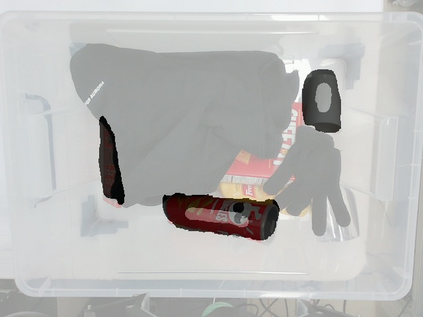

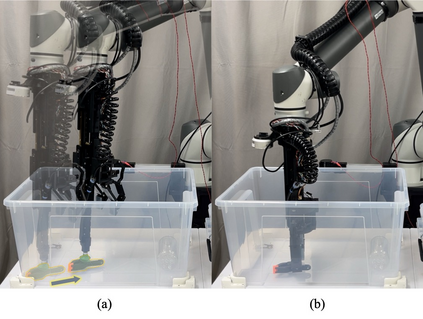

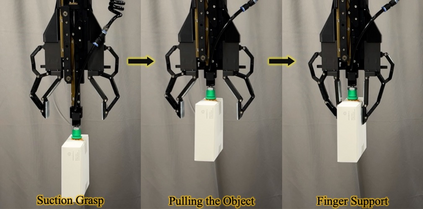

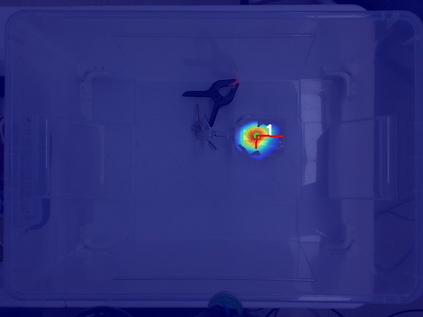

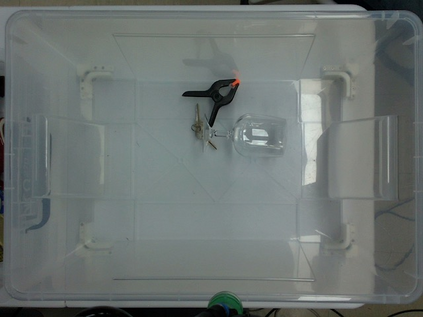

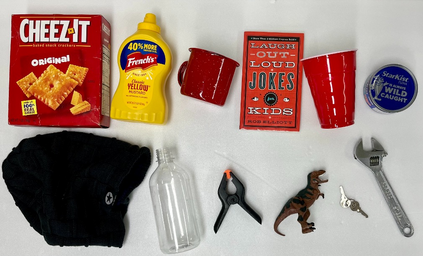

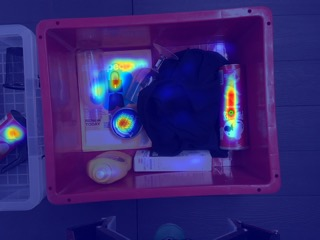

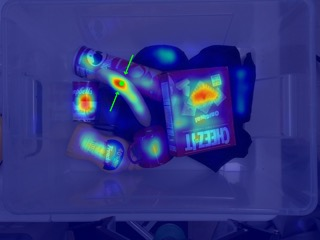

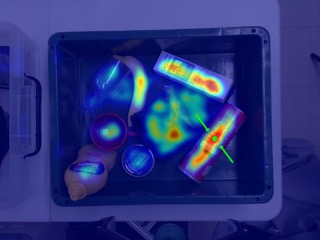

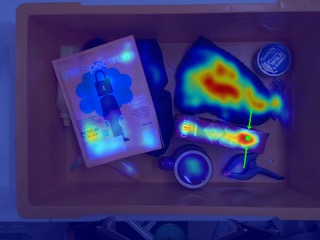

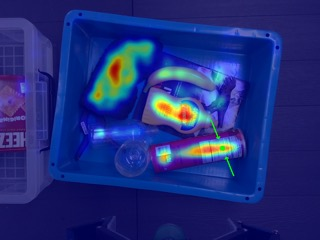

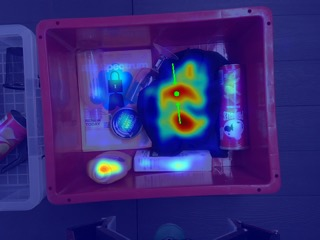

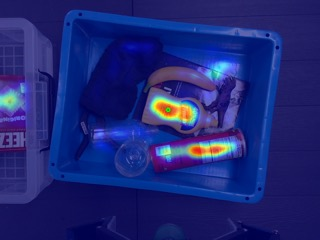

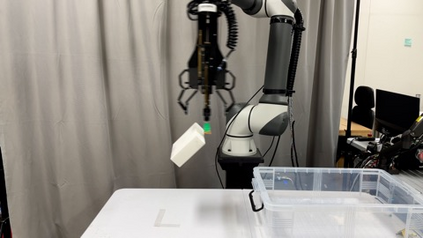

Robotic grasping is an essential capability that enables robots to physically interact with their surroundings. Despite extensive research, challenges remain due to the diverse shapes and properties of target objects, sensing inaccuracies, and potential collisions with the environment. In this work, we propose a method for effective grasping in cluttered bin-picking environments, where these challenges intersect. We use a multi-functional gripper that combines suction and finger grasping to handle a wide range of objects. We also present an active gripper adaptation strategy that minimizes collisions between the gripper hardware and the surrounding environment by leveraging the reciprocating suction cup and reconfigurable finger motion. To fully exploit the gripper's capabilities, we built a neural network that detects suction and finger grasp points from a single input RGB-D image. This network is trained on a large-scale synthetic dataset generated in simulation. In addition, we propose an efficient approach to constructing a real-world dataset that facilitates grasp point detection on various objects with diverse characteristics. Experimental results show that the proposed method can grasp objects in cluttered bin-picking scenarios and prevent collisions with environmental constraints such as a corner of the bin. The proposed method demonstrated its effectiveness in the 9th Robotic Grasping and Manipulation Competition (RGMC), held at ICRA 2024.
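To make the interplay between the two grasp modes and collision avoidance concrete, the following is a minimal illustrative sketch, not the implementation used in this work. It assumes a detector has already produced scored suction and finger grasp candidates in the bin frame, and it filters them by a simple distance-to-wall test before picking the best one. The names `GraspCandidate`, `CLEARANCE`, `wall_margin`, and `select_grasp`, as well as the clearance values, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class GraspCandidate:
    kind: str     # "suction" or "finger" (hypothetical labels)
    x: float      # grasp point in the bin frame, metres
    y: float
    score: float  # detector confidence in [0, 1]


# Assumed horizontal clearance each mode needs around the grasp point (metres).
# The values are illustrative: an extended suction cup is assumed to be
# slimmer than the finger mechanism, so it can work closer to a wall.
CLEARANCE = {"suction": 0.02, "finger": 0.06}


def wall_margin(c: GraspCandidate, bin_size: Tuple[float, float]) -> float:
    """Distance from the grasp point to the nearest wall of a rectangular bin."""
    width, depth = bin_size
    return min(c.x, width - c.x, c.y, depth - c.y)


def select_grasp(
    candidates: List[GraspCandidate], bin_size: Tuple[float, float]
) -> Optional[GraspCandidate]:
    """Return the highest-scoring candidate whose mode fits near the walls."""
    feasible = [
        c for c in candidates if wall_margin(c, bin_size) >= CLEARANCE[c.kind]
    ]
    return max(feasible, key=lambda c: c.score, default=None)


if __name__ == "__main__":
    bin_size = (0.60, 0.40)  # width, depth in metres (assumed)
    candidates = [
        GraspCandidate("finger", 0.03, 0.20, 0.9),   # too close to a wall
        GraspCandidate("suction", 0.03, 0.20, 0.7),  # fits with the slim cup
        GraspCandidate("finger", 0.30, 0.20, 0.6),   # centre of the bin
    ]
    print(select_grasp(candidates, bin_size))
```

In this toy setting, the highest-scoring finger grasp is rejected because it sits 3 cm from a wall, and the suction candidate at the same point is selected instead. A real system would reason about the full 3D gripper geometry and approach direction rather than a single planar margin.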
