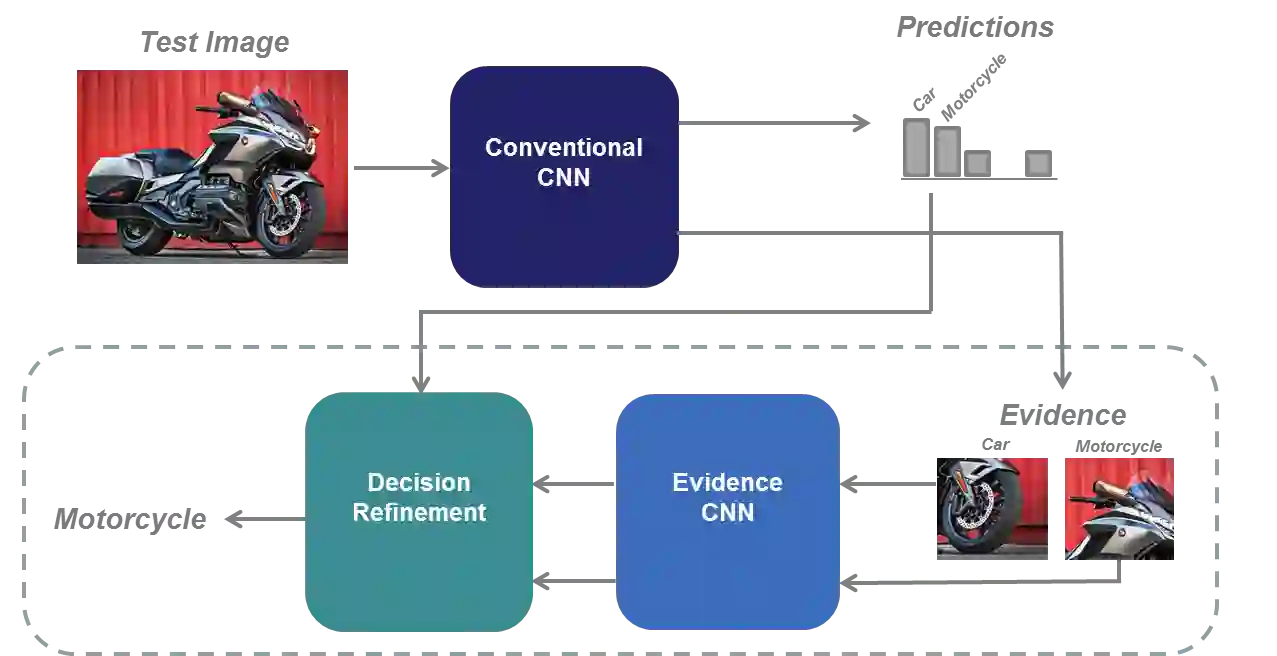

We propose Guided Zoom, an approach that uses spatial grounding to make more informed predictions. It does so by making sure the model has "the right reasons" for a prediction, defined as reasons that are coherent with those used to make similar correct decisions at training time. The reason, or evidence, upon which a deep neural network makes a prediction is defined to be the spatial grounding, in pixel space, for a specific class-conditional probability in the model output. Guided Zoom questions how reasonable the evidence used to make a prediction is. In state-of-the-art deep single-label classification models, the top-k (k = 2, 3, 4, ...) accuracy is usually significantly higher than the top-1 accuracy. This gap is more evident in fine-grained datasets, where differences between classes are quite subtle. We show that Guided Zoom refines a model's classification accuracy on three fine-grained classification datasets. We also explore the complementarity of different grounding techniques by comparing their ensemble to an adversarial erasing approach that iteratively reveals the next most discriminative evidence.
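The gap between top-1 and top-k accuracy mentioned above can be made concrete with a small sketch. This is illustrative only and not part of Guided Zoom itself: the function name `top_k_accuracy` and the toy scores and labels are assumptions chosen for the example, not data from the paper.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Rank class indices from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Hypothetical class scores for four samples over three classes.
scores = [
    [0.50, 0.30, 0.20],  # true class 0: correct at top-1
    [0.40, 0.45, 0.15],  # true class 0: missed at top-1, found at top-2
    [0.10, 0.35, 0.55],  # true class 1: missed at top-1, found at top-2
    [0.60, 0.10, 0.30],  # true class 1: found only at top-3
]
labels = [0, 0, 1, 1]

for k in (1, 2, 3):
    print(f"top-{k} accuracy: {top_k_accuracy(scores, labels, k):.2f}")
```

In this toy data the correct class is often ranked second, so top-2 accuracy is well above top-1 accuracy. That is the situation in which re-examining the evidence behind the top-k candidates has room to change the final prediction.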
