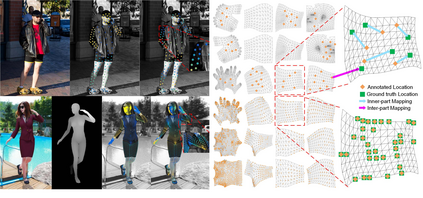

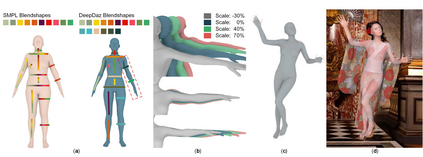

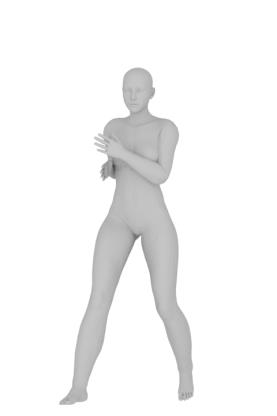

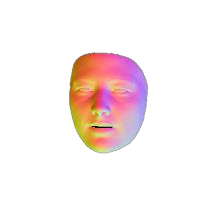

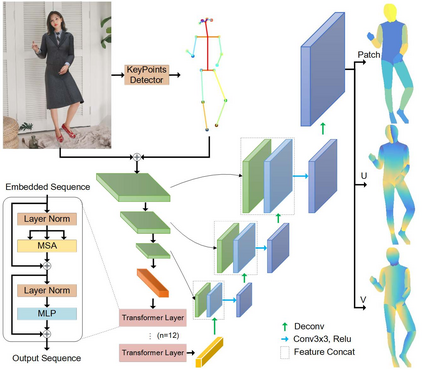

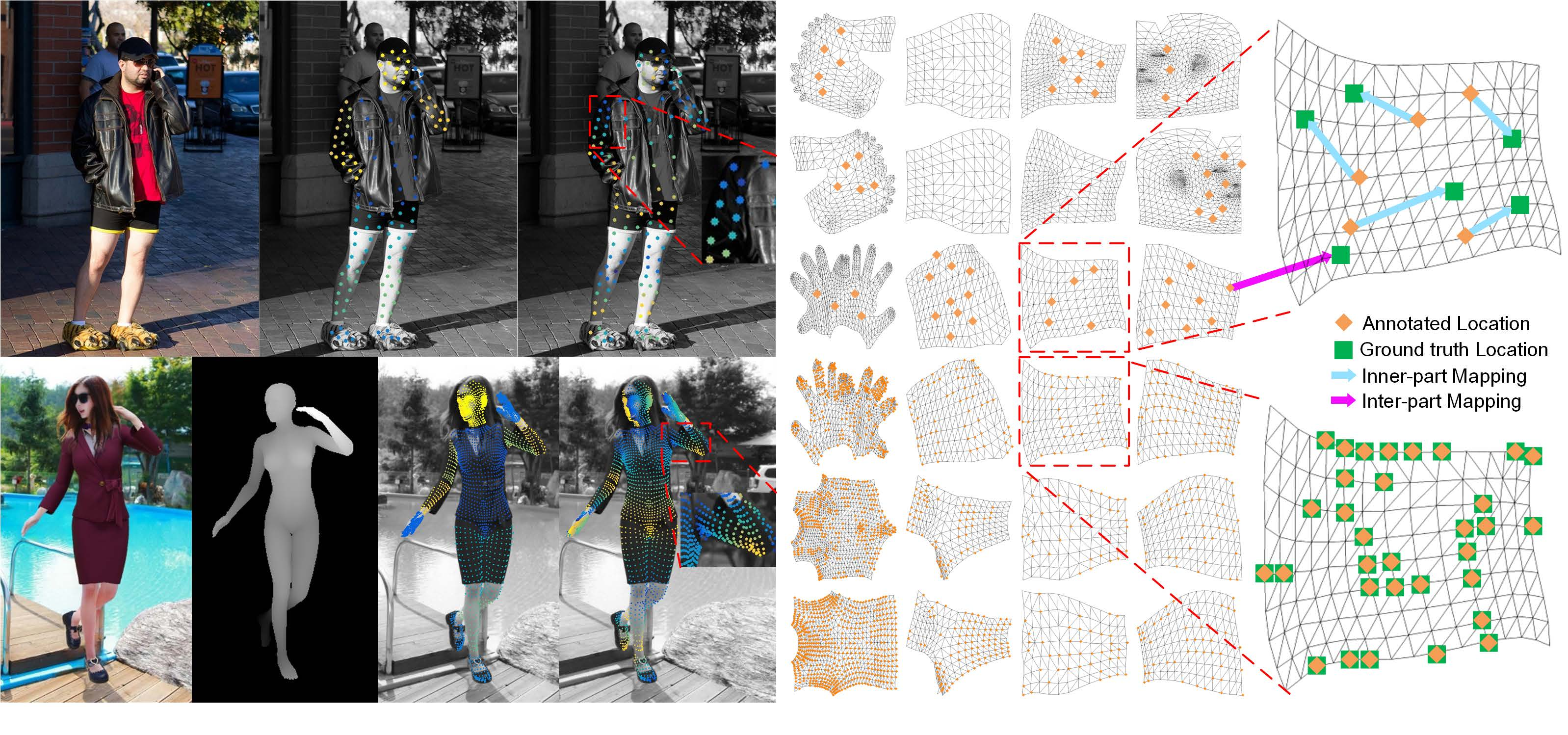

Recovering dense human poses from images plays a critical role in establishing image-to-surface correspondences between RGB images and the 3D surface of the human body, and serves as the foundation of many real-world applications, such as virtual humans and monocular-to-3D reconstruction. However, the popular DensePose-COCO dataset relies on a sophisticated manual annotation system, which severely limits the acquisition of denser and more accurate pose annotations. In this work, we introduce a new 3D human-body model with a series of decoupled parameters that can freely control the generation of the body. Furthermore, we build a data generation system based on this decoupled 3D model and construct an ultra-dense synthetic benchmark, UltraPose, containing around 1.3 billion corresponding points. Compared to the existing manually annotated DensePose-COCO dataset, the synthetic UltraPose provides ultra-dense image-to-surface correspondences with no annotation cost or annotation error. Our proposed UltraPose provides the largest benchmark and data resource for improving a model's ability to predict accurate dense poses. To promote future research in this field, we also propose a transformer-based method to model the dense correspondence between the 2D and 3D worlds. The proposed model, trained on synthetic UltraPose, can be applied to real-world scenarios, indicating the effectiveness of our benchmark and model.
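To make "image-to-surface correspondence" concrete: in DensePose-COCO, each annotated point links an image pixel (x, y) to a body-part index I (one of 24 surface patches) and (U, V) coordinates on that patch. The sketch below assumes the same IUV encoding for a dense label map; this is an assumption, since the abstract does not specify how UltraPose stores its labels. It flattens the map into one correspondence per body pixel, which is the sense in which synthetic labels are "ultra dense" compared with sparsely sampled manual annotations. The function name `iuv_to_correspondences` is hypothetical.

```python
import numpy as np


def iuv_to_correspondences(iuv: np.ndarray) -> np.ndarray:
    """Flatten an H x W x 3 IUV map into (x, y, I, U, V) rows, one per body pixel.

    Assumed layout (DensePose-style, not necessarily UltraPose's own format):
      channel 0: body-part index I, 0 = background, 1..24 = surface patches
      channel 1: U coordinate on that patch, in [0, 1]
      channel 2: V coordinate on that patch, in [0, 1]
    """
    if iuv.ndim != 3 or iuv.shape[2] != 3:
        raise ValueError("expected an H x W x 3 array")
    part = iuv[..., 0].astype(np.int64)
    ys, xs = np.nonzero(part > 0)  # foreground (body) pixels only, row-major order
    u = iuv[ys, xs, 1]
    v = iuv[ys, xs, 2]
    # Stack mixed int/float columns; NumPy promotes them to a common float dtype.
    return np.stack([xs, ys, part[ys, xs], u, v], axis=1).astype(np.float64)


# Toy 4 x 4 image: a 2 x 2 patch of body part 2 with a simple UV gradient.
iuv = np.zeros((4, 4, 3), dtype=np.float32)
iuv[1:3, 1:3, 0] = 2
iuv[1:3, 1:3, 1] = [[0.0, 1.0], [0.0, 1.0]]  # U varies along x
iuv[1:3, 1:3, 2] = [[0.0, 0.0], [1.0, 1.0]]  # V varies along y

corr = iuv_to_correspondences(iuv)
print(corr.shape)  # (4, 5): every body pixel yields one correspondence
```

A manual annotation pipeline would label only a sampled subset of these rows; a rendered synthetic image yields all of them for free, which is how a dataset can reach a count on the order of a billion corresponding points.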
