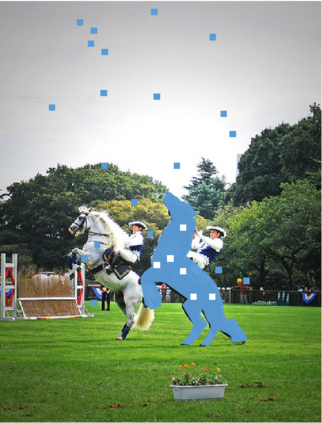

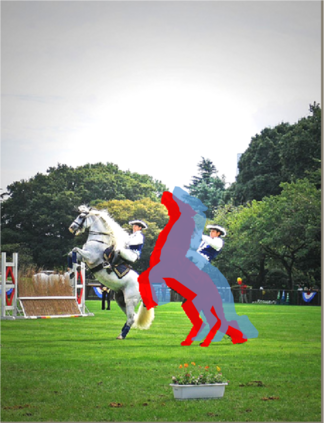

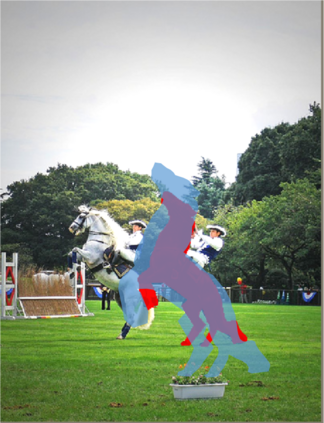

We present a mask-piloted Transformer that improves masked-attention in Mask2Former for image segmentation. The improvement is based on our observation that Mask2Former suffers from inconsistent mask predictions between consecutive decoder layers, which leads to inconsistent optimization goals and low utilization of decoder queries. To address this problem, we propose a mask-piloted training approach, which additionally feeds noised ground-truth masks into masked-attention and trains the model to reconstruct the original ones. Compared with the predicted masks used in masked-attention, the ground-truth masks serve as a pilot and effectively alleviate the negative impact of inaccurate mask predictions in Mask2Former. Based on this technique, our MP-Former achieves a remarkable performance improvement on all three image segmentation tasks (instance, panoptic, and semantic), yielding $+2.3$ AP and $+1.6$ mIoU on the Cityscapes instance and semantic segmentation tasks with a ResNet-50 backbone. Our method also significantly speeds up training, outperforming Mask2Former with half the number of training epochs on ADE20K with both ResNet-50 and Swin-L backbones. Moreover, our method introduces only a little computation during training and no extra computation during inference. Our code will be released at https://github.com/IDEA-Research/MP-Former.
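The mask-piloted idea can be illustrated with a minimal sketch. It is not the released implementation: the function names, the pixel-flip noise, and the `flip_prob` parameter are all assumptions made for illustration, since the abstract does not specify how the ground-truth masks are noised. The sketch only shows the data flow: a noised ground-truth mask restricts where a query may attend, while the clean mask remains the reconstruction target.

```python
import random


def flip_noise(mask, flip_prob, rng):
    """Return a copy of a binary mask with each pixel flipped with probability flip_prob.

    Pixel flipping is an assumed noise type, chosen only for illustration.
    """
    return [[1 - px if rng.random() < flip_prob else px for px in row] for row in mask]


def to_attention_mask(mask):
    """Flatten a binary mask into a 'blocked' list (True = the query may not attend).

    If every pixel would be blocked, allow attention everywhere instead,
    so that the attention softmax is never fully masked (an assumed safeguard).
    """
    blocked = [px == 0 for row in mask for px in row]
    if all(blocked):
        return [False] * len(blocked)
    return blocked


def build_mp_inputs(gt_masks, flip_prob=0.2, seed=0):
    """Build attention masks from noised ground-truth masks, plus clean targets.

    Returns (attn_masks, targets): one flattened attention mask per
    ground-truth mask, and the flattened original mask to reconstruct.
    """
    rng = random.Random(seed)
    attn_masks, targets = [], []
    for gt in gt_masks:
        noised = flip_noise(gt, flip_prob, rng)
        attn_masks.append(to_attention_mask(noised))
        targets.append([px for row in gt for px in row])
    return attn_masks, targets
```

In a real decoder these attention masks would be used for an extra group of queries alongside the usual ones whose masks come from the previous layer's predictions. Because that extra group exists only during training, this is consistent with the abstract's claim of no extra computation at inference.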
