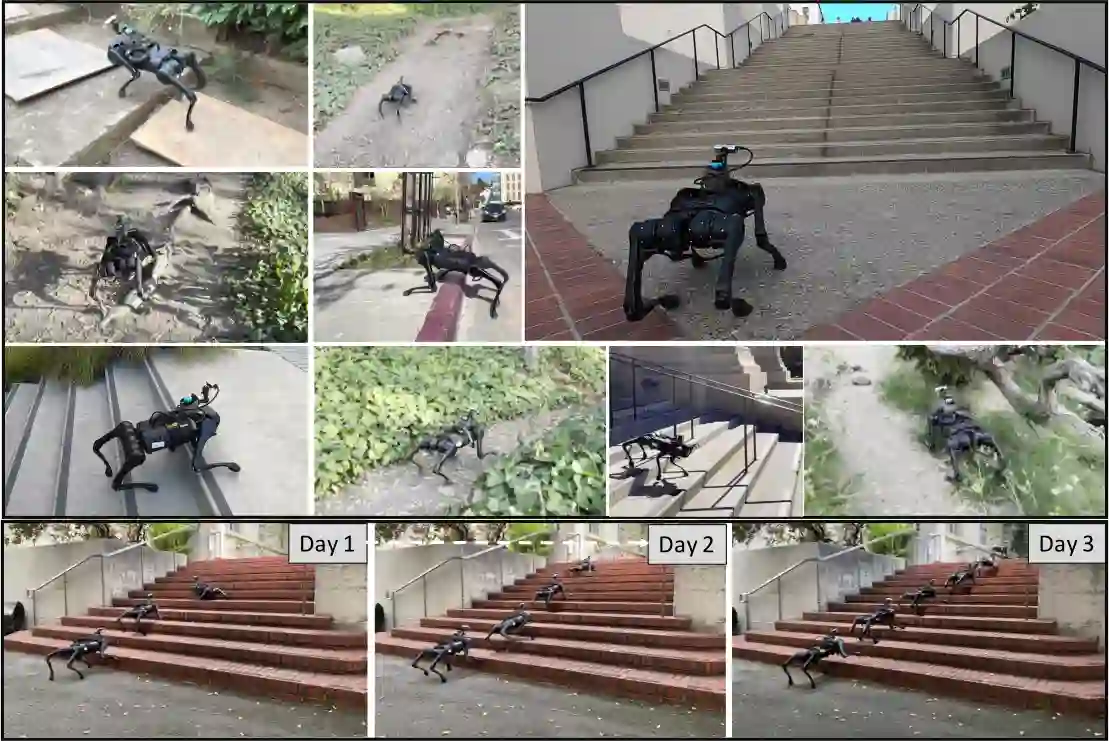

In this work, we show how to learn a visual walking policy that uses only a monocular RGB camera and proprioception. Since realistic RGB images are hard to simulate, we necessarily have to learn vision in the real world. We start with a blind walking policy trained in simulation. This policy can traverse some terrains in the real world but often struggles because it lacks knowledge of the upcoming terrain geometry. Vision can resolve this. We train a visual module in the real world to predict the upcoming terrain with our proposed algorithm, Cross-Modal Supervision (CMS). CMS uses time-shifted proprioception to supervise vision and allows the policy to continually improve with more real-world experience. We evaluate our vision-based walking policy on a diverse set of terrains, including stairs (up to 19 cm high), slippery slopes (35-degree inclination), curbs and tall steps (up to 20 cm), and complex discrete terrains. We achieve this performance with less than 30 minutes of real-world data. Finally, we show that our policy can adapt to shifts in the visual field with a limited amount of real-world experience. Video results and code are available at https://antonilo.github.io/vision_locomotion/.
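
The central idea of CMS, using time-shifted proprioception as the label for vision, can be illustrated as a dataset-construction step. What follows is a minimal sketch under stated assumptions, not the authors' implementation. It assumes that the blind policy exposes a per-step terrain estimate (a latent vector) inferred from proprioception, that the camera frame at step `t` shows terrain the robot reaches a fixed `shift` steps later, and that the visual module is trained by regression. The names `make_cms_pairs`, `mse`, and `shift` are hypothetical.

```python
from typing import List, Sequence, Tuple


def make_cms_pairs(
    images: Sequence[object],
    latents: Sequence[Sequence[float]],
    shift: int,
) -> List[Tuple[object, List[float]]]:
    """Pair each frame at step t with the proprioceptive latent at step t + shift.

    Assumption (hypothetical, not from the paper): `latents[t]` is the terrain
    estimate the blind policy infers from proprioception at step t, and the
    terrain seen in `images[t]` is walked over `shift` steps later.
    """
    if shift < 0:
        raise ValueError("shift must be non-negative")
    if len(images) != len(latents):
        raise ValueError("images and latents must cover the same time steps")
    # The last `shift` frames have no future label yet, so they are dropped.
    return [(images[t], list(latents[t + shift])) for t in range(len(images) - shift)]


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between the visual prediction and the shifted label."""
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(pred)


if __name__ == "__main__":
    frames = ["f0", "f1", "f2", "f3", "f4"]
    proprio_latents = [[0.0], [0.1], [0.2], [0.3], [0.4]]
    for frame, label in make_cms_pairs(frames, proprio_latents, shift=2):
        print(frame, label)
```

Because the labels come from the robot's own proprioception, every new real-world trajectory yields fresh (frame, label) pairs without manual annotation. This is consistent with the abstract's claim that the policy can keep improving with more real-world experience.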
