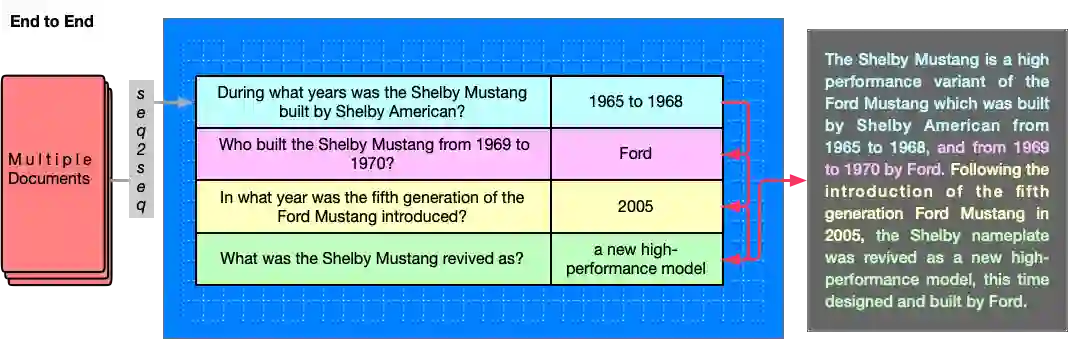

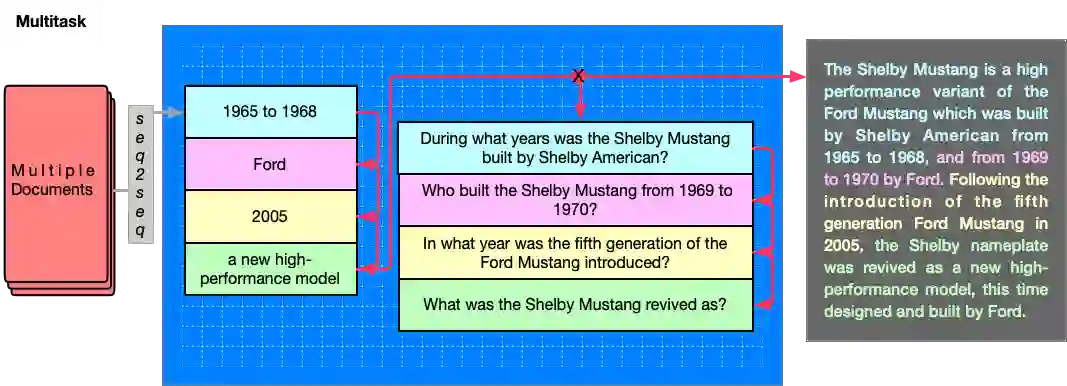

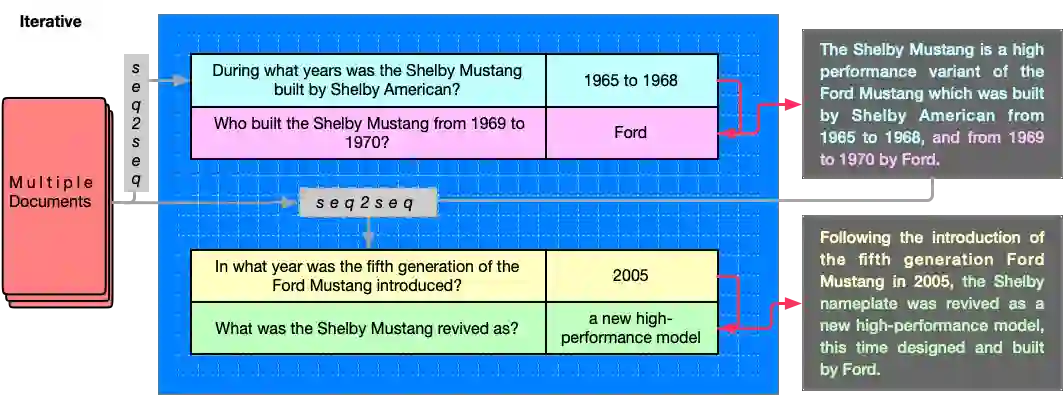

The ability to convey relevant and faithful information is critical for many tasks in conditional generation, yet it remains elusive for neural seq-to-seq models, whose outputs often exhibit hallucinations and fail to correctly cover important details. In this work, we advocate planning as a useful intermediate representation for making conditional generation less opaque and more grounded. We propose a new conceptualization of text plans as a sequence of question-answer (QA) pairs. We enhance existing datasets (e.g., for summarization) with a QA blueprint that serves as a proxy for both content selection (i.e., what to say) and planning (i.e., in what order). We obtain blueprints automatically by exploiting state-of-the-art question generation technology, converting input-output pairs into input-blueprint-output tuples. We develop Transformer-based models that differ in how they incorporate the blueprint into the generated output (e.g., as a global plan or iteratively). Evaluation across metrics and datasets demonstrates that blueprint models are more factual than alternatives that do not resort to planning, and that they allow tighter control of the generated output.
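
The abstract does not specify how a blueprint is serialized, so the following is only a minimal sketch under stated assumptions. It shows one way to represent a blueprint as an ordered list of QA pairs, turn an input-output pair into an input-blueprint-output tuple, and build decoder targets for the two variants the abstract mentions (a global plan versus an iterative plan). The markers `Q:`, `A:`, ` ; ` and `[SUMMARY]`, as well as all function names, are hypothetical. The question generation step is stood in for by a caller-supplied function rather than a real QG model.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class QAPair:
    """One question-answer pair in a blueprint (hypothetical structure)."""
    question: str
    answer: str


# Hypothetical separators; the real markup used by blueprint models may differ.
QA_SEP = " ; "
PLAN_END = " [SUMMARY] "


def format_blueprint(blueprint: List[QAPair]) -> str:
    """Serialize the blueprint; list order encodes the plan (what to say, in what order)."""
    return QA_SEP.join(f"Q: {qa.question} A: {qa.answer}" for qa in blueprint)


def to_blueprint_tuple(
    source: str,
    output: str,
    generate_blueprint: Callable[[str], List[QAPair]],
) -> Tuple[str, List[QAPair], str]:
    """Turn an input-output pair into an input-blueprint-output tuple.

    `generate_blueprint` is a hypothetical stand-in for an automatic
    question generation system applied to the reference output.
    """
    return source, generate_blueprint(output), output


def to_global_plan_target(blueprint: List[QAPair], output: str) -> str:
    """Global-plan variant: the whole blueprint is emitted before the output text."""
    return format_blueprint(blueprint) + PLAN_END + output


def to_iterative_target(steps: List[Tuple[List[QAPair], str]]) -> str:
    """Iterative variant: each output sentence is preceded by its own blueprint chunk."""
    return " ".join(format_blueprint(qas) + PLAN_END + sentence for qas, sentence in steps)


if __name__ == "__main__":
    bp = [QAPair("Who won the match?", "Spain"), QAPair("What was the score?", "2-1")]
    print(to_global_plan_target(bp, "Spain won 2-1."))
    print(to_iterative_target([([bp[0]], "Spain won."), ([bp[1]], "The score was 2-1.")]))
```

Under these assumptions the global-plan target reads `Q: Who won the match? A: Spain ; Q: What was the score? A: 2-1 [SUMMARY] Spain won 2-1.`, so a model trained on it must commit to the full plan before writing. The iterative target interleaves plan and text, which would let a model revise its plan sentence by sentence.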
