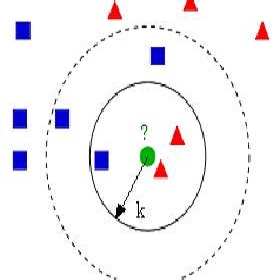

Retrieval-augmented methods have shown promising results in various classification tasks. However, existing methods focus on retrieving extra context to enrich the input, an approach that is sensitive to noise and not expandable. In this paper, following this line of work, we propose a $k$-nearest-neighbor (KNN)-based method for retrieval-augmented classification, which interpolates the predicted label distribution with the label distributions of retrieved instances. Unlike the standard KNN process, our method uses a decoupling mechanism, because we find that sharing one representation between classification and retrieval hurts performance and leads to training instability. We evaluate our method on a wide range of classification datasets. Experimental results demonstrate the effectiveness and robustness of the proposed method. We also conduct additional experiments to analyze the contributions of the different components of our model.\footnote{\url{https://github.com/xnliang98/knn-cls-w-decoupling}}
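The abstract describes the method only in words, so the following is a minimal sketch under stated assumptions, not the authors' implementation (see their repository for that). It assumes the common KNN interpolation $p(y \mid x) = \lambda\, p_{\mathrm{kNN}}(y \mid x) + (1-\lambda)\, p_{\mathrm{clf}}(y \mid x)$, where neighbor weights are a softmax over negative squared Euclidean distances scaled by a temperature $T$. It also models "decoupling" simply as two separate linear heads on a shared hidden vector: one produces class logits and the other produces retrieval keys. The class `DecoupledKNNClassifier` and the parameters `clf_head`, `retrieval_head`, `k`, `temperature`, and `lam` are hypothetical names chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def matvec(W: Sequence[Sequence[float]], x: Sequence[float]) -> Vector:
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def softmax(z: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance (assumed retrieval metric)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class DecoupledKNNClassifier:
    """Hypothetical sketch of KNN-interpolated classification.

    Assumption: decoupling = one shared hidden vector h feeding two separate
    heads, so retrieval keys never reuse the classification representation.
    """

    def __init__(self, clf_head, retrieval_head, k=4, temperature=1.0, lam=0.5):
        self.clf_head = clf_head              # maps h -> class logits
        self.retrieval_head = retrieval_head  # maps h -> retrieval key
        self.k = k
        self.temperature = temperature
        self.lam = lam                        # weight on the KNN distribution
        self.datastore: List[Tuple[Vector, Vector]] = []  # (key, label distribution)

    def add(self, h: Sequence[float], label_dist: Sequence[float]) -> None:
        """Index a training instance under its retrieval key."""
        self.datastore.append((matvec(self.retrieval_head, h), list(label_dist)))

    def knn_distribution(self, h: Sequence[float]) -> Vector:
        """Distance-weighted average of the k nearest label distributions."""
        query = matvec(self.retrieval_head, h)
        neighbors = sorted(self.datastore, key=lambda kv: sq_dist(query, kv[0]))[: self.k]
        weights = softmax([-sq_dist(query, key) / self.temperature for key, _ in neighbors])
        p = [0.0] * len(neighbors[0][1])
        for w, (_, dist) in zip(weights, neighbors):
            for c, prob in enumerate(dist):
                p[c] += w * prob
        return p

    def predict(self, h: Sequence[float]) -> Vector:
        """Interpolate classifier and KNN label distributions."""
        p_clf = softmax(matvec(self.clf_head, h))
        if not self.datastore:  # nothing retrieved: fall back to the classifier
            return p_clf
        p_knn = self.knn_distribution(h)
        return [self.lam * a + (1 - self.lam) * b for a, b in zip(p_knn, p_clf)]


if __name__ == "__main__":
    # Toy setup: hidden size 2, two classes, one-hot stored label distributions.
    model = DecoupledKNNClassifier(
        clf_head=[[1.0, 0.0], [0.0, 1.0]],
        retrieval_head=[[0.5, 0.5], [0.5, -0.5]],
        k=2, temperature=1.0, lam=0.5,
    )
    model.add([1.0, 0.0], [1.0, 0.0])
    model.add([0.0, 1.0], [0.0, 1.0])
    print(model.predict([0.9, 0.1]))
```

In this toy run, the query lies closer to the first stored instance in retrieval space, so both the classifier and the retrieved neighbors favor class 0. The output remains a valid probability distribution because it is a convex combination of two distributions. Keeping `retrieval_head` separate from `clf_head` is what this sketch uses to stand in for decoupling. The paper's actual mechanism may differ.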

Title (translated): A Decoupled Representation Method for Retrieval-Augmented Classification
