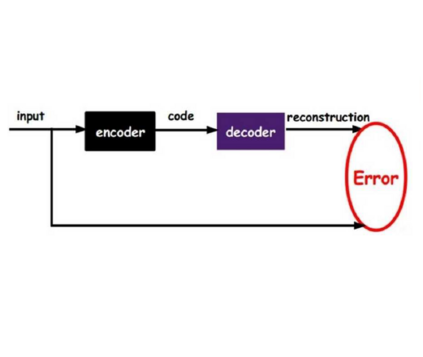

Deep learning models are known to be vulnerable to adversarial examples, which are elaborately designed for malicious purposes and are imperceptible to the human perceptual system. Autoencoders trained solely on benign examples have been widely used for (self-supervised) adversarial detection, based on the assumption that adversarial examples yield larger reconstruction errors. However, because adversarial examples are absent from the autoencoder's training data and the autoencoder generalizes too strongly, this assumption does not always hold in practice. To alleviate this problem, we explore how to detect adversarial examples with disentangled label/semantic features under the autoencoder structure. Specifically, we propose Disentangled Representation-based Reconstruction (DRR). In DRR, we train an autoencoder over both correctly paired and incorrectly paired label/semantic features to reconstruct benign examples and counterexamples, respectively. This mimics the behavior of adversarial examples and can reduce the autoencoder's unnecessary generalization ability. We compare our method with state-of-the-art self-supervised detection methods under different adversarial attacks and different victim models, and it achieves better performance on various metrics (area under the ROC curve, true positive rate, and true negative rate) for most attack settings. Although DRR was initially designed for visual tasks only, we demonstrate that it can easily be extended to natural language tasks as well. Notably, unlike other autoencoder-based detectors, our method can provide resistance to adaptive adversaries.
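The two ingredients described above, training on correctly and incorrectly paired label/semantic features and flagging inputs by reconstruction error, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the encoder, decoder, and victim-model callables are hypothetical placeholders; drawing the wrong label uniformly from the other classes is an assumption; and the detection step assumes the label feature at test time comes from the victim model's prediction, so an adversarial input produces a mismatched pair. The abstract does not say how the counterexample targets for incorrect pairs are built, so the sketch only tags each pair and leaves the reconstruction targets and the autoencoder training loop to the caller. The threshold is chosen from benign reconstruction errors to reach a target true negative rate, which is one common way to operate a reconstruction-error detector.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def make_training_pairs(
    semantic_feats: Sequence[Vector],
    labels: Sequence[int],
    num_classes: int,
    rng: random.Random,
) -> List[Tuple[Vector, int, bool]]:
    """Return (semantic feature, label, is_correct_pair) triples.

    Every benign sample yields one correctly paired triple (its true label)
    and one incorrectly paired triple whose label is drawn uniformly from
    the other classes. Uniform sampling is an assumption of this sketch.
    """
    if num_classes < 2:
        raise ValueError("need at least two classes to form incorrect pairs")
    pairs: List[Tuple[Vector, int, bool]] = []
    for z, y in zip(semantic_feats, labels):
        pairs.append((list(z), y, True))
        wrong = rng.choice([c for c in range(num_classes) if c != y])
        pairs.append((list(z), wrong, False))
    return pairs


def squared_error(x: Vector, x_hat: Vector) -> float:
    """Sum of squared differences, used here as the reconstruction error."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def fit_threshold(benign_errors: Sequence[float], tnr: float = 0.95) -> float:
    """Smallest benign error at or below which at least `tnr` of benign samples fall."""
    if not benign_errors or not 0.0 < tnr <= 1.0:
        raise ValueError("need benign errors and 0 < tnr <= 1")
    ordered = sorted(benign_errors)
    k = max(0, math.ceil(tnr * len(ordered)) - 1)
    return ordered[k]


def detect(
    x: Vector,
    encode_semantic: Callable[[Vector], Vector],  # hypothetical semantic encoder
    predict_label: Callable[[Vector], int],  # victim model's predicted class
    decode: Callable[[Vector, int], Vector],  # hypothetical label-conditioned decoder
    threshold: float,
) -> bool:
    """Flag x as adversarial when reconstructing it from its semantic feature
    and the victim's predicted label gives an error above `threshold`."""
    x_hat = decode(encode_semantic(x), predict_label(x))
    return squared_error(x, x_hat) > threshold
```

For example, `fit_threshold(benign_errors, tnr=0.95)` picks a cutoff that leaves at least 95% of benign validation inputs unflagged, and `detect` then compares each test input's reconstruction error against that cutoff. Keeping the networks behind plain callables lets the same detection logic wrap any trained encoder/decoder pair.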
