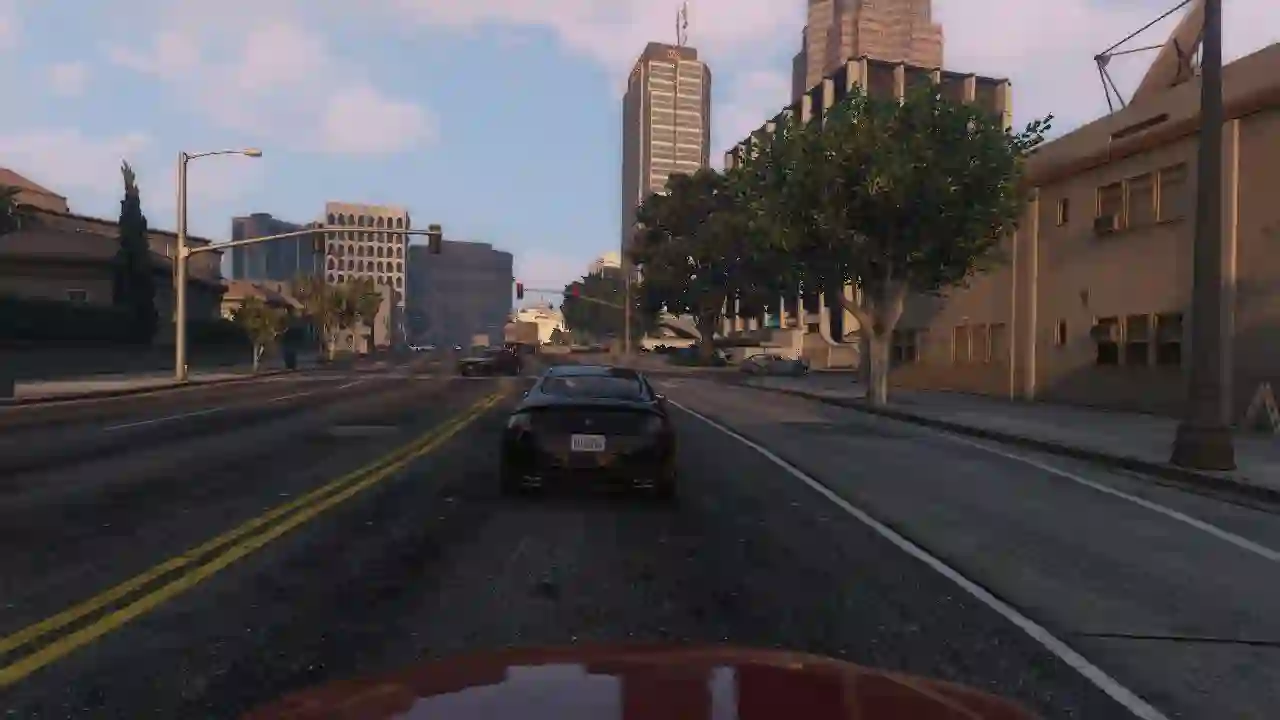

Convolutions on monocular dash cam videos capture spatial invariances in the image plane but do not explicitly reason about distances and depth. We propose a simple transformation of observations into a bird's eye view, also known as plan view, for end-to-end control. We detect vehicles and pedestrians in the first person view and project them into an overhead plan view. This representation provides an abstraction of the environment from which a deep network can easily deduce the positions and directions of entities. Additionally, the plan view enables us to leverage advances in 3D object detection in conjunction with deep policy learning. We evaluate our monocular plan view network on the photo-realistic Grand Theft Auto V simulator. A network using both a plan view and front view causes less than half as many collisions as previous detection-based methods and an order of magnitude fewer collisions than pure pixel-based policies.

翻译:单眼破碎摄像头摄像带的演进过程在图像平面上捕捉了空间差异,但没有明确解释距离和深度。 我们提议将观测结果简单转换成鸟类的眼睛视图,也称为计划视图,用于端到端控制。 我们检测第一个人看到的车辆和行人,并将他们投射到一个高空计划视图中。 这个表达方式提供了环境的抽象化,深层网络可以很容易地从中推断出实体的位置和方向。 此外, 该计划视图使我们能够在深度政策学习的同时利用三维对象探测的进展。 我们评估了我们光真真人大鼠自动模拟器的单眼视图网络。 一个使用计划视图和前视的网络造成的碰撞比以往的探测方法少一半,碰撞的幅度比纯像素政策少一倍。