ICML'21 | Five Selected Papers on Graph Neural Networks

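The International Conference on Machine Learning (ICML) is an annual top-tier machine learning conference organized by the International Machine Learning Society (IMLS). It is rated CCF-A, and its paper acceptance rate in 2021 was 21.48%. Today we share five ICML 2021 papers on the oversmoothing problem in graph neural networks and on deep graph neural networks.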

1 Background

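Training deep graph neural networks is difficult and demands substantial computation and memory. Besides being prone to vanishing gradients and overfitting, deep GNNs also suffer from oversmoothing: as the number of layers (i.e. rounds of neighborhood aggregation) grows, the hidden representations of the nodes tend to converge to the same vector and gradually become indistinguishable, which causes performance to drop sharply. (Note: oversmoothing is not exclusive to deep GNNs; ordinary shallow GNNs can exhibit it as well.)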
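To make the oversmoothing effect concrete, here is a minimal NumPy sketch that is not taken from any of the papers below. It assumes a weight-free propagation step H ← D⁻¹(A + I)H (mean aggregation over each node and its neighbors) on a small hypothetical 5-node graph with random features. As more propagation steps are stacked, every node's representation approaches the same vector, so each row's distance from the mean row shrinks toward zero.

```python
import numpy as np


def propagation_matrix(adj: np.ndarray) -> np.ndarray:
    """Row-normalised adjacency with self-loops: P = D^{-1}(A + I)."""
    a_hat = adj + np.eye(adj.shape[0])
    return a_hat / a_hat.sum(axis=1, keepdims=True)


def row_spread(h: np.ndarray) -> float:
    """Largest Euclidean distance between any node representation and the mean one."""
    return float(np.max(np.linalg.norm(h - h.mean(axis=0), axis=1)))


# Hypothetical 5-node graph: path 0-1-2-3 plus node 4 attached to both 1 and 2.
edges = [(0, 1), (1, 2), (2, 3), (1, 4), (2, 4)]
n = 5
adj = np.zeros((n, n))
for i, j in edges:
    adj[i, j] = adj[j, i] = 1.0

rng = np.random.default_rng(0)
x = rng.normal(size=(n, 3))  # random initial node features
p = propagation_matrix(adj)

for layers in (0, 1, 2, 4, 8, 16, 32):
    h = np.linalg.matrix_power(p, layers) @ x  # `layers` weight-free propagation steps
    print(f"{layers:2d} layers: max distance from mean = {row_spread(h):.2e}")
```

The rate of collapse depends on the graph's spectrum (here, the second-largest eigenvalue magnitude of the propagation matrix); real GNN layers add learned weights and nonlinearities on top of this propagation.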

2 Oversmoothing

1 GRAND: Graph Neural Diffusion

Authors: Benjamin P. Chamberlain, James Rowbottom, Maria Gorinova, Stefan Webb, Emanuele Rossi and Michael M. Bronstein

Affiliations: Twitter et al.

Paper: http://proceedings.mlr.press/v139/chamberlain21a.html

Highlight: We present Graph Neural Diffusion (GRAND) that approaches deep learning on graphs as a continuous diffusion process and treats Graph Neural Networks (GNNs) as discretisations of an underlying PDE.
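GRAND starts from a diffusion equation of the form dX/dt = (A(X) - I)X, where A(X) is a row-stochastic attention matrix over the graph, and reads a GNN layer as one step of a numerical solver. The sketch below assumes the simplest case and is not the authors' implementation: A is fixed to the row-normalized adjacency with self-loops instead of GRAND's learned attention, and the solver is forward (explicit) Euler. With step size tau = 1, one Euler step is exactly X ← AX, a weight-free GCN-style layer; smaller steps approach the exact solution exp(t(A - I))X(0), which is computed here via the symmetric normalized matrix for comparison. The graph and features are hypothetical.

```python
import numpy as np


def random_walk_matrix(adj):
    """Row-stochastic A = D^{-1}(A_adj + I); a fixed stand-in for GRAND's learned attention."""
    a_hat = adj + np.eye(adj.shape[0])
    return a_hat / a_hat.sum(axis=1, keepdims=True)


def explicit_euler(x0, a, tau, t_end):
    """Forward Euler for dX/dt = (A - I) X; each step X <- X + tau (A - I) X acts as one layer."""
    n_steps = int(round(t_end / tau))
    generator = a - np.eye(a.shape[0])
    x = x0.copy()
    for _ in range(n_steps):
        x = x + tau * (generator @ x)
    return x


def exact_solution(x0, adj, t):
    """exp(t (A - I)) X0, computed via the symmetric S = D^{-1/2}(A_adj + I)D^{-1/2}."""
    a_hat = adj + np.eye(adj.shape[0])
    d = a_hat.sum(axis=1)
    s = a_hat / np.sqrt(np.outer(d, d))
    w, v = np.linalg.eigh(s)
    exp_s = v @ np.diag(np.exp(t * (w - 1.0))) @ v.T  # exp(t (S - I))
    # A - I = D^{-1/2} (S - I) D^{1/2}, hence exp(t (A - I)) = D^{-1/2} exp(t (S - I)) D^{1/2}
    sqrt_d = np.sqrt(d)
    return (exp_s * sqrt_d[None, :] / sqrt_d[:, None]) @ x0


# Hypothetical 5-node graph and random features.
edges = [(0, 1), (1, 2), (2, 3), (1, 4), (2, 4)]
adj = np.zeros((5, 5))
for i, j in edges:
    adj[i, j] = adj[j, i] = 1.0
a = random_walk_matrix(adj)
x0 = np.random.default_rng(0).normal(size=(5, 3))

# tau = 1: a single Euler step coincides with the propagation X <- A X.
print(np.allclose(explicit_euler(x0, a, tau=1.0, t_end=1.0), a @ x0))
for tau in (1.0, 0.5, 0.1, 0.01):
    err = np.max(np.abs(explicit_euler(x0, a, tau, 2.0) - exact_solution(x0, adj, 2.0)))
    print(f"tau={tau:<5} max error at t=2: {err:.2e}")
```

Viewed this way, network depth corresponds to integration time divided by step size, which is why the choice of solver (explicit, implicit, adaptive) becomes an architectural decision.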

2 Directional Graph Networks

Authors: Dominique Beaini, Saro Passaro, Vincent Letourneau, William L. Hamilton, Gabriele Corso and Pietro Lio

Affiliations: InVivo AI & University of Cambridge, et al.

Paper: http://proceedings.mlr.press/v139/beani21a.html

Code: https://github.com/Saro00/DGN

Highlight: To overcome the limitation of oversmoothing, we propose the first globally consistent anisotropic kernels for GNNs, allowing for graph convolutions that are defined according to topologically-derived directional flows.
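DGN derives its directions from the graph's Laplacian eigenvectors: roughly, the gradient of an eigenvector phi along each edge defines a vector field, and aggregation matrices built from that field replace isotropic mean or sum aggregation. The following is a simplified sketch of that idea under our own assumptions, not the authors' code at the link above: phi is taken as the Fiedler vector of the combinatorial Laplacian L = D - A, the field F_ij = phi_j - phi_i is L1-normalized per row, and two aggregators are formed, a directional average |F̂|X and a directional derivative whose row i is sum_j F̂_ij (x_j - x_i). Note that phi is only defined up to sign (and is not unique if the second eigenvalue is repeated), so the direction of the derivative can flip.

```python
import numpy as np


def fiedler_vector(adj: np.ndarray) -> np.ndarray:
    """Eigenvector of L = D - A for the second-smallest eigenvalue (defined up to sign)."""
    lap = np.diag(adj.sum(axis=1)) - adj
    _, vecs = np.linalg.eigh(lap)  # eigh returns eigenvalues in ascending order
    return vecs[:, 1]


def directional_field(adj: np.ndarray, phi: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """F_ij = phi_j - phi_i on each edge (i, j), with every row L1-normalised."""
    f = adj * (phi[None, :] - phi[:, None])
    return f / (np.abs(f).sum(axis=1, keepdims=True) + eps)


def directional_aggregate(adj: np.ndarray, x: np.ndarray):
    """Return (directional average, directional derivative) of node features x."""
    f_hat = directional_field(adj, fiedler_vector(adj))
    b_av = np.abs(f_hat)                       # weighted average along the field
    b_dx = f_hat - np.diag(f_hat.sum(axis=1))  # row i: sum_j F_ij (x_j - x_i)
    return b_av @ x, b_dx @ x


# Usage on a hypothetical 5-node path graph 0-1-2-3-4.
n = 5
path = np.zeros((n, n))
for i in range(n - 1):
    path[i, i + 1] = path[i + 1, i] = 1.0

x = np.arange(n, dtype=float)[:, None]  # a 1-D signal that increases along the path
avg, deriv = directional_aggregate(path, x)
print(avg.ravel())
print(deriv.ravel())
```

On the path graph the Fiedler vector is monotone, so the field points the same way at every node and the derivative of the linear signal has the same magnitude and sign everywhere; an isotropic mean aggregator cannot express this kind of oriented difference.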

3 Improving Breadth-Wise Backpropagation in Graph Neural Networks Helps Learning Long-Range Dependencies

Authors: Denis Lukovnikov and Asja Fischer

Affiliation: Ruhr University Bochum

Paper: http://proceedings.mlr.press/v139/lukovnikov21a.html

Highlight: In this work, we focus on the ability of graph neural networks (GNNs) to learn long-range patterns in graphs with edge features.

3 Depth (Deep Graph Neural Networks)

4 Optimization of Graph Neural Networks: Implicit Acceleration by Skip Connections and More Depth

Authors: Keyulu Xu, Mozhi Zhang, Stefanie Jegelka and Kenji Kawaguchi

Affiliations: MIT & University of Maryland & Harvard

Paper: http://proceedings.mlr.press/v139/xu21k.html

Highlight: We take the first step towards analyzing GNN training by studying the gradient dynamics of GNNs.

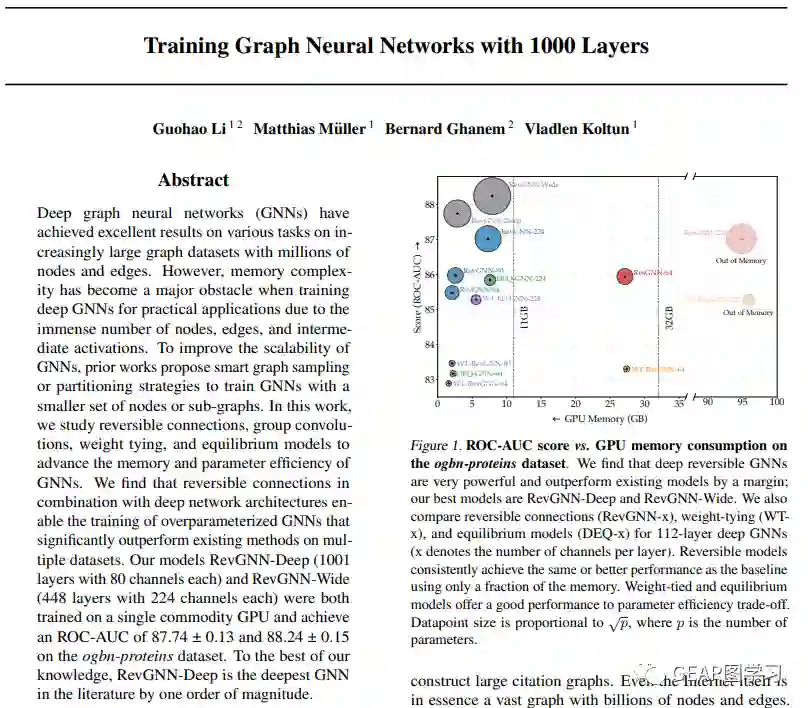

5 Training Graph Neural Networks with 1000 Layers

Authors: Guohao Li, Matthias Müller, Bernard Ghanem and Vladlen Koltun

Affiliations: Intel Labs & King Abdullah University of Science and Technology (KAUST), et al.

Paper: http://proceedings.mlr.press/v139/li21o.html

Highlight: In this work, we study reversible connections, group convolutions, weight tying, and equilibrium models to advance the memory and parameter efficiency of GNNs.
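The memory saving from reversible connections comes from the fact that a reversible block's inputs can be recomputed from its outputs, so intermediate activations need not be stored for backpropagation. The sketch below shows only that invertibility, using the classic two-group reversible residual coupling (as in RevNet); the paper builds on a grouped generalization of this idea, which is not reproduced here. The message-passing function `gcn_like`, the ring graph, and all sizes are hypothetical choices for illustration, and no gradients are computed.

```python
import numpy as np


def gcn_like(h: np.ndarray, a_norm: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Hypothetical message-passing function f(H) = ReLU(A_norm H W); it need not be invertible."""
    return np.maximum(a_norm @ h @ w, 0.0)


def rev_forward(x1, x2, a_norm, w1, w2):
    """Two-group reversible residual block: Y1 = X1 + f1(X2), Y2 = X2 + f2(Y1)."""
    y1 = x1 + gcn_like(x2, a_norm, w1)
    y2 = x2 + gcn_like(y1, a_norm, w2)
    return y1, y2


def rev_inverse(y1, y2, a_norm, w1, w2):
    """Recover the block inputs from its outputs: X2 = Y2 - f2(Y1), X1 = Y1 - f1(X2)."""
    x2 = y2 - gcn_like(y1, a_norm, w2)
    x1 = y1 - gcn_like(x2, a_norm, w1)
    return x1, x2


rng = np.random.default_rng(0)
n, d, n_layers = 6, 4, 10  # hypothetical sizes: nodes, channels per group, depth

# Ring graph with self-loops, row-normalised.
adj = np.eye(n)
for i in range(n):
    adj[i, (i + 1) % n] = adj[(i + 1) % n, i] = 1.0
a_norm = adj / adj.sum(axis=1, keepdims=True)

weights = [(rng.normal(scale=0.1, size=(d, d)), rng.normal(scale=0.1, size=(d, d)))
           for _ in range(n_layers)]
x1, x2 = rng.normal(size=(n, d)), rng.normal(size=(n, d))

# Forward pass: only the final outputs are kept.
y1, y2 = x1, x2
for w1, w2 in weights:
    y1, y2 = rev_forward(y1, y2, a_norm, w1, w2)

# Reconstruct the original inputs by inverting the blocks in reverse order.
r1, r2 = y1, y2
for w1, w2 in reversed(weights):
    r1, r2 = rev_inverse(r1, r2, a_norm, w1, w2)

print("max reconstruction error:", max(np.abs(r1 - x1).max(), np.abs(r2 - x2).max()))
```

Because `rev_inverse` needs only the block outputs and the weights, a training loop can discard intermediate activations and rebuild them during the backward pass, trading extra computation for activation memory that roughly stays constant as depth grows.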