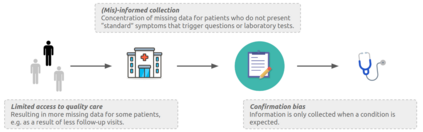

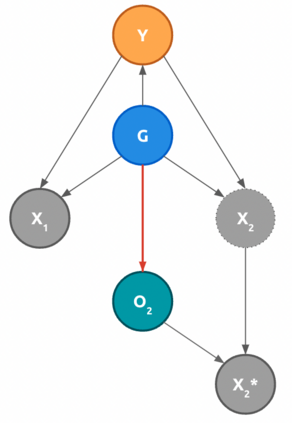

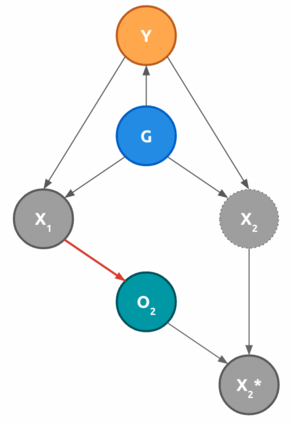

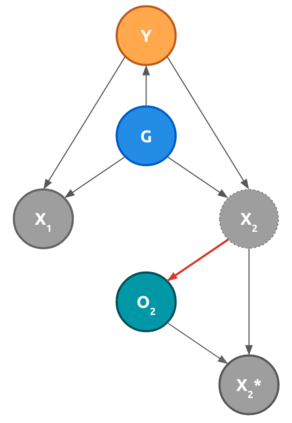

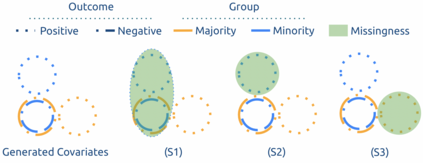

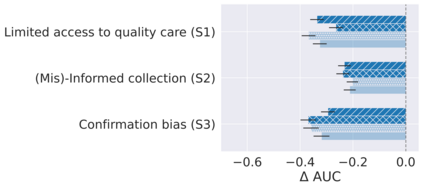

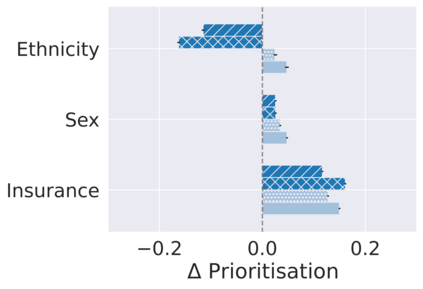

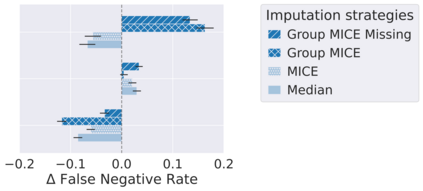

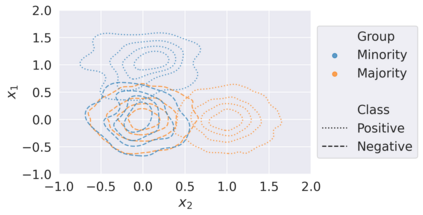

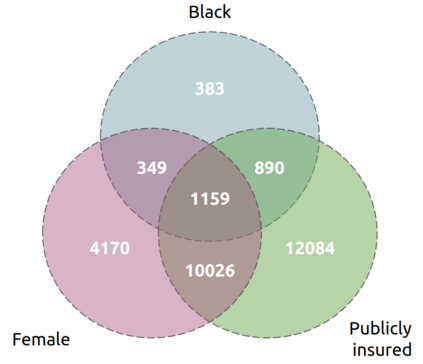

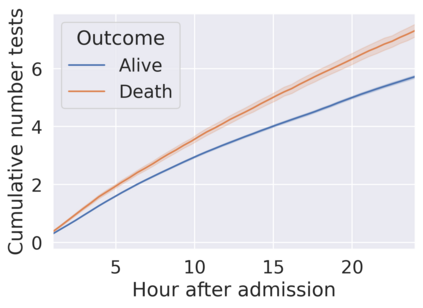

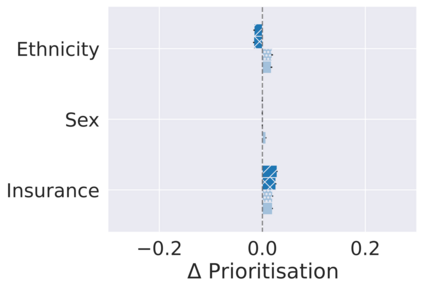

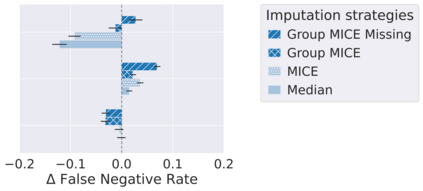

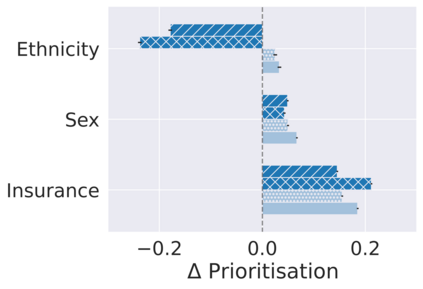

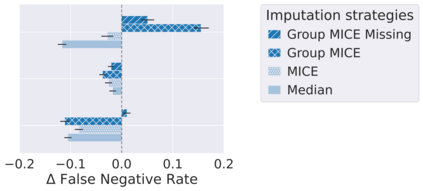

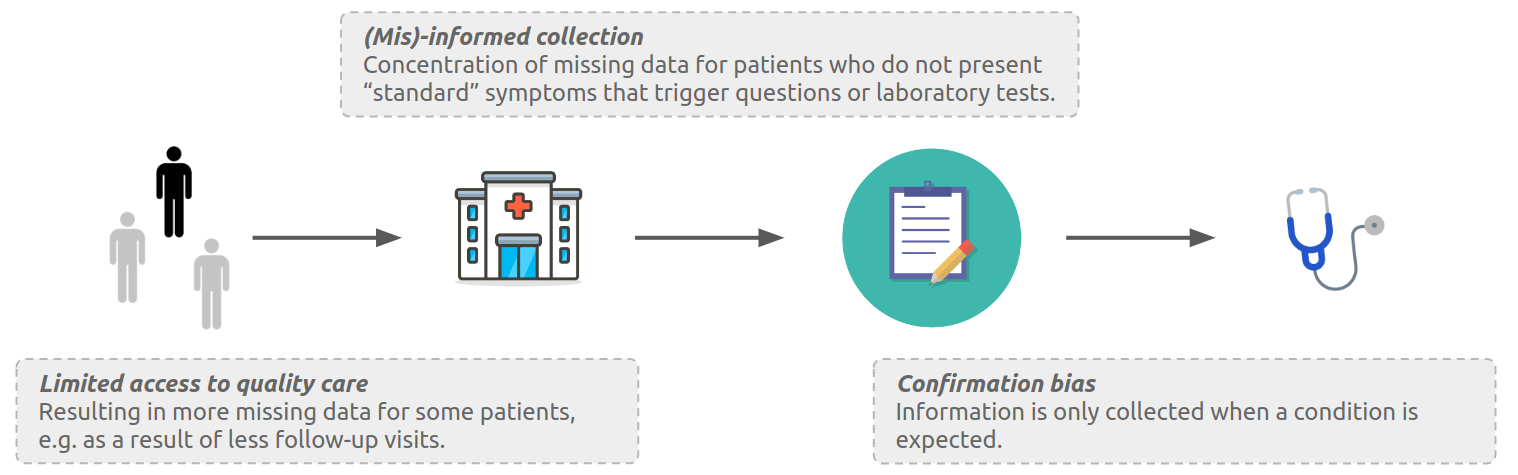

Biases have marked the history of medicine, leading to unequal care for marginalised groups. The patterns of missingness in observational data often reflect these group discrepancies, but the algorithmic fairness implications of group-specific missingness are not well understood. Despite its potential impact, imputation is too often an overlooked preprocessing step. When it is explicitly considered, attention is placed on overall performance, ignoring how this preprocessing can reinforce group-specific inequities. Our work questions this choice by studying how imputation affects downstream algorithmic fairness. First, we provide a structured view of the relationship between clinical presence mechanisms and group-specific missingness patterns. Then, through simulations and real-world experiments, we demonstrate that the choice of imputation influences performance for marginalised groups and that no imputation strategy consistently reduces disparities. Importantly, our results show that current practices may endanger health equity, because imputation strategies that perform similarly at the population level can affect marginalised groups differently. Finally, we propose recommendations for mitigating inequities that may stem from this neglected step of the machine learning pipeline.
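The claim that population-level metrics can hide group-specific harm can be illustrated with a toy simulation. The sketch below is not the authors' experimental setup: the single feature, the group means (0 and 2), the missingness rates (10% vs 50%), and every function name are hypothetical choices made for illustration. It also measures imputation reconstruction error on the missing entries rather than the downstream model fairness studied in the paper. Group-mean imputation appears only as a contrast, not as a recommendation, since the abstract reports that no strategy consistently reduces disparities.

```python
import math
import random
import statistics

MAJORITY, MARGINALISED = 0, 1

def simulate(n=10_000, frac_marginalised=0.2, seed=0):
    """Hypothetical data: one feature whose mean differs by group, with a
    higher (assumed) missingness rate in the marginalised group."""
    rng = random.Random(seed)
    rows = []  # each row: (group, true value, observed value or None)
    for _ in range(n):
        g = MARGINALISED if rng.random() < frac_marginalised else MAJORITY
        x = rng.gauss(2.0 if g == MARGINALISED else 0.0, 1.0)
        p_missing = 0.5 if g == MARGINALISED else 0.1  # group-specific missingness
        observed = None if rng.random() < p_missing else x
        rows.append((g, x, observed))
    return rows

def impute_population_mean(rows):
    """Fill every missing value with the mean of all observed values."""
    fill = statistics.fmean(o for _, _, o in rows if o is not None)
    return [o if o is not None else fill for _, _, o in rows]

def impute_group_mean(rows):
    """Fill missing values with the observed mean of the row's own group."""
    fills = {
        g: statistics.fmean(o for gg, _, o in rows if gg == g and o is not None)
        for g in (MAJORITY, MARGINALISED)
    }
    return [o if o is not None else fills[g] for g, _, o in rows]

def rmse_on_missing(rows, imputed, groups):
    """RMSE between true and imputed values, over missing entries in `groups`."""
    errs = [
        (x - xi) ** 2
        for (g, x, o), xi in zip(rows, imputed)
        if o is None and g in groups
    ]
    return math.sqrt(sum(errs) / len(errs))

def report(rows):
    """Overall vs per-group imputation error for each strategy."""
    results = {}
    for name, impute in (
        ("population_mean", impute_population_mean),
        ("group_mean", impute_group_mean),
    ):
        imputed = impute(rows)
        results[name] = {
            "overall": rmse_on_missing(rows, imputed, {MAJORITY, MARGINALISED}),
            "majority": rmse_on_missing(rows, imputed, {MAJORITY}),
            "marginalised": rmse_on_missing(rows, imputed, {MARGINALISED}),
        }
    return results

if __name__ == "__main__":
    for strategy, scores in report(simulate()).items():
        print(strategy, {k: round(v, 2) for k, v in scores.items()})
```

Because the observed data are dominated by the majority group, the population mean sits close to the majority's distribution. The per-group breakdown then shows the marginalised group absorbing most of the error, which a single overall figure only partly reveals.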