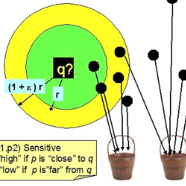

Learning from set-structured data is an essential problem with many applications in machine learning and computer vision. This paper focuses on non-parametric and data-independent learning from set-structured data using approximate nearest neighbor (ANN) solutions, particularly locality-sensitive hashing. We consider the problem of set retrieval from an input set query. Such retrieval problem requires: 1) an efficient mechanism to calculate the distances/dissimilarities between sets, and 2) an appropriate data structure for fast nearest neighbor search. To that end, we propose Sliced-Wasserstein set embedding as a computationally efficient "set-2-vector" mechanism that enables downstream ANN, with theoretical guarantees. The set elements are treated as samples from an unknown underlying distribution, and the Sliced-Wasserstein distance is used to compare sets. We demonstrate the effectiveness of our algorithm, denoted as Set-LOcality Sensitive Hashing (SLOSH), on various set retrieval datasets and compare our proposed embedding with standard set embedding approaches, including Generalized Mean (GeM) embedding/pooling, Featurewise Sort Pooling (FSPool), and Covariance Pooling and show consistent improvement in retrieval results. The code for replicating our results is available here: \href{https://github.com/mint-vu/SLOSH}{https://github.com/mint-vu/SLOSH}.

翻译:从固定结构数据中学习是机器学习和计算机视觉中许多应用中的一个基本问题。 本文侧重于使用近近近邻( ANN) 的解决方案, 特别是地点敏感的散列, 从固定结构数据中进行非参数和数据独立的学习。 我们考虑从输入组查询中进行集合检索的问题。 这种检索问题要求:(1) 建立一个高效机制, 计算各组之间的距离/ 差异, 2 建立一个适当的数据结构, 用于快速近邻搜索。 为此, 我们提议将剪切- Wasserstein 设置嵌入为一种计算高效的“ 设置-2- Victor” 机制, 使下游ANN 能够有理论保证。 设定元素被作为来自未知基本分布的样本处理, 并且使用 Clit- Wasserstein 距离来比较各组。 我们展示了我们的算法的有效性, 以Set- locality 敏感散列( SLOSH) 的标记, 将我们提议的嵌入与标准集的方法进行比较, 包括 Genalization (GM) 嵌入/ 和连接/ Coofitting- courting 。