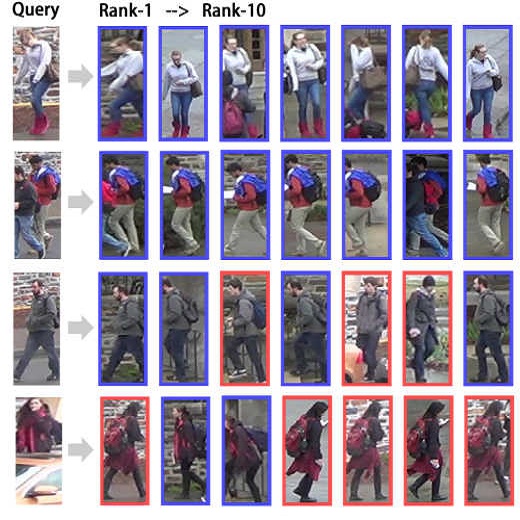

Text-to-image person retrieval aims to identify the target person based on a given textual description query. The primary challenge is to learn a mapping of the visual and textual modalities into a common latent space. Prior works have attempted to address this challenge by using separately pre-trained unimodal models to extract visual and textual features. However, these approaches lack the underlying alignment capabilities needed to match multimodal data effectively. In addition, these works use prior information to explore explicit part alignments, which may distort intra-modality information. To alleviate these issues, we present IRRA: a cross-modal Implicit Relation Reasoning and Aligning framework that learns relations between local visual-textual tokens and enhances global image-text matching without requiring additional prior supervision. Specifically, we first design an Implicit Relation Reasoning module in a masked language modeling paradigm. This achieves cross-modal interaction by integrating the visual cues into the textual tokens with a cross-modal multimodal interaction encoder. Second, to globally align the visual and textual embeddings, Similarity Distribution Matching is proposed to minimize the KL divergence between image-text similarity distributions and the normalized label matching distributions. The proposed method achieves new state-of-the-art results on all three public datasets, with a notable margin of about 3%-9% in Rank-1 accuracy compared to prior methods.
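The Similarity Distribution Matching idea can be illustrated with a minimal sketch in plain Python. The abstract only states that a KL divergence is minimized between image-text similarity distributions and normalized label matching distributions. Everything else here is an assumption made for illustration: cosine similarity, a softmax with a `temperature` parameter, the KL direction (predicted distribution relative to label distribution), the `eps` smoothing term, summing the image-to-text and text-to-image directions, and the function names `sdm_direction` and `sdm_loss`.

```python
import math


def l2_normalize(vec):
    # Scale a vector to unit length so a dot product equals cosine similarity.
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def softmax(row):
    # Numerically stable softmax over one row of scaled similarities.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def sdm_direction(queries, gallery, ids, temperature=0.02, eps=1e-8):
    """Mean KL(p || q) over queries for one retrieval direction.

    p: softmax over scaled cosine similarities (assumed form).
    q: label matching row, normalized to sum to 1.
    ids[i] is the person identity of pair i; pair i and pair j match
    when they share an identity.
    """
    q_feats = [l2_normalize(v) for v in queries]
    g_feats = [l2_normalize(v) for v in gallery]
    loss = 0.0
    for i, q_vec in enumerate(q_feats):
        sims = [sum(a * b for a, b in zip(q_vec, g_vec)) / temperature
                for g_vec in g_feats]
        p = softmax(sims)
        matches = [1.0 if ids[i] == ids[j] else 0.0 for j in range(len(ids))]
        n_match = sum(matches)  # at least 1: pair i matches itself
        q = [m / n_match for m in matches]
        # eps keeps both logarithms finite when a probability is zero.
        loss += sum(pj * (math.log(pj + eps) - math.log(qj + eps))
                    for pj, qj in zip(p, q))
    return loss / len(q_feats)


def sdm_loss(image_feats, text_feats, ids, temperature=0.02):
    # Assumed bidirectional form: image-to-text plus text-to-image.
    return (sdm_direction(image_feats, text_feats, ids, temperature)
            + sdm_direction(text_feats, image_feats, ids, temperature))
```

Calling `sdm_loss(image_feats, text_feats, ids)` on a batch of paired embeddings gives a value close to zero when each image is most similar to the texts sharing its identity, and a large value when similarities concentrate on non-matching pairs. In a real training setup the same computation would be written with a tensor library so that gradients can flow to the encoders.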
