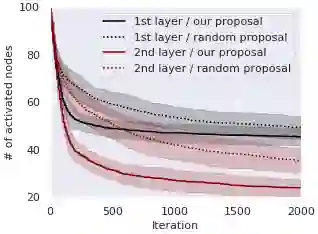

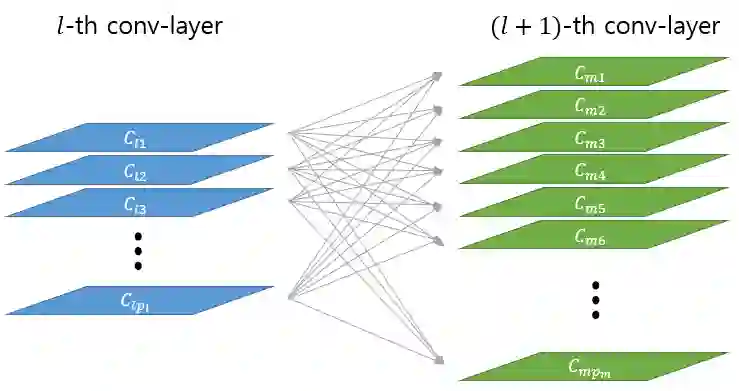

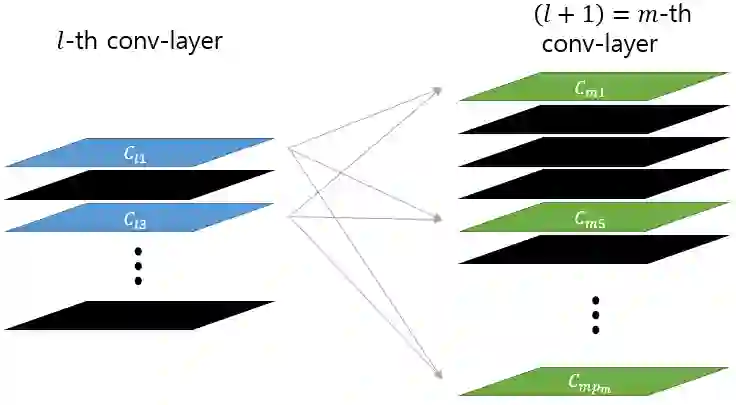

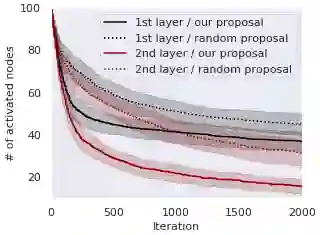

As data size and computing power increase, the architectures of deep neural networks (DNNs) have grown larger and more complex, and there is a growing need to simplify them. In this paper, we propose a novel sparse Bayesian neural network (BNN) that searches for a good DNN of appropriate complexity. We place a masking variable at each node; according to the posterior distribution, these variables can turn off some nodes, yielding a node-wise sparse DNN. We devise a prior distribution under which the posterior distribution is theoretically optimal (i.e., minimax optimal and adaptive), and we develop an efficient MCMC algorithm. By analyzing several benchmark datasets, we show that the proposed BNN performs well compared with existing methods: it discovers well-condensed DNN architectures whose prediction accuracy and uncertainty quantification are similar to those of large DNNs.
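To make the node-wise masking idea concrete, the following is a minimal NumPy sketch, not the implementation used in the paper. It assumes a ReLU multilayer perceptron in which each hidden node's output is multiplied by a binary mask, and it draws the masks at random purely for illustration; the prior distribution and the MCMC sampler described above are not reproduced here. The function names `masked_mlp_forward` and `prune`, the layer sizes, and the random seed are all hypothetical.

```python
import numpy as np


def masked_mlp_forward(x, weights, biases, masks):
    """Forward pass of a ReLU MLP whose hidden nodes are gated by binary masks.

    weights[l] has shape (d_in, d_out). masks[l] has shape (d_out,) for each
    hidden layer l. The output layer is left unmasked.
    """
    h = x
    for l, mask in enumerate(masks):
        h = np.maximum(h @ weights[l] + biases[l], 0.0)  # ReLU hidden layer
        h = h * mask  # a mask entry of 0 switches the node off
    return h @ weights[-1] + biases[-1]


def prune(weights, biases, masks):
    """Drop masked-out nodes, returning a smaller dense network."""
    W = [w.copy() for w in weights]
    b = [v.copy() for v in biases]
    for l, mask in enumerate(masks):
        keep = mask.astype(bool)
        W[l] = W[l][:, keep]          # remove the node's incoming weights
        b[l] = b[l][keep]             # remove the node's bias
        W[l + 1] = W[l + 1][keep, :]  # remove the node's outgoing weights
    return W, b


# Illustrative setup (assumed sizes): 4 inputs, two hidden layers of 8, 1 output.
rng = np.random.default_rng(0)
sizes = [4, 8, 8, 1]
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [rng.normal(size=n) for n in sizes[1:]]

# Random masks stand in for a single posterior draw of the masking variables.
masks = [rng.integers(0, 2, size=n) for n in sizes[1:-1]]

x = rng.normal(size=(5, sizes[0]))
y_masked = masked_mlp_forward(x, weights, biases, masks)

# The pruned network uses all-ones masks because every remaining node is active.
pruned_W, pruned_b = prune(weights, biases, masks)
ones = [np.ones(len(v)) for v in pruned_b[:-1]]
y_pruned = masked_mlp_forward(x, pruned_W, pruned_b, ones)
```

Because a switched-off node outputs exactly zero, deleting it together with its incoming and outgoing weights leaves the network function unchanged. This is why a node-wise sparse network can be stored and evaluated as a genuinely smaller dense network.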
