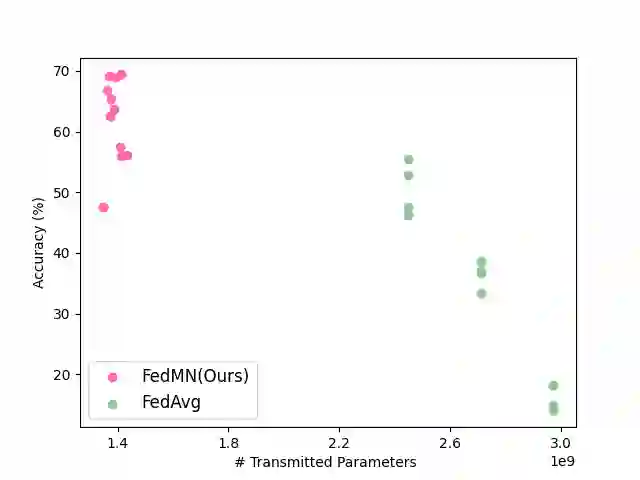

Personalized Federated Learning (PFL), which collaboratively trains a federated model while accounting for each local client under privacy constraints, has attracted considerable attention. Despite its popularity, existing PFL approaches have been observed to yield sub-optimal solutions when the joint distributions of the local clients diverge. To address this issue, we present Federated Modular Network (FedMN), a novel PFL approach that adaptively selects sub-modules from a module pool to assemble heterogeneous neural architectures for different clients. FedMN adopts a lightweight routing hypernetwork to model the joint distribution on each client and to produce a personalized selection of module blocks for each client. To reduce the communication burden of existing FL methods, we develop an efficient scheme for interaction between the clients and the server. Extensive experiments on real-world testbeds show that the proposed FedMN is both more effective and more efficient than the baselines.
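
The abstract combines two mechanisms: a routing hypernetwork that picks sub-modules from a shared pool for each client, and a lighter client-server exchange. The sketch below illustrates one way these could fit together. It is a minimal sketch under stated assumptions, not FedMN's actual implementation. The linear-plus-sigmoid router, the gate threshold, the client embedding (assumed to summarize a client's local data), the pool sizes, and the helpers `build_upload` and `aggregate` are all hypothetical. It further assumes that each client uploads only the modules it selected and that the server averages each module over the clients that used it.

```python
import math
import random
from typing import Dict, List, Tuple

# Hypothetical sizes: the real module pool and router dimensions are not given here.
NUM_LAYERS = 3         # depth of the modular network
MODULES_PER_LAYER = 4  # candidate modules in the pool at each layer
EMBED_DIM = 5          # size of the (assumed) client embedding fed to the router

Key = Tuple[int, int]  # (layer index, module index)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class RoutingHypernetwork:
    """Assumed router: client embedding -> one gate score per (layer, module)."""

    def __init__(self, seed: int = 0) -> None:
        rng = random.Random(seed)
        # One weight vector per candidate module at every layer.
        self.weights = [
            [[rng.gauss(0.0, 1.0) for _ in range(EMBED_DIM)] for _ in range(MODULES_PER_LAYER)]
            for _ in range(NUM_LAYERS)
        ]

    def select(self, embedding: List[float], threshold: float = 0.5) -> List[List[int]]:
        """For each layer, return the indices of modules whose gate exceeds the threshold."""
        selection = []
        for layer_w in self.weights:
            gates = [sigmoid(sum(w * e for w, e in zip(row, embedding))) for row in layer_w]
            chosen = [k for k, g in enumerate(gates) if g > threshold]
            if not chosen:  # keep the highest-scoring module so no layer is empty
                chosen = [max(range(MODULES_PER_LAYER), key=lambda k: gates[k])]
            selection.append(chosen)
        return selection


def build_upload(local_modules: Dict[Key, List[float]],
                 selection: List[List[int]]) -> Dict[Key, List[float]]:
    """Client side: send only the parameters of the modules this client selected."""
    return {(l, k): local_modules[(l, k)] for l, ks in enumerate(selection) for k in ks}


def aggregate(uploads: List[Dict[Key, List[float]]]) -> Dict[Key, List[float]]:
    """Server side: average each module over only the clients that uploaded it."""
    sums: Dict[Key, List[float]] = {}
    counts: Dict[Key, int] = {}
    for upload in uploads:
        for key, params in upload.items():
            if key not in sums:
                sums[key] = [0.0] * len(params)
                counts[key] = 0
            sums[key] = [s + p for s, p in zip(sums[key], params)]
            counts[key] += 1
    return {key: [s / counts[key] for s in sums[key]] for key in sums}


if __name__ == "__main__":
    router = RoutingHypernetwork(seed=0)
    selection = router.select([1.0, 0.0, -1.0, 0.5, 0.2])
    local = {(l, k): [float(l), float(k)] for l in range(NUM_LAYERS) for k in range(MODULES_PER_LAYER)}
    upload = build_upload(local, selection)
    print("selected modules per layer:", selection)
    print("uploaded", len(upload), "of", len(local), "modules")
```

Because each client transmits only its selected modules, the upload shrinks in proportion to how sparse the routing is. A real system would also need a differentiable relaxation of the hard threshold (for example, a Gumbel-style gate) to train the router, which this sketch omits.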
