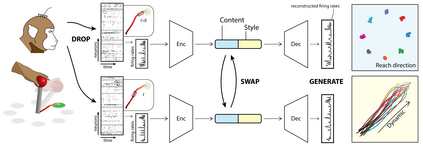

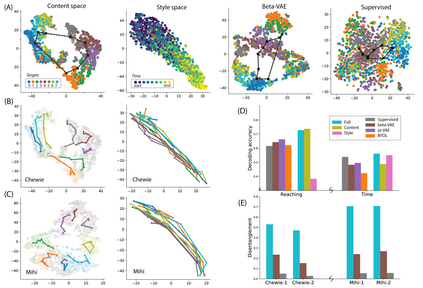

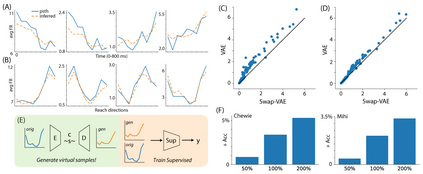

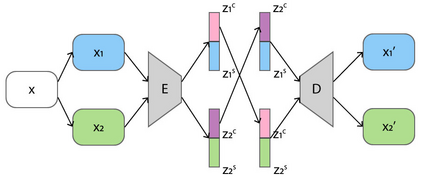

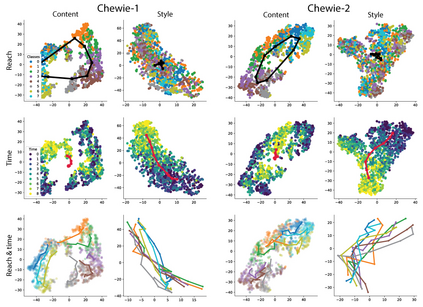

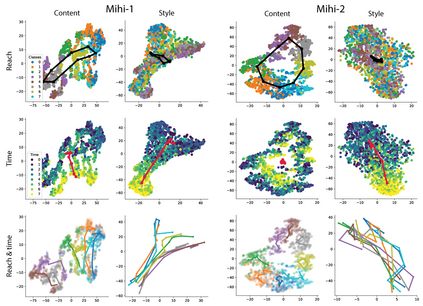

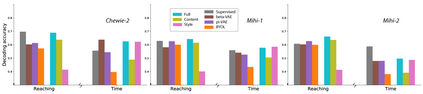

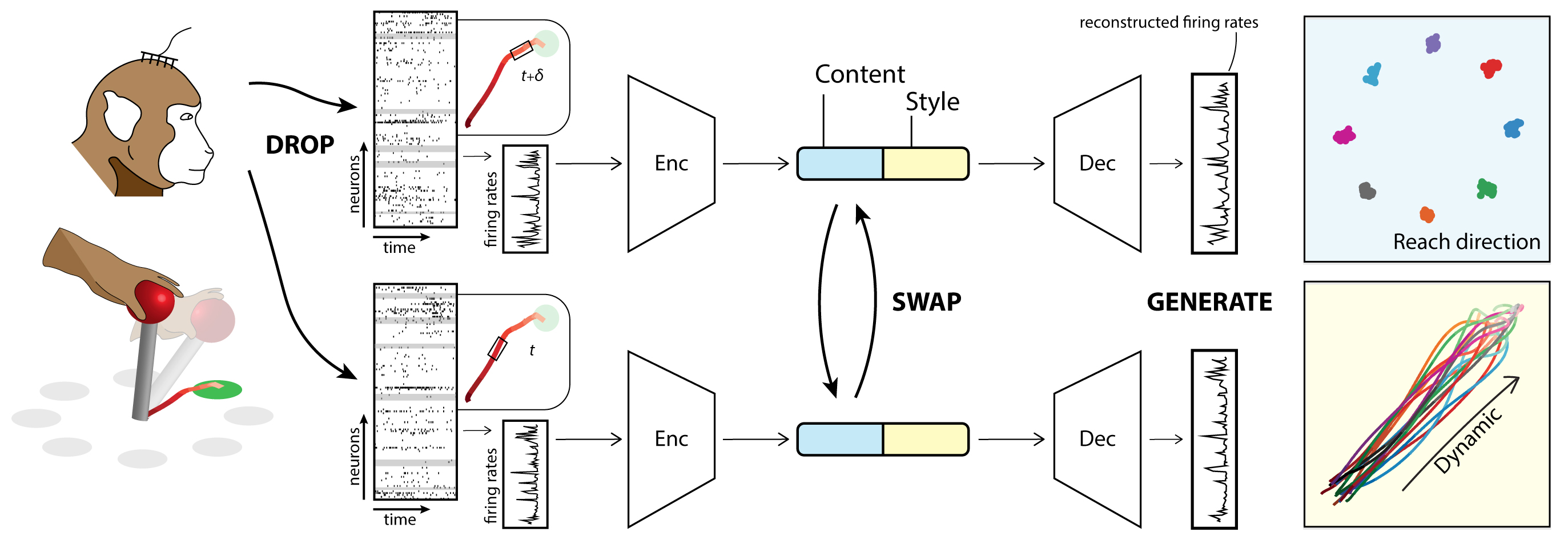

Meaningful and simplified representations of neural activity can yield insights into how and what information is being processed within a neural circuit. However, without labels, finding representations that reveal the link between the brain and behavior can be challenging. Here, we introduce a novel unsupervised approach for learning disentangled representations of neural activity called Swap-VAE. Our approach combines a generative modeling framework with an instance-specific alignment loss that tries to maximize the representational similarity between transformed views of the input (brain state). These transformed (or augmented) views are created by dropping out neurons and jittering samples in time, which intuitively should lead the network to a representation that maintains both temporal consistency and invariance to the specific neurons used to represent the neural state. Through evaluations on both synthetic data and neural recordings from hundreds of neurons in different primate brains, we show that it is possible to build representations that disentangle neural datasets along relevant latent dimensions linked to behavior.
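The two augmentations named in the abstract (dropping out neurons and jittering samples in time) and the idea of aligning the resulting views can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `make_view` and `alignment_loss`, the parameters `drop_prob` and `max_jitter`, the linear stand-in encoder `W`, and the use of negative cosine similarity as the alignment measure are all assumptions made for illustration.

```python
import numpy as np

def make_view(spikes, t, rng, drop_prob=0.2, max_jitter=2):
    """Return one augmented view of the population activity at time bin t.

    spikes: array of shape (T, N), binned activity of N neurons (assumed layout).
    """
    T, n_neurons = spikes.shape
    # Temporal jitter: sample a nearby time bin, clipped to the valid range.
    shift = rng.integers(-max_jitter, max_jitter + 1)
    t_view = int(np.clip(t + shift, 0, T - 1))
    # Neuron dropout: zero out a random subset of neurons.
    keep = rng.random(n_neurons) >= drop_prob
    return spikes[t_view] * keep

def alignment_loss(z1, z2, eps=1e-8):
    """Negative cosine similarity between two latent vectors (assumed measure)."""
    cos = np.dot(z1, z2) / (np.linalg.norm(z1) * np.linalg.norm(z2) + eps)
    return -cos

rng = np.random.default_rng(0)
spikes = rng.poisson(2.0, size=(100, 50)).astype(float)  # synthetic data
W = rng.normal(size=(50, 8))  # hypothetical linear encoder, for illustration only

# Two independently augmented views of the same brain state (time bin 10).
x1 = make_view(spikes, 10, rng)
x2 = make_view(spikes, 10, rng)
loss = alignment_loss(x1 @ W, x2 @ W)
```

Minimizing a loss of this kind pushes the encoder to map both views to similar latent vectors, which is the intuition behind invariance to the specific neurons observed and consistency across nearby time points. In the approach described above, this alignment term is combined with a generative modeling objective, which the sketch omits.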
