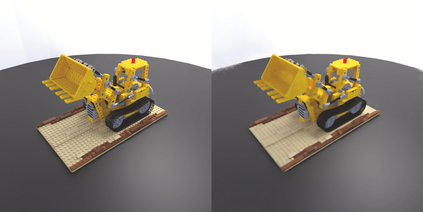

Neural scene representations, such as Neural Radiance Fields (NeRF), are based on training a multilayer perceptron (MLP) using a set of color images with known poses. An increasing number of devices now produce RGB-D(color + depth) information, which has been shown to be very important for a wide range of tasks. Therefore, the aim of this paper is to investigate what improvements can be made to these promising implicit representations by incorporating depth information with the color images. In particular, the recently proposed Mip-NeRF approach, which uses conical frustums instead of rays for volume rendering, allows one to account for the varying area of a pixel with distance from the camera center. The proposed method additionally models depth uncertainty. This allows to address major limitations of NeRF-based approaches including improving the accuracy of geometry, reduced artifacts, faster training time, and shortened prediction time. Experiments are performed on well-known benchmark scenes, and comparisons show improved accuracy in scene geometry and photometric reconstruction, while reducing the training time by 3 - 5 times.

翻译:神经光谱场(Neoral Radiance Fields (NERF))等神经场景示意图的基础是使用一组已知外形的彩色图像对多层光谱(MLP)进行培训。越来越多的设备现在产生RGB-D(颜色+深度)信息,这已证明对一系列广泛的任务非常重要。因此,本文件的目的是调查通过将深度信息与彩色图像结合起来,对这些有希望的隐含表示可以作出哪些改进。特别是,最近提出的Mip-NeRF 方法使用锥形透镜而不是射线来进行体积成像,使一个人能够计算与摄影中心相距遥远的像素的不同区域。拟议的方法是额外的模型深度不确定性。这可以解决基于NERF方法的主要局限性,包括提高地理测量的准确性、减少人工制品、缩短培训时间和缩短预测时间。实验是在众所周知的基准场景上进行的,比较表明现场几何和光度重建的准确性提高了,同时将培训时间缩短3-5次。