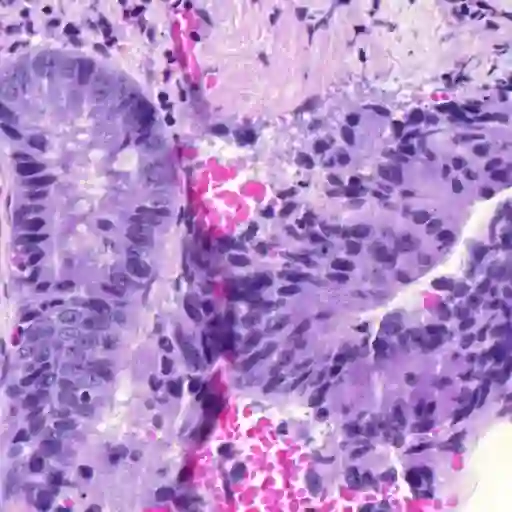

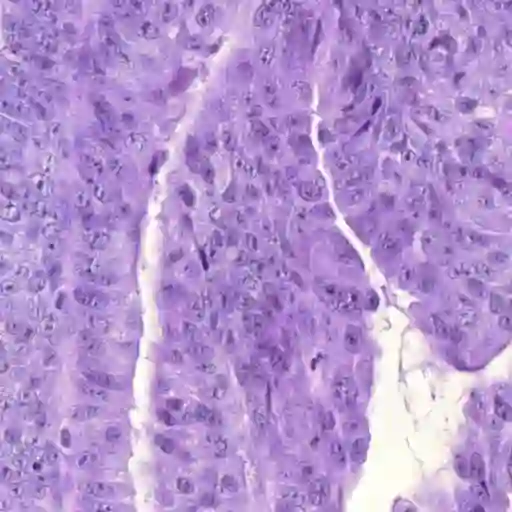

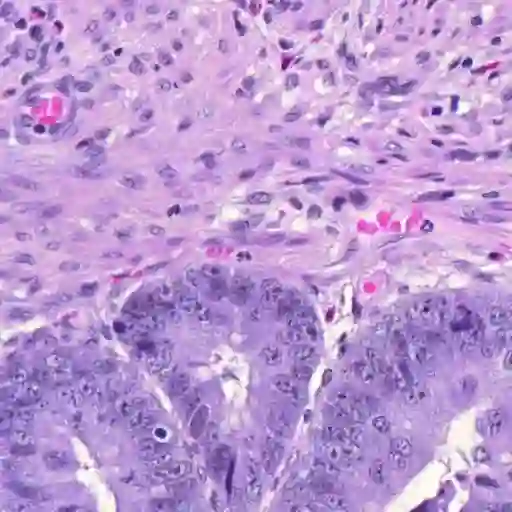

Classical multiple instance learning (MIL) methods often rely on the assumption that instances are independent and identically distributed (i.i.d.), and hence neglect the potentially rich contextual information beyond individual entities. Transformers with global self-attention modules, on the other hand, have been proposed to model the interdependencies among all instances. In this paper, however, we ask: is global relation modeling with self-attention necessary, or can self-attention computations be appropriately restricted to local regimes in large-scale whole slide images (WSIs)? We propose a general-purpose local attention graph-based Transformer for MIL (LA-MIL), which introduces an inductive bias by explicitly contextualizing instances in adaptive local regimes of arbitrary size. Additionally, an efficiently adapted loss function enables our approach to learn expressive WSI embeddings for the joint analysis of multiple biomarkers. We demonstrate that LA-MIL achieves state-of-the-art results in mutation prediction for gastrointestinal cancer, outperforming existing models on important biomarkers such as microsatellite instability for colorectal cancer. This suggests that local self-attention is sufficient to model dependencies on par with global modules. Our implementation will be published.
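
To make the contrast between global and local self-attention concrete, here is a minimal NumPy sketch of attention restricted to local regimes. It assumes that each instance is a WSI tile with 2-D slide coordinates and that a tile's local regime is its k nearest tiles in a spatial graph. The function names `knn_mask` and `local_self_attention`, the kNN neighbourhood definition, the single-head formulation, and the toy features are all illustrative assumptions, not the LA-MIL implementation.

```python
import numpy as np


def knn_mask(coords: np.ndarray, k: int) -> np.ndarray:
    """Return an (N, N) boolean mask; row i is True for the k tiles nearest to tile i.

    Assumption: with distinct coordinates each tile is its own nearest neighbour
    (distance 0), so every tile attends at least to itself.
    """
    diff = coords[:, None, :] - coords[None, :, :]    # (N, N, 2) pairwise offsets
    dist2 = (diff ** 2).sum(axis=-1)                  # squared Euclidean distances
    nearest = np.argsort(dist2, axis=1, kind="stable")[:, :k]
    mask = np.zeros(dist2.shape, dtype=bool)
    np.put_along_axis(mask, nearest, True, axis=1)
    return mask


def local_self_attention(x, coords, w_q, w_k, w_v, k):
    """Single-head scaled dot-product attention restricted to each tile's k nearest neighbours."""
    q, key, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ key.T / np.sqrt(q.shape[-1])
    # Pairs outside the local regime get -inf, i.e. exactly zero weight after the softmax.
    scores = np.where(knn_mask(coords, k), scores, -np.inf)
    scores = scores - scores.max(axis=1, keepdims=True)   # numerically stable softmax
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ v, weights


# Toy bag (hypothetical data): two spatially separated clusters of three tiles each.
rng = np.random.default_rng(0)
coords = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]], dtype=float)
x = rng.normal(size=(6, 4))                                # hypothetical 4-d tile features
w_q, w_k, w_v = (rng.normal(size=(4, 4)) for _ in range(3))

# k = 3: each tile attends only within its own cluster (block-diagonal attention).
out_local, attn_local = local_self_attention(x, coords, w_q, w_k, w_v, k=3)
# k = N = 6: the mask is all True, which recovers ordinary global self-attention.
out_global, attn_global = local_self_attention(x, coords, w_q, w_k, w_v, k=6)
```

Setting k to the number of tiles turns the local mask into an all-True mask, so global self-attention is the special case k = N; shrinking k confines each tile's context to its spatial neighbourhood. This dense-mask version still computes all N × N scores and is meant only to show the masking logic; a practical implementation for large WSIs would more likely compute scores only along graph edges.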
