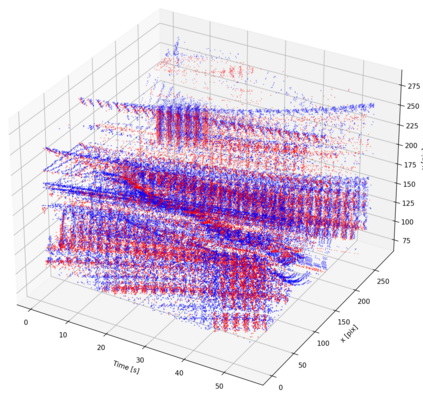

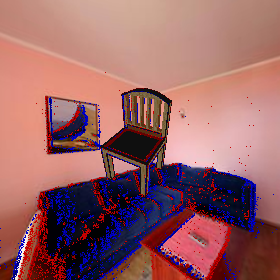

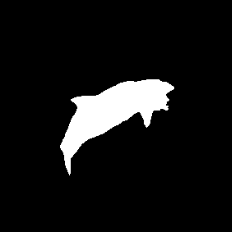

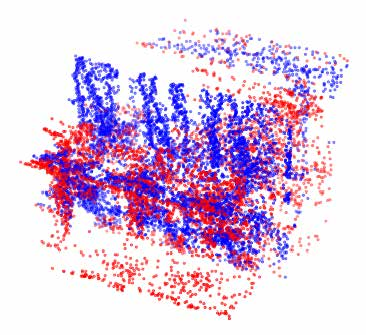

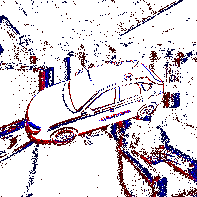

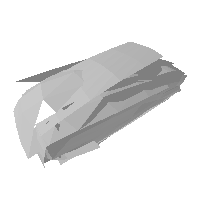

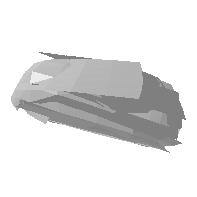

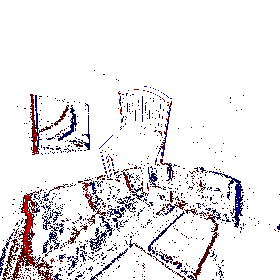

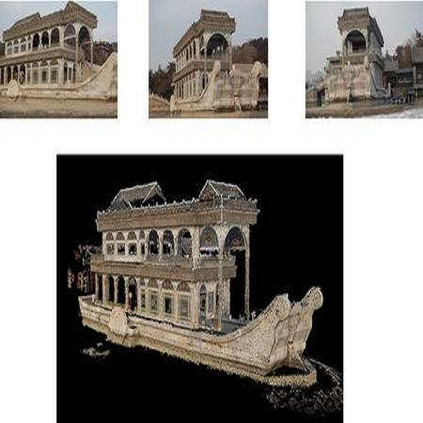

3D shape reconstruction is a primary component of augmented/virtual reality. Despite being highly advanced, existing solutions based on RGB, RGB-D and Lidar sensors are power and data intensive, which introduces challenges for deployment in edge devices. We approach 3D reconstruction with an event camera, a sensor with significantly lower power, latency and data expense while enabling high dynamic range. While previous event-based 3D reconstruction methods are primarily based on stereo vision, we cast the problem as multi-view shape from silhouette using a monocular event camera. The output from a moving event camera is a sparse point set of space-time gradients, largely sketching scene/object edges and contours. We first introduce an event-to-silhouette (E2S) neural network module to transform a stack of event frames to the corresponding silhouettes, with additional neural branches for camera pose regression. Second, we introduce E3D, which employs a 3D differentiable renderer (PyTorch3D) to enforce cross-view 3D mesh consistency and fine-tune the E2S and pose network. Lastly, we introduce a 3D-to-events simulation pipeline and apply it to publicly available object datasets and generate synthetic event/silhouette training pairs for supervised learning.

翻译:3D 形状重建是增强/ 虚拟现实的主要组成部分。 尽管以 RGB、 RGB- D 和 Lidar 传感器为高度先进, 现有解决方案基于 RGB、 RGB- D 和 Lidar 传感器是动力和数据密集, 给边缘装置的部署带来挑战。 我们用一个事件相机、 一个电力、 延缓度和数据成本大大降低的传感器来进行 3D 重建 。 虽然先前的事件3D 重建方法主要基于立体影像, 但是我们主要基于立体影像, 我们用单体事件摄像头将问题投向侧面图像。 移动事件相机的输出是一组空间时空梯度稀少的点, 主要是素描场/ 弹边和等。 我们首先推出一个事件到太阳的神经网络模块, 将一系列的事件框架转换到相应的圆形轮廓中,, 并增加摄像头的神经分支 。 其次, 我们引入 E3D, 使用 3D 不同的制模器( PyTorch3D) 来强制执行三维系的一致性和微调的 E2Sevenal- train- triutal- triutal 和制成平台网络 数据网络 。