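CVPR 2019 | A Quick Roundup of 15 Papers (Covering Object Detection, Semantic Segmentation, Pose Estimation, and More)

[Overview] The list of papers accepted to CVPR 2019 has been released, but it contains only index numbers, so there is no complete collection of the papers yet. CVer has been collecting them as well, and this post rounds up 15 CVPR 2019 papers covering object detection, semantic segmentation, pose estimation, and other areas.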

姿态估计

[1] CVPR 2019 Pose estimation文章,目前SOTA,已经开源

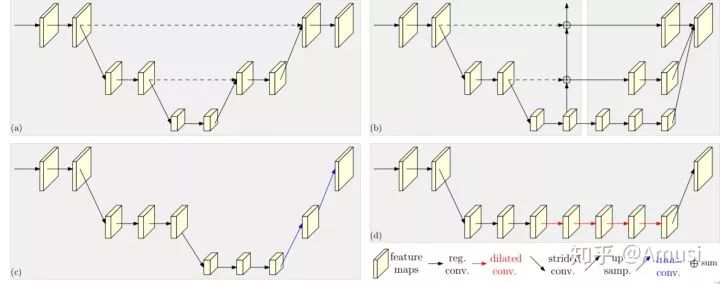

论文题目:Deep High-Resolution Representation Learning for Human Pose Estimation

作者:Ke Sun, Bin Xiao, Dong Liu, Jingdong Wang

论文链接:https://arxiv.org/abs/1902.09212

代码链接:https://github.com/leoxiaobin/deep-high-resolution-net.pytorch

Abstract: This is an official PyTorch implementation of Deep High-Resolution Representation Learning for Human Pose Estimation. In this work, we are interested in the human pose estimation problem with a focus on learning reliable high-resolution representations. Most existing methods recover high-resolution representations from low-resolution representations produced by a high-to-low resolution network. Instead, our proposed network maintains high-resolution representations through the whole process. We start from a high-resolution subnetwork as the first stage, gradually add high-to-low resolution subnetworks one by one to form more stages, and connect the multi-resolution subnetworks in parallel. We conduct repeated multi-scale fusions such that each of the high-to-low resolution representations receives information from other parallel representations over and over, leading to rich high-resolution representations. As a result, the predicted keypoint heatmap is potentially more accurate and spatially more precise. We empirically demonstrate the effectiveness of our network through the superior pose estimation results over two benchmark datasets: the COCO keypoint detection dataset and the MPII Human Pose dataset.

Video Object Segmentation

[2] CVPR 2019 VOS paper

Title: FEELVOS: Fast End-to-End Embedding Learning for Video Object Segmentation

Authors: Paul Voigtlaender, Yuning Chai, Florian Schroff, Hartwig Adam, Bastian Leibe, Liang-Chieh Chen

Paper: https://arxiv.org/abs/1902.09513

Abstract: Many of the recent successful methods for video object segmentation (VOS) are overly complicated, heavily rely on fine-tuning on the first frame, and/or are slow, and are hence of limited practical use. In this work, we propose FEELVOS as a simple and fast method which does not rely on fine-tuning. In order to segment a video, for each frame FEELVOS uses a semantic pixel-wise embedding together with a global and a local matching mechanism to transfer information from the first frame and from the previous frame of the video to the current frame. In contrast to previous work, our embedding is only used as an internal guidance of a convolutional network. Our novel dynamic segmentation head allows us to train the network, including the embedding, end-to-end for the multiple object segmentation task with a cross entropy loss. We achieve a new state of the art in video object segmentation without fine-tuning on the DAVIS 2017 validation set with a J&F measure of 69.1%.

Action Recognition

[3] CVPR 2019 action recognition paper

Title: An Attention Enhanced Graph Convolutional LSTM Network for Skeleton-Based Action Recognition

Authors: Chenyang Si, Wentao Chen, Wei Wang, Liang Wang, Tieniu Tan

Paper: https://arxiv.org/abs/1902.09130

Abstract: Skeleton-based action recognition is an important task that requires an adequate understanding of the movement characteristics of a human action from the given skeleton sequence. Recent studies have shown that exploring spatial and temporal features of the skeleton sequence is vital for this task. Nevertheless, how to effectively extract discriminative spatial and temporal features is still a challenging problem. In this paper, we propose a novel Attention Enhanced Graph Convolutional LSTM Network (AGC-LSTM) for human action recognition from skeleton data. The proposed AGC-LSTM can not only capture discriminative features in spatial configuration and temporal dynamics but also explore the co-occurrence relationship between spatial and temporal domains. We also present a temporal hierarchical architecture to increase the temporal receptive fields of the top AGC-LSTM layer, which boosts the ability to learn the high-level semantic representation and significantly reduces the computation cost. Furthermore, to select discriminative spatial information, the attention mechanism is employed to enhance information of key joints in each AGC-LSTM layer. Experimental results on two datasets are provided: the NTU RGB+D dataset and the Northwestern-UCLA dataset. The comparison results demonstrate the effectiveness of our approach and show that our approach outperforms the state-of-the-art methods on both datasets.

目标检测

[4] CVPR2019 检测新文

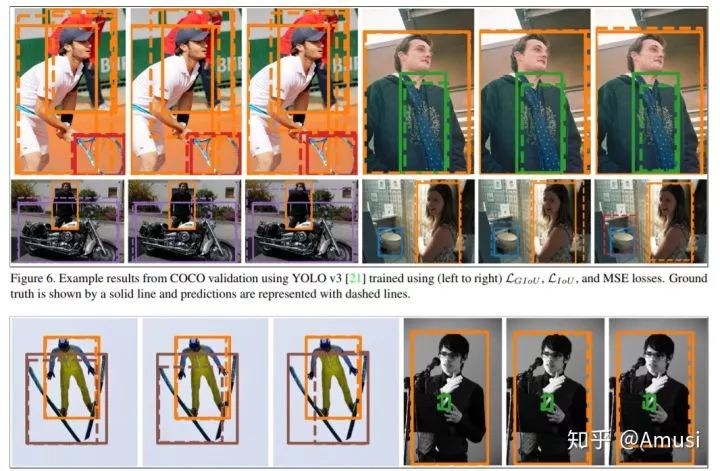

论文题目:Generalized Intersection over Union: A Metric and A Loss for Bounding Box Regression

作者:Hamid Rezatofighi, Nathan Tsoi, JunYoung Gwak, Amir Sadeghian, Ian Reid, Silvio Savarese

论文链接:https://arxiv.org/abs/1902.09630

Abstract: Intersection over Union (IoU) is the most popular evaluation metric used in the object detection benchmarks. However, there is a gap between optimizing the commonly used distance losses for regressing the parameters of a bounding box and maximizing this metric value. The optimal objective for a metric is the metric itself. In the case of axis-aligned 2D bounding boxes, it can be shown that IoU can be directly used as a regression loss. However, IoU has a plateau making it infeasible to optimize in the case of non-overlapping bounding boxes. In this paper, we address the weaknesses of IoU by introducing a generalized version as both a new loss and a new metric. By incorporating this generalized IoU (GIoU) as a loss into the state-of-the-art object detection frameworks, we show a consistent improvement on their performance using both the standard, IoU based, and new, GIoU based, performance measures on popular object detection benchmarks such as PASCAL VOC and MS COCO.
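
The abstract does not spell out the formula, so the following is only a minimal sketch of GIoU as it is commonly stated: for two boxes A and B with smallest enclosing box C, GIoU = IoU - |C \ (A ∪ B)| / |C|. The function names and the (x1, y1, x2, y2) box layout are assumptions made for illustration, and both boxes are assumed to have positive area.

```python
def iou_and_giou(box_a, box_b):
    """Return (IoU, GIoU) for two axis-aligned boxes given as (x1, y1, x2, y2).

    Assumes x1 < x2 and y1 < y2 for both boxes (positive area).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)

    # Intersection rectangle (zero width/height when the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    union = area_a + area_b - inter
    iou = inter / union

    # Smallest axis-aligned box C enclosing both A and B.
    enclose_w = max(ax2, bx2) - min(ax1, bx1)
    enclose_h = max(ay2, by2) - min(ay1, by1)
    area_c = enclose_w * enclose_h

    # Penalize the part of C that is covered by neither box.
    giou = iou - (area_c - union) / area_c
    return iou, giou


def giou_loss(box_a, box_b):
    """Loss form used in this sketch: 1 - GIoU, which lies in [0, 2)."""
    return 1.0 - iou_and_giou(box_a, box_b)[1]
```

For two non-overlapping boxes the IoU stays at 0 however far apart they are, whereas GIoU becomes more negative as the gap grows, so the loss still distinguishes "near miss" from "far miss".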

Image Classification

[5] CVPR 2019 new classification paper

Title: Learning a Deep ConvNet for Multi-label Classification with Partial Labels

Authors: Thibaut Durand, Nazanin Mehrasa, Greg Mori

Paper: https://arxiv.org/abs/1902.09720

Abstract: Deep ConvNets have shown great performance for single-label image classification (e.g., ImageNet), but it is necessary to move beyond the single-label classification task because pictures of everyday life are inherently multi-label. Multi-label classification is a more difficult task than single-label classification because both the input images and output label spaces are more complex. Furthermore, collecting clean multi-label annotations is more difficult to scale up than single-label annotations. To reduce the annotation cost, we propose to train a model with partial labels, i.e., only some labels are known per image. We first empirically compare different labeling strategies to show the potential for using partial labels on multi-label datasets. Then to learn with partial labels, we introduce a new classification loss that exploits the proportion of known labels per example. Our approach allows the use of the same training settings as when learning with all the annotations. We further explore several curriculum learning based strategies to predict missing labels. Experiments are performed on three large-scale multi-label datasets: MS COCO, NUS-WIDE and Open Images.
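
To make the idea of a loss that "exploits the proportion of known labels" concrete, here is a simplified sketch, not the paper's exact loss: a binary cross-entropy summed over the known labels only and divided by their count, so that examples with few annotations are not down-weighted. The label encoding (1 = positive, -1 = negative, 0 = unknown) and the function name `partial_bce` are assumptions made for this illustration.

```python
import math


def partial_bce(probs, labels, eps=1e-7):
    """Binary cross-entropy over known labels only, normalized by their count.

    probs  : predicted probabilities, one per category.
    labels : 1 (positive), -1 (negative), or 0 (unknown); hypothetical encoding.
    """
    known = [(p, y) for p, y in zip(probs, labels) if y != 0]
    if not known:
        return 0.0  # Nothing is annotated, so there is nothing to learn from.

    total = 0.0
    for p, y in known:
        p = min(max(p, eps), 1.0 - eps)  # Clamp to avoid log(0).
        total += -math.log(p) if y == 1 else -math.log(1.0 - p)

    # Dividing by the number of known labels (rather than all categories)
    # keeps the loss scale independent of how many labels are missing.
    return total / len(known)
```

With this normalization, a prediction for an unknown category has no effect on the loss, and an image with one known label contributes on the same scale as a fully annotated one.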

3D目标检测

[6] CVPR2019 3D detection新文

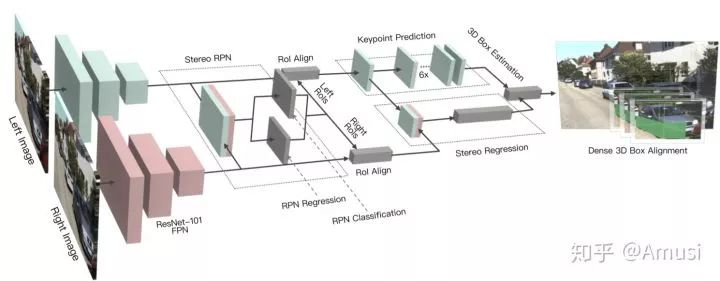

论文题目:Stereo R-CNN based 3D Object Detection for Autonomous Driving

作者:Peiliang Li, Xiaozhi Chen, Shaojie Shen

论文链接:https://arxiv.org/abs/1902.09738

Abstract: We propose a 3D object detection method for autonomous driving by fully exploiting the sparse and dense, semantic and geometry information in stereo imagery. Our method, called Stereo R-CNN, extends Faster R-CNN for stereo inputs to simultaneously detect and associate objects in left and right images. We add extra branches after the stereo Region Proposal Network (RPN) to predict sparse keypoints, viewpoints, and object dimensions, which are combined with 2D left-right boxes to calculate a coarse 3D object bounding box. We then recover the accurate 3D bounding box by a region-based photometric alignment using left and right RoIs. Our method does not require depth input or 3D position supervision, yet it outperforms all existing fully supervised image-based methods. Experiments on the challenging KITTI dataset show that our method outperforms the state-of-the-art stereo-based method by around 30% AP on both 3D detection and 3D localization tasks. Code will be made publicly available.

3D Reconstruction

[7] CVPR 2019 new 3D reconstruction paper

Title: Single-Image Piece-wise Planar 3D Reconstruction via Associative Embedding

Authors: Zehao Yu, Jia Zheng, Dongze Lian, Zihan Zhou, Shenghua Gao

Paper: https://arxiv.org/abs/1902.09777

Code: https://github.com/svip-lab/PlanarReconstruction

Abstract: Single-image piece-wise planar 3D reconstruction aims to simultaneously segment plane instances and recover 3D plane parameters from an image. Most recent approaches leverage convolutional neural networks (CNNs) and achieve promising results. However, these methods are limited to detecting a fixed number of planes in a certain learned order. To tackle this problem, we propose a novel two-stage method based on associative embedding, inspired by its recent success in instance segmentation. In the first stage, we train a CNN to map each pixel to an embedding space where pixels from the same plane instance have similar embeddings. Then, the plane instances are obtained by grouping the embedding vectors in planar regions via an efficient mean shift clustering algorithm. In the second stage, we estimate the parameter for each plane instance by considering both pixel-level and instance-level consistencies. With the proposed method, we are able to detect an arbitrary number of planes. Extensive experiments on public datasets validate the effectiveness and efficiency of our method. Furthermore, our method runs at 30 fps at testing time, and thus could facilitate many real-time applications such as visual SLAM and human-robot interaction.
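
The grouping step in the first stage can be illustrated with a plain flat-kernel mean shift over embedding vectors. This is a textbook version written for clarity, not the efficient variant the authors describe; the function name, the `bandwidth` parameter, and the rule for merging converged modes are assumptions made for this sketch.

```python
import math


def mean_shift(points, bandwidth, iterations=20):
    """Cluster embedding vectors with a flat-kernel mean shift.

    Returns (labels, centers): a cluster index per input point and the list
    of cluster centers. The number of clusters is not fixed in advance.
    """
    modes = [tuple(p) for p in points]
    for _ in range(iterations):
        shifted = []
        for mode in modes:
            # Move each mode to the mean of all points within the bandwidth.
            neighbors = [p for p in points if math.dist(p, mode) <= bandwidth]
            shifted.append(
                tuple(sum(axis) / len(neighbors) for axis in zip(*neighbors))
            )
        modes = shifted

    # Points whose modes ended up close together belong to the same instance.
    centers, labels = [], []
    for mode in modes:
        for index, center in enumerate(centers):
            if math.dist(mode, center) < bandwidth / 2:
                labels.append(index)
                break
        else:
            centers.append(mode)
            labels.append(len(centers) - 1)
    return labels, centers
```

Because clusters emerge from the density of the embeddings rather than from a preset count, this kind of grouping is what allows an arbitrary number of plane instances per image.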

Point Cloud Segmentation

[8] CVPR 2019 new point cloud segmentation paper

Title: Associatively Segmenting Instances and Semantics in Point Clouds

Authors: Xinlong Wang, Shu Liu, Xiaoyong Shen, Chunhua Shen, Jiaya Jia

Paper: https://arxiv.org/abs/1902.09852

Code: https://github.com/WXinlong/ASIS

Abstract: A 3D point cloud describes the real scene precisely and intuitively. To date, how to segment diversified elements in such an informative 3D scene is rarely discussed. In this paper, we first introduce a simple and flexible framework to segment instances and semantics in point clouds simultaneously. Then, we propose two approaches which make the two tasks take advantage of each other, leading to a win-win situation. Specifically, we make instance segmentation benefit from semantic segmentation through learning semantic-aware point-level instance embedding. Meanwhile, semantic features of the points belonging to the same instance are fused together to make more accurate per-point semantic predictions. Our method largely outperforms the state-of-the-art method in 3D instance segmentation along with a significant improvement in 3D semantic segmentation.

3D Human Pose Estimation

[9] CVPR 2019 new 3D human pose estimation paper

Title: RepNet: Weakly Supervised Training of an Adversarial Reprojection Network for 3D Human Pose Estimation

Authors: Bastian Wandt, Bodo Rosenhahn

Paper: https://arxiv.org/abs/1902.09868

Abstract: This paper addresses the problem of 3D human pose estimation from single images. While for a long time human skeletons were parameterized and fitted to the observation by satisfying a reprojection error, nowadays researchers directly use neural networks to infer the 3D pose from the observations. However, most of these approaches ignore the fact that a reprojection constraint has to be satisfied and are sensitive to overfitting. We tackle the overfitting problem by ignoring 2D to 3D correspondences. This efficiently avoids a simple memorization of the training data and allows for a weakly supervised training. One part of the proposed reprojection network (RepNet) learns a mapping from a distribution of 2D poses to a distribution of 3D poses using an adversarial training approach. Another part of the network estimates the camera. This allows for the definition of a network layer that performs the reprojection of the estimated 3D pose back to 2D which results in a reprojection loss function. Our experiments show that RepNet generalizes well to unknown data and outperforms state-of-the-art methods when applied to unseen data. Moreover, our implementation runs in real-time on a standard desktop PC.
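
The reprojection idea can be sketched in a few lines. The example below assumes a weak-perspective camera represented as a 2x3 matrix and measures the distance between the input 2D joints and the reprojected 3D joints; the camera model, the function names, and the choice of norm are assumptions made for illustration and may differ from the paper's implementation.

```python
import math


def reproject(pose_3d, camera):
    """Project 3D joints to 2D with a 2x3 camera matrix (assumed camera model).

    pose_3d : list of (x, y, z) joints.
    camera  : two rows of three numbers each.
    """
    return [
        tuple(sum(row[k] * joint[k] for k in range(3)) for row in camera)
        for joint in pose_3d
    ]


def reprojection_loss(pose_2d, pose_3d, camera):
    """Root of the summed squared 2D error between observed and reprojected joints."""
    projected = reproject(pose_3d, camera)
    squared_error = sum(
        (u - pu) ** 2 + (v - pv) ** 2
        for (u, v), (pu, pv) in zip(pose_2d, projected)
    )
    return math.sqrt(squared_error)
```

A loss of this kind needs only the 2D input and the network's own outputs (3D pose and camera), which is why it fits a weakly supervised setting without paired 2D-3D correspondences.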

3D Face

[10] CVPR 2019 new 3D face paper

Title: Disentangled Representation Learning for 3D Face Shape

Authors: Zi-Hang Jiang, Qianyi Wu, Keyu Chen, Juyong Zhang

Paper: https://arxiv.org/abs/1902.09887

Abstract: In this paper, we present a novel strategy to design a disentangled 3D face shape representation. Specifically, a given 3D face shape is decomposed into an identity part and an expression part, which are both encoded and decoded in a nonlinear way. To solve this problem, we propose an attribute decomposition framework for 3D face meshes. To better represent face shapes, which are usually nonlinearly deformed between each other, the face shapes are represented by a vertex-based deformation representation rather than Euclidean coordinates. The experimental results demonstrate that our method has better performance than existing methods on decomposing the identity and expression parts. Moreover, more natural expression transfer results can be achieved with our method than with existing methods.

Video Captioning

[11] CVPR 2019 new video captioning paper

Title: Spatio-Temporal Dynamics and Semantic Attribute Enriched Visual Encoding for Video Captioning

Authors: Nayyer Aafaq, Naveed Akhtar, Wei Liu, Syed Zulqarnain Gilani, Ajmal Mian

Paper: https://arxiv.org/abs/1902.10322

Abstract: Automatic generation of video captions is a fundamental challenge in computer vision. Recent techniques typically employ a combination of Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) for video captioning. These methods mainly focus on tailoring sequence learning through RNNs for better caption generation, whereas off-the-shelf visual features are borrowed from CNNs. We argue that careful designing of visual features for this task is equally important, and present a visual feature encoding technique to generate semantically rich captions using Gated Recurrent Units (GRUs). Our method embeds rich temporal dynamics in visual features by hierarchically applying Short Fourier Transform to CNN features of the whole video. It additionally derives high level semantics from an object detector to enrich the representation with spatial dynamics of the detected objects. The final representation is projected to a compact space and fed to a language model. By learning a relatively simple language model comprising two GRU layers, we establish new state-of-the-art on MSVD and MSR-VTT datasets for METEOR and ROUGE_L metrics.

Semantic Segmentation

[12] CVPR 2019 new weakly supervised semantic segmentation paper

Title: FickleNet: Weakly and Semi-supervised Semantic Image Segmentation using Stochastic Inference

Authors: Jungbeom Lee, Eunji Kim, Sungmin Lee, Jangho Lee, Sungroh Yoon

Paper: https://arxiv.org/abs/1902.10421

Abstract: The main obstacle to weakly supervised semantic image segmentation is the difficulty of obtaining pixel-level information from coarse image-level annotations. Most methods based on image-level annotations use localization maps obtained from the classifier, but these only focus on the small discriminative parts of objects and do not capture precise boundaries. FickleNet explores diverse combinations of locations on feature maps created by generic deep neural networks. It selects hidden units randomly and then uses them to obtain activation scores for image classification. FickleNet implicitly learns the coherence of each location in the feature maps, resulting in a localization map which identifies both discriminative and other parts of objects. The ensemble effects are obtained from a single network by selecting random hidden unit pairs, which means that a variety of localization maps are generated from a single image. Our approach does not require any additional training steps and only adds a simple layer to a standard convolutional neural network; nevertheless it outperforms recent comparable techniques on the Pascal VOC 2012 benchmark in both weakly and semi-supervised settings.

Video Processing

[13] CVPR 2019 new video processing paper

Title: Single-frame Regularization for Temporally Stable CNNs

Authors: Gabriel Eilertsen, Rafał K. Mantiuk, Jonas Unger

Paper: https://arxiv.org/abs/1902.10424

Abstract: Convolutional neural networks (CNNs) can model complicated non-linear relations between images. However, they are notoriously sensitive to small changes in the input. Most CNNs trained to describe image-to-image mappings generate temporally unstable results when applied to video sequences, leading to flickering artifacts and other inconsistencies over time. In order to use CNNs for video material, previous methods have relied on estimating dense frame-to-frame motion information (optical flow) in the training and/or the inference phase, or on exploring recurrent learning structures. We take a different approach to the problem, posing temporal stability as a regularization of the cost function. The regularization is formulated to account for different types of motion that can occur between frames, so that temporally stable CNNs can be trained without the need for video material or expensive motion estimation. The training can be performed as a fine-tuning operation, without architectural modifications of the CNN. Our evaluation shows that the training strategy leads to large improvements in temporal smoothness. Moreover, in situations where the quantity of training data is limited, the regularization can help in boosting the generalization performance to a much larger extent than what is possible with naïve augmentation strategies.
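
One way to read "temporal stability as a regularization of the cost function" is sketched below on 1D signals: a single frame is perturbed by a small synthetic motion (here a circular shift), and the model is penalized when processing the moved frame differs from moving the processed frame. This is an illustrative toy under that assumption, not the paper's formulation; `shift`, `stability_penalty`, `regularized_loss`, and the `weight` parameter are hypothetical names.

```python
def shift(signal, k):
    """Circularly shift a 1D signal right by k samples (stand-in for frame motion)."""
    signal = list(signal)
    k %= len(signal)
    return signal[-k:] + signal[:-k]


def stability_penalty(model, frame, k=1):
    """Mean squared gap between model(shift(frame)) and shift(model(frame))."""
    moved_then_processed = model(shift(frame, k))
    processed_then_moved = shift(model(frame), k)
    return sum(
        (a - b) ** 2 for a, b in zip(moved_then_processed, processed_then_moved)
    ) / len(frame)


def regularized_loss(model, frame, target, weight=0.5):
    """Reconstruction error on a single frame plus the weighted stability term."""
    output = model(frame)
    reconstruction = sum((o - t) ** 2 for o, t in zip(output, target)) / len(frame)
    return reconstruction + weight * stability_penalty(model, frame)
```

Only still frames are needed: the "next frame" is synthesized by the transformation, so no video data or optical flow enters the training loop.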

Multi-View Geometry

[14] CVPR 2019 new multi-view geometry paper

Title: Recurrent MVSNet for High-resolution Multi-view Stereo Depth Inference

Authors: Yao Yao, Zixin Luo, Shiwei Li, Tianwei Shen, Tian Fang, Long Quan

Paper: https://arxiv.org/abs/1902.10556

Code: https://github.com/YoYo000/MVSNet

Abstract: Deep learning has recently demonstrated its excellent performance for multi-view stereo (MVS). However, one major limitation of current learned MVS approaches is scalability: the memory-consuming cost volume regularization makes the learned MVS hard to apply to high-resolution scenes. In this paper, we introduce a scalable multi-view stereo framework based on the recurrent neural network. Instead of regularizing the entire 3D cost volume in one go, the proposed Recurrent Multi-view Stereo Network (R-MVSNet) sequentially regularizes the 2D cost maps along the depth direction via the gated recurrent unit (GRU). This dramatically reduces the memory consumption and makes high-resolution reconstruction feasible. We first show the state-of-the-art performance achieved by the proposed R-MVSNet on the recent MVS benchmarks. Then, we further demonstrate the scalability of the proposed method on several large-scale scenarios, where previous learned approaches often fail due to the memory constraint.

Video Classification

[15] CVPR 2019 new video classification paper

Title: Efficient Video Classification Using Fewer Frames

Authors: Shweta Bhardwaj, Mukundhan Srinivasan, Mitesh M. Khapra

Paper: https://arxiv.org/abs/1902.10640

Abstract: Recently, there has been a lot of interest in building compact models for video classification which have a small memory footprint (<1 GB). While these models are compact, they typically operate by repeated application of a small weight matrix to all the frames in a video. For example, recurrent neural network based methods compute a hidden state for every frame of the video using a recurrent weight matrix. Similarly, cluster-and-aggregate based methods such as NetVLAD have a learnable clustering matrix which is used to assign soft-clusters to every frame in the video. Since these models look at every frame in the video, the number of floating point operations (FLOPs) is still large even though the memory footprint is small. We focus on building compute-efficient video classification models which process fewer frames and hence require fewer FLOPs. Similar to memory efficient models, we use the idea of distillation, albeit in a different setting. Specifically, in our case, a compute-heavy teacher which looks at all the frames in the video is used to train a compute-efficient student which looks at only a small fraction of frames in the video. This is in contrast to a typical memory efficient Teacher-Student setting, wherein both the teacher and the student look at all the frames in the video but the student has fewer parameters. Our work thus complements the research on memory efficient video classification. We do an extensive evaluation with three types of models for video classification, viz. (i) recurrent models, (ii) cluster-and-aggregate models, and (iii) memory-efficient cluster-and-aggregate models, and show that in each of these cases, a see-it-all teacher can be used to train a compute efficient see-very-little student. We show that the proposed student network can reduce the inference time by 30% and the number of FLOPs by approximately 90% with a negligible drop in the performance.
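
The teacher-student setup described above can be sketched with toy stand-ins. In the example below, mean pooling over per-frame feature vectors plays the role of the video encoder (an assumption: the real models are trainable recurrent or cluster-and-aggregate networks), the teacher encodes every frame, the student encodes every k-th frame, and the distillation term is the squared distance between the two representations. All function names are hypothetical.

```python
def mean_pool(frames):
    """Toy encoder: average per-frame feature vectors into one video vector."""
    count = len(frames)
    return [sum(column) / count for column in zip(*frames)]


def subsample(frames, every_k):
    """Keep every k-th frame; this is all the student gets to see."""
    return frames[::every_k]


def distillation_loss(teacher_repr, student_repr):
    """Squared L2 distance between teacher and student video representations."""
    return sum((t - s) ** 2 for t, s in zip(teacher_repr, student_repr))


def frame_distillation_step(frames, every_k):
    """Compare a see-it-all teacher with a student that sees few frames."""
    teacher_repr = mean_pool(frames)                      # all frames
    student_repr = mean_pool(subsample(frames, every_k))  # a fraction of frames
    return distillation_loss(teacher_repr, student_repr)
```

In training, a term like this would be minimized with respect to the student's parameters so that its representation from few frames approaches the teacher's representation from all frames; the per-frame compute saved is roughly proportional to the fraction of frames skipped.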

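This article comes from the WeChat public account "CVer" (WeChat ID: CVerNews). Zhihu column: https://zhuanlan.zhihu.com/p/59987434

Special thanks to the CV_arXiv_Daily public account for providing the material. The papers covered in this post have also been synced to: https://github.com/zhengzhugithub/CV-arXiv-Daily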
