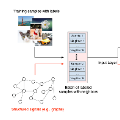

Neural sequence labeling (NSL) assigns a label to each input language token and covers a broad range of applications, such as named entity recognition (NER) and slot filling. However, the strong results achieved by traditional supervised approaches depend heavily on large amounts of human-annotated data, which may not be feasible in real-world scenarios due to data privacy and computation efficiency issues. This paper presents SeqUST, a novel uncertainty-aware self-training framework for NSL that addresses labeled data scarcity and makes effective use of unlabeled data. Specifically, we incorporate Monte Carlo (MC) dropout in a Bayesian neural network (BNN) to perform uncertainty estimation at the token level, and then select reliable language tokens from unlabeled data based on model confidence and certainty. A well-designed masked sequence labeling task with a noise-robust loss supports robust training and aims to suppress the problem of noisy pseudo labels. In addition, we develop a Gaussian-based consistency regularization technique to further improve model robustness on representations perturbed with Gaussian-distributed noise. This effectively alleviates the over-fitting caused by pseudo-labeled augmented data. Extensive experiments on six benchmarks demonstrate that SeqUST effectively improves the performance of self-training and consistently outperforms strong baselines by a large margin in low-resource scenarios.
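The token-level selection step described in the abstract can be illustrated with a minimal sketch. It is not the SeqUST implementation: the toy linear tagger, the use of predictive entropy as the uncertainty measure, the function names, and the thresholds `min_confidence` and `max_entropy` are all assumptions made for illustration, since the abstract does not specify them. The idea shown is only that dropout stays active over several forward passes, the per-token class probabilities are averaged, and a token is kept when the averaged prediction is both confident and low in uncertainty.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def stochastic_forward(features, weights, drop_rate, rng):
    """One forward pass of a toy linear tagger with dropout kept active.

    features: one feature vector per token.
    weights:  one weight row per class (hypothetical stand-in for a real tagger).
    """
    keep = 1.0 - drop_rate
    probs = []
    for token in features:
        # Inverted dropout: zero each feature with probability drop_rate,
        # rescale the surviving features by 1 / keep.
        dropped = [x / keep if rng.random() < keep else 0.0 for x in token]
        logits = [sum(w * x for w, x in zip(row, dropped)) for row in weights]
        probs.append(softmax(logits))
    return probs


def select_reliable_tokens(features, weights, num_passes=30, drop_rate=0.3,
                           min_confidence=0.8, max_entropy=0.5, seed=0):
    """Return (index, pseudo_label, confidence, entropy) for reliable tokens.

    Thresholds are illustrative assumptions, not values from the paper.
    """
    rng = random.Random(seed)
    passes = [stochastic_forward(features, weights, drop_rate, rng)
              for _ in range(num_passes)]
    num_classes = len(weights)
    selected = []
    for i in range(len(features)):
        # Average the class probabilities over all stochastic passes.
        mean = [sum(p[i][c] for p in passes) / num_passes
                for c in range(num_classes)]
        confidence = max(mean)
        # Predictive entropy of the averaged distribution (assumed measure).
        entropy = -sum(q * math.log(q) for q in mean if q > 0.0)
        if confidence >= min_confidence and entropy <= max_entropy:
            selected.append((i, mean.index(confidence), confidence, entropy))
    return selected


if __name__ == "__main__":
    weights = [[5.0] * 4, [-5.0] * 4]      # two classes, four features
    features = [[1.0] * 4, [0.0] * 4]      # token 0 informative, token 1 not
    for idx, label, conf, ent in select_reliable_tokens(features, weights):
        print(f"token {idx}: pseudo label {label}, "
              f"confidence {conf:.3f}, entropy {ent:.3f}")
```

In this toy setup the second token carries no signal, so its averaged prediction stays uniform and it is filtered out, while the first token is kept with a pseudo label. A real tagger would replace `stochastic_forward` with a network evaluated in training mode so that its dropout layers remain active.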
