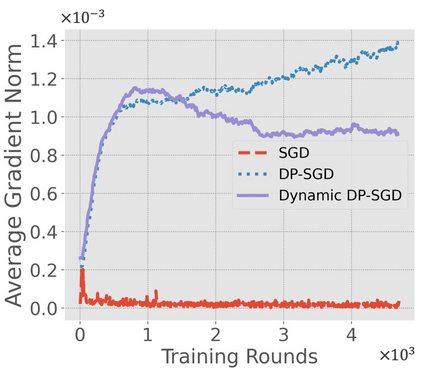

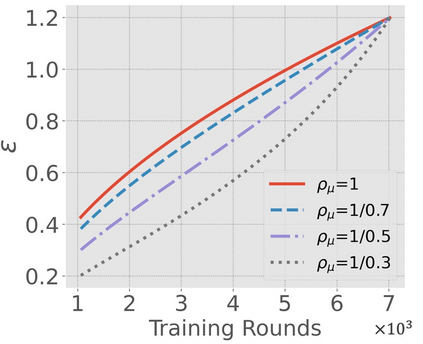

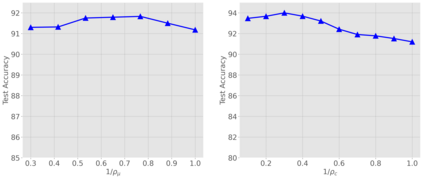

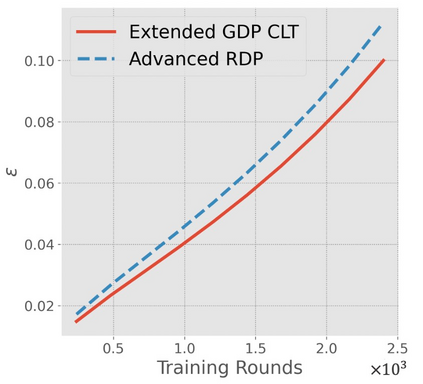

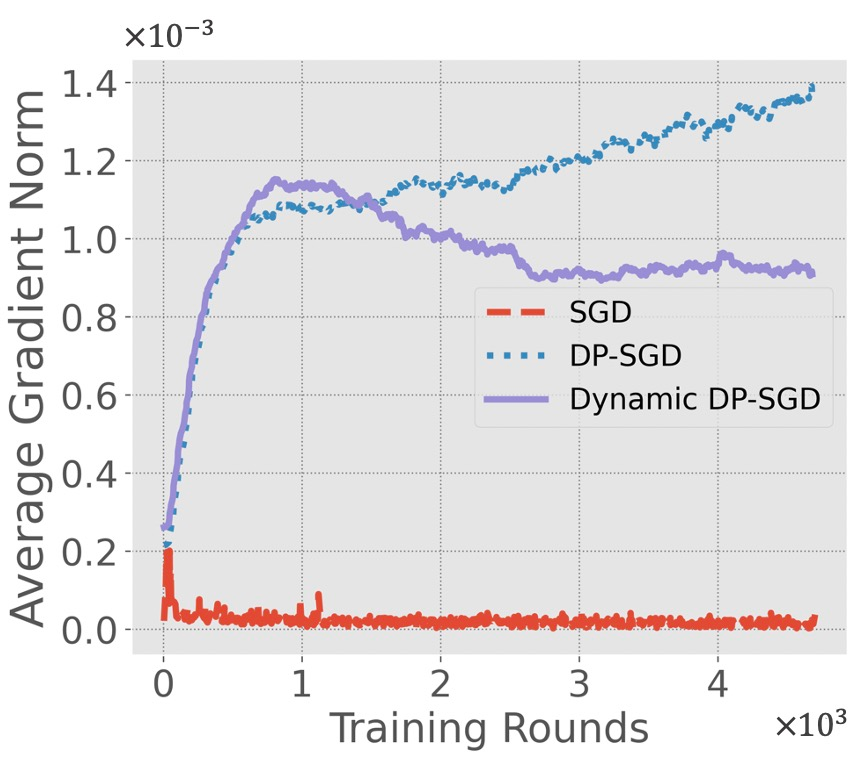

Differentially Private Stochastic Gradient Descent (DP-SGD) prevents training-data privacy breaches by adding noise to the clipped gradient during SGD training so that the differential privacy (DP) definition is satisfied. However, applying the same clipping operation and the same additive noise at every training step results in unstable updates and even a ramp-up period, which significantly reduces the model's accuracy. In this paper, we extend the Gaussian DP central limit theorem to calibrate the clipping value and the noise power separately for each individual step. This allows us to propose dynamic DP-SGD, which incurs a lower privacy cost than DP-SGD during updates until both reach the same target privacy budget at a target number of updates. In particular, dynamic DP-SGD improves model accuracy without sacrificing privacy by gradually lowering both the clipping value and the noise power while adhering to a total privacy budget constraint. Extensive experiments on a variety of deep learning tasks, including image classification, natural language processing, and federated learning, show that the proposed dynamic DP-SGD algorithm stabilizes updates and, as a result, significantly improves model accuracy over DP-SGD in the strong privacy protection regime.
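The per-step calibration idea can be illustrated with a minimal Python sketch. This is not the authors' implementation, and several parts of it are assumptions. It assumes Poisson subsampling with rate `p` and Gaussian noise with standard deviation σ_t·C_t at step t. For accounting, it uses a per-step generalization μ ≈ p·sqrt(Σ_t (e^{1/σ_t²} − 1)) of the CLT approximation μ ≈ p·sqrt(T(e^{1/σ²} − 1)) used for constant-noise DP-SGD. The exponential decay schedule (rate 0.999 for both σ_t and C_t) and the helper names `gdp_mu`, `decay`, `calibrate_sigma0` and `dp_sgd_step` are hypothetical choices made for illustration.

```python
import math
import random


def gdp_mu(sample_rate, noise_multipliers):
    """Approximate total mu-GDP of subsampled Gaussian steps via the CLT.

    Assumption: per-step generalization of mu = p * sqrt(T * (exp(1/sigma^2) - 1)),
    namely mu = p * sqrt(sum_t (exp(1/sigma_t^2) - 1)).
    """
    total = 0.0
    for s in noise_multipliers:
        x = 1.0 / (s * s)
        if x > 700.0:  # exp(x) would overflow a float; treat the cost as unbounded
            return math.inf
        total += math.expm1(x)
    return sample_rate * math.sqrt(total)


def decay(start, rate, steps):
    """Hypothetical exponential schedule: start * rate**t for t = 0..steps-1."""
    return [start * rate**t for t in range(steps)]


def calibrate_sigma0(target_mu, sample_rate, rho_sigma, steps, lo=0.1, hi=100.0):
    """Bisect for the initial noise multiplier so the schedule spends target_mu by `steps`."""
    for _ in range(100):  # mu decreases as sigma0 grows, so bisection applies
        mid = 0.5 * (lo + hi)
        if gdp_mu(sample_rate, decay(mid, rho_sigma, steps)) > target_mu:
            lo = mid  # too little noise: budget exceeded
        else:
            hi = mid  # within budget: try less noise
    return hi  # invariant: gdp_mu at hi never exceeds target_mu


def dp_sgd_step(params, per_example_grads, clip, sigma, lr, rng):
    """One DP-SGD update with a step-specific clipping bound C_t and noise multiplier sigma_t."""
    summed = [0.0] * len(params)
    for g in per_example_grads:
        norm = math.sqrt(sum(x * x for x in g))
        scale = min(1.0, clip / norm) if norm > 0 else 1.0  # clip to L2 norm <= clip
        for i, x in enumerate(g):
            summed[i] += x * scale
    n = len(per_example_grads)  # simplification: divide by the actual batch size
    # Gaussian noise with std sigma * clip, matched to the per-example sensitivity.
    noisy = [(s + rng.gauss(0.0, sigma * clip)) / n for s in summed]
    return [p - lr * g for p, g in zip(params, noisy)]


if __name__ == "__main__":
    p, T, target = 256 / 60000, 1000, 1.0
    sigma0 = calibrate_sigma0(target, p, 0.999, T)      # dynamic: noise multiplier decays
    sigma_static = calibrate_sigma0(target, p, 1.0, T)  # plain DP-SGD: constant noise
    dyn, static = decay(sigma0, 0.999, T), [sigma_static] * T
    print(f"mu at T/2: dynamic={gdp_mu(p, dyn[:T // 2]):.3f}, static={gdp_mu(p, static[:T // 2]):.3f}")
    print(f"mu at T:   dynamic={gdp_mu(p, dyn):.3f}, static={gdp_mu(p, static):.3f}")
    clips = decay(4.0, 0.999, T)  # hypothetical decaying clipping bound C_t
    rng = random.Random(0)
    params = dp_sgd_step([0.0, 0.0], [[3.0, 4.0], [0.1, -0.2]], clips[0], dyn[0], 0.1, rng)
    print(params)
```

Under this assumed accounting, the per-step cost e^{1/σ_t²} − 1 grows as σ_t decays. As a result, the cumulative μ of the dynamic schedule stays below that of constant-noise DP-SGD at every intermediate step and meets it only at step T. This mirrors the behavior the abstract describes.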
