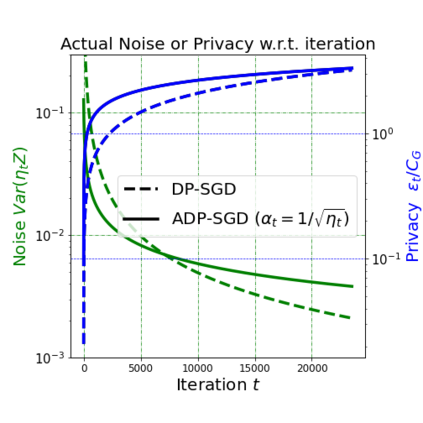

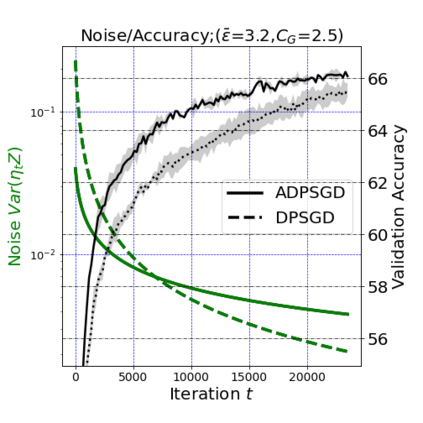

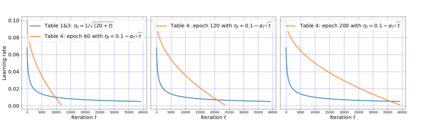

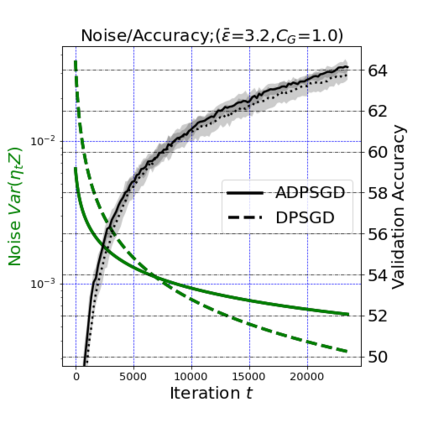

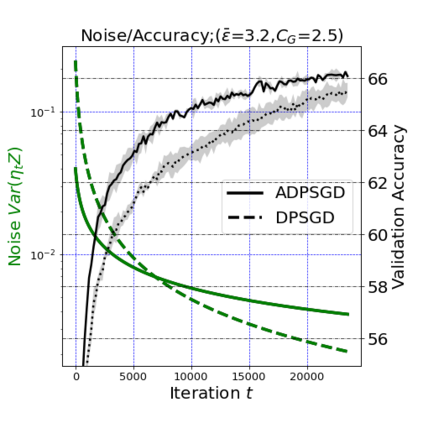

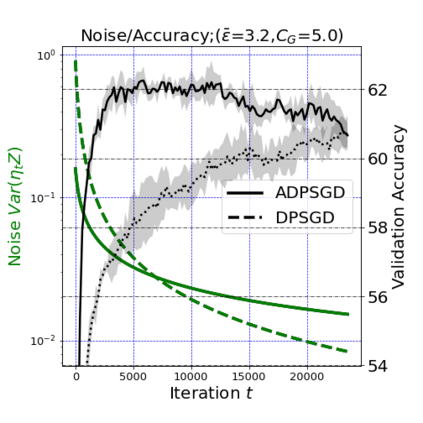

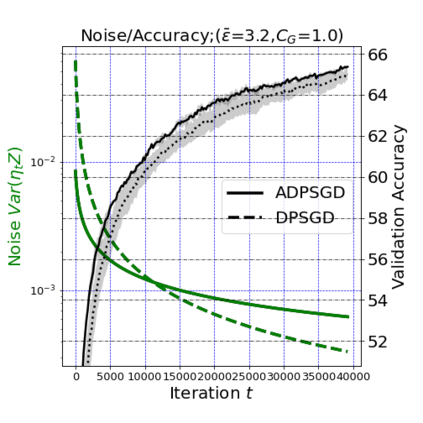

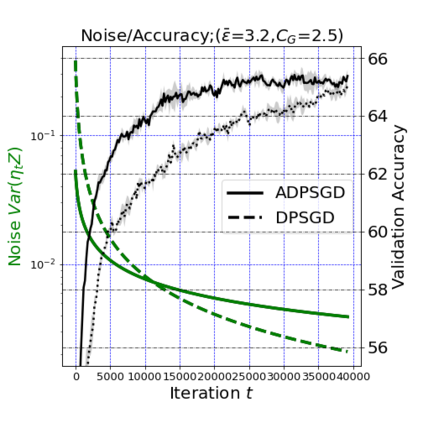

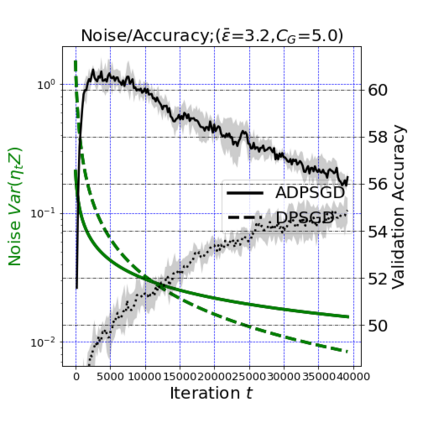

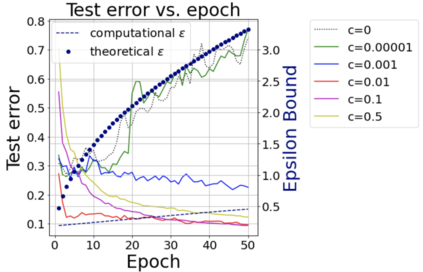

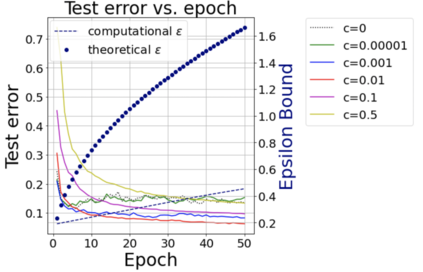

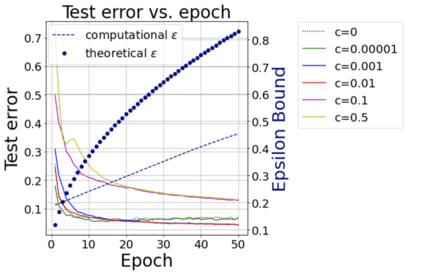

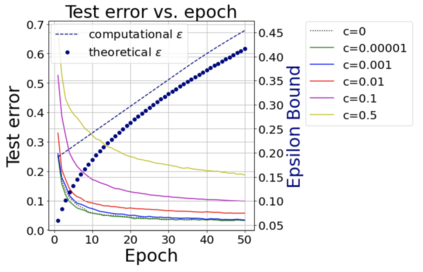

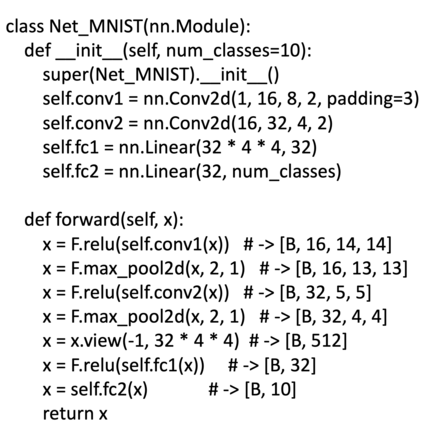

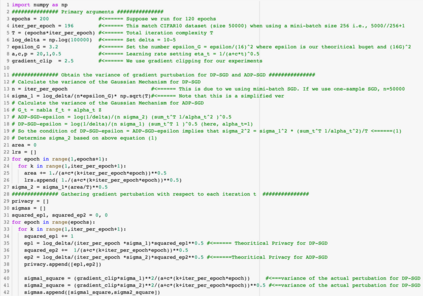

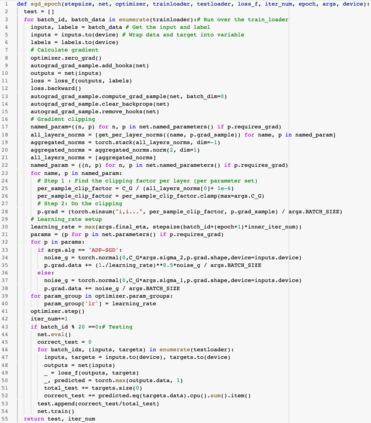

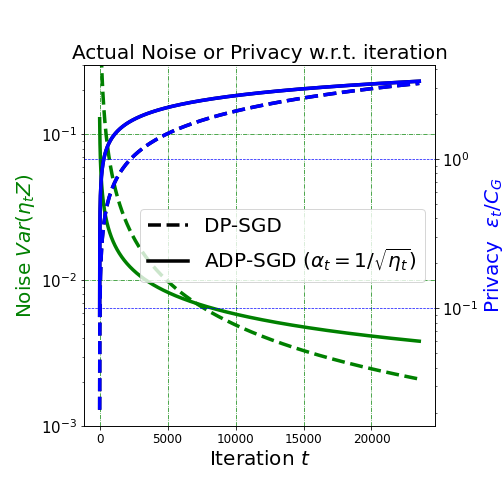

We propose an adaptive (stochastic) gradient perturbation method for differentially private empirical risk minimization. At each iteration, the random noise added to the gradient is optimally adapted to the stepsize; we name this process adaptive differentially private (ADP) learning. Given the same privacy budget, we prove that the ADP method considerably improves the utility guarantee compared to the standard differentially private method in which vanilla random noise is added. Our method is particularly useful for gradient-based algorithms with time-varying learning rates, including variants of AdaGrad (Duchi et al., 2011). We provide extensive numerical experiments to demonstrate the effectiveness of the proposed adaptive differentially private algorithm.
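To make the idea of gradient perturbation with iteration-dependent noise concrete, the following is a minimal sketch in plain Python. It is not the ADP algorithm itself: the abstract does not state how the noise is adapted to the stepsize, so the per-iteration noise scales are simply passed in by the caller. The names `noisy_gradient_descent`, `noise_scales`, `clip_norm`, and `squared_loss_grad` are hypothetical, and per-example gradient clipping is an assumed, commonly used way to bound sensitivity rather than something the abstract specifies. The sketch carries no privacy guarantee on its own; calibrating the noise to a privacy budget is outside its scope.

```python
import math
import random


def clip(vec, max_norm):
    """Scale vec so that its Euclidean norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm <= max_norm or norm == 0.0:
        return list(vec)
    return [v * max_norm / norm for v in vec]


def noisy_gradient_descent(grad_fn, data, w0, step_sizes, noise_scales,
                           clip_norm=1.0, seed=0):
    """Gradient perturbation: w <- w - eta_t * (mean clipped grad + noise_t).

    noise_scales[t] is the standard deviation of the Gaussian noise added
    at iteration t. How to choose it for a given privacy budget is NOT
    derived here; the caller supplies it (assumption of this sketch).
    """
    rng = random.Random(seed)
    w = list(w0)
    n = len(data)
    for eta, sigma in zip(step_sizes, noise_scales):
        # Average the per-example gradients, each clipped to clip_norm.
        avg = [0.0] * len(w)
        for example in data:
            g = clip(grad_fn(w, example), clip_norm)
            avg = [a + gi / n for a, gi in zip(avg, g)]
        # Perturb the averaged gradient with iteration-specific noise.
        noisy = [a + rng.gauss(0.0, sigma) for a in avg]
        # Take a step with the time-varying learning rate eta.
        w = [wi - eta * gi for wi, gi in zip(w, noisy)]
    return w


def squared_loss_grad(w, example):
    """Gradient of 0.5 * (w . x - y)^2 with respect to w."""
    x, y = example
    err = sum(wi * xi for wi, xi in zip(w, x)) - y
    return [err * xi for xi in x]
```

Under these assumptions, the standard method mentioned in the abstract corresponds to passing the same value at every position of `noise_scales`, while an adaptive scheme would pass a sequence that varies together with `step_sizes`.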
