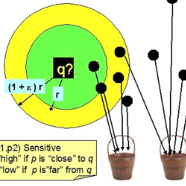

Data valuation is a growing research field that studies the influence of individual data points on machine learning (ML) models. Data Shapley, inspired by cooperative game theory and economics, is an effective method for data valuation. However, it is well known that the Shapley value (SV) can be computationally expensive. Fortunately, Jia et al. (2019) showed that for K-Nearest Neighbors (KNN) models, the computation of Data Shapley is surprisingly simple and efficient. In this note, we revisit the work of Jia et al. (2019) and propose a more natural and interpretable utility function that better reflects the performance of KNN models. We derive the corresponding procedure for computing the Data Shapley of KNN classifiers and regressors under the new utility functions. Our new approach, dubbed soft-label KNN-SV, achieves the same time complexity as the original method. We further provide an efficient approximation algorithm for soft-label KNN-SV based on locality-sensitive hashing (LSH). Our experimental results demonstrate that soft-label KNN-SV outperforms the original method on most datasets in the task of mislabeled data detection, making it a better baseline for future work on data valuation.
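
For context, the efficiency of the original method comes from a closed-form recursion over training points sorted by distance to a test point. The sketch below illustrates that baseline recursion for an unweighted KNN classifier as given by Jia et al. (2019); it is not the soft-label variant proposed in this note, whose utility function is not spelled out in the abstract. The function name `knn_shapley_single`, its arguments, and the Euclidean distance are assumptions made for illustration, and the sketch assumes at least `k` training points.

```python
import math


def knn_shapley_single(train_x, train_y, test_x, test_y, k):
    """Shapley value of each training point for ONE test point.

    Illustrative sketch of the hard-label recursion of Jia et al. (2019)
    for an unweighted KNN classifier. Names and the Euclidean metric are
    assumptions, not taken from the note itself.
    """
    n = len(train_x)
    if n < k:
        raise ValueError("this sketch assumes at least k training points")

    # Rank training points from nearest to farthest from the test point.
    order = sorted(range(n), key=lambda i: math.dist(train_x[i], test_x))
    # match[pos] is 1.0 if the point at that rank carries the test label.
    match = [1.0 if train_y[i] == test_y else 0.0 for i in order]

    values = [0.0] * n
    # Base case: the farthest point.
    values[order[-1]] = match[-1] / n
    # Recurse from the second-farthest point back to the nearest one.
    for pos in range(n - 2, -1, -1):
        rank = pos + 1  # 1-based rank of the current point
        values[order[pos]] = (
            values[order[pos + 1]]
            + (match[pos] - match[pos + 1]) / k * min(k, rank) / rank
        )
    return values


# Toy usage: two 1-D training points, k = 1.
vals = knn_shapley_single([(0.0,), (1.0,)], [1, 0], (0.1,), 1, k=1)
```

After the sort, the recursion is a single linear pass, so the cost per test point is dominated by the O(N log N) sort. In a mislabeled-data detection setting, values would typically be averaged over many test points and the lowest-valued training points inspected first.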
