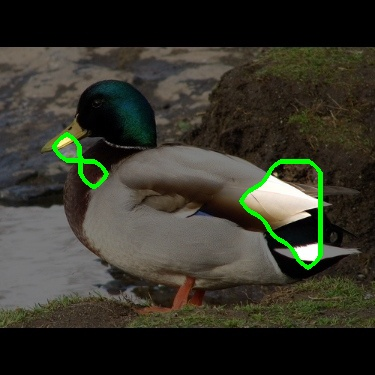

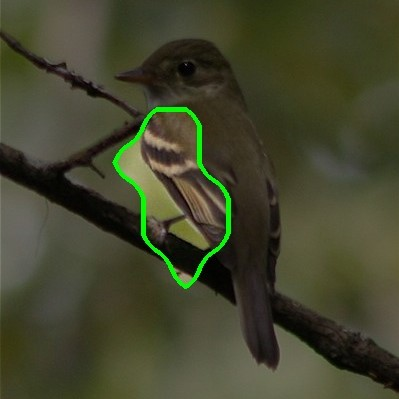

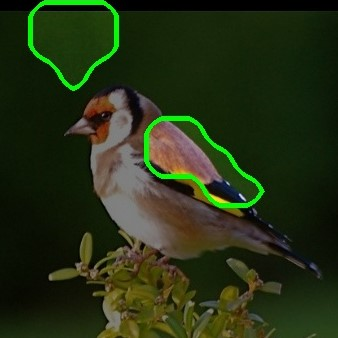

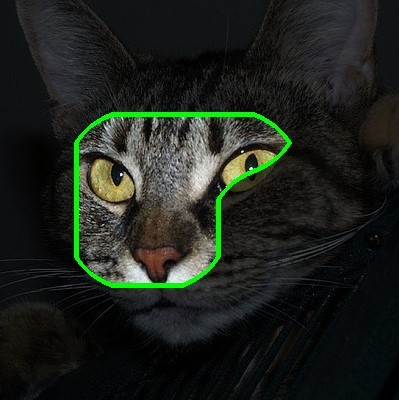

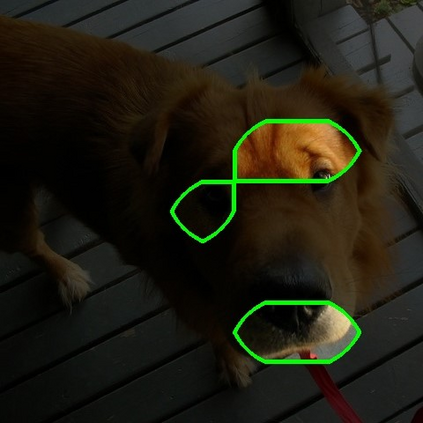

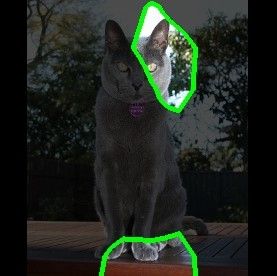

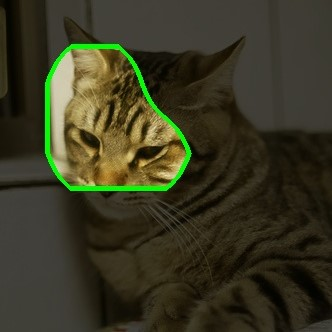

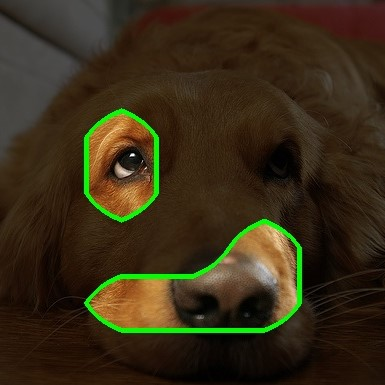

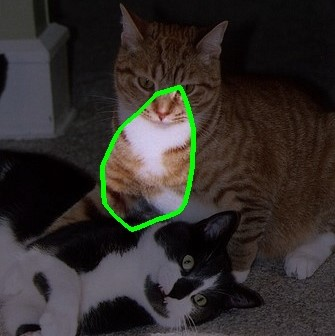

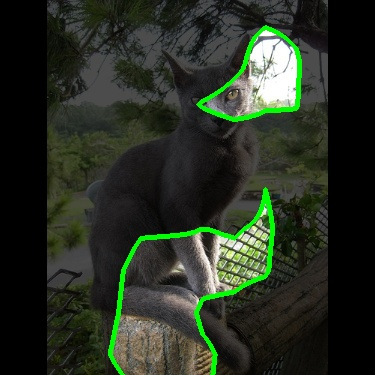

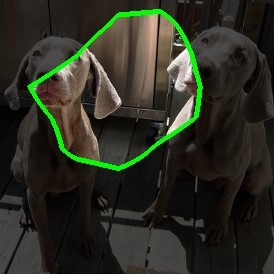

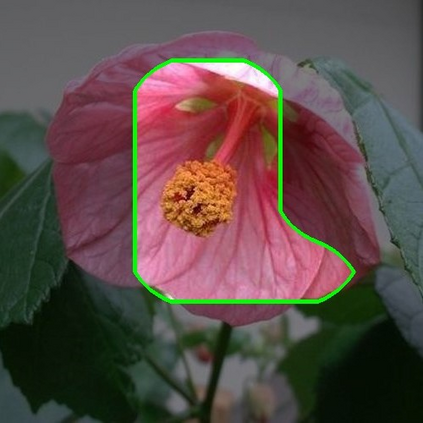

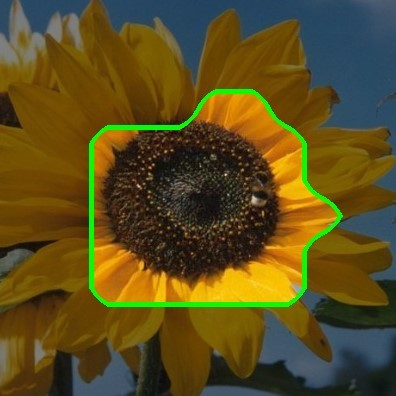

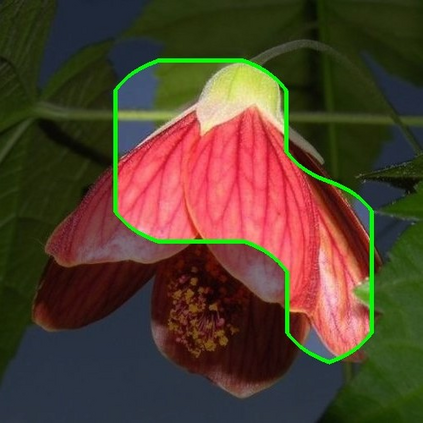

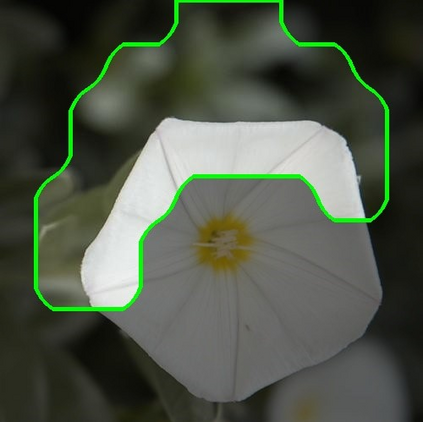

Learning semantically meaningful features is important for deep neural networks (DNNs) to win end-user trust. Attempts to generate post-hoc explanations fall short of gaining user confidence because they do not improve the interpretability of the feature representations that the models learn. In this work, we propose the Semantic Convolutional Neural Network (SemCNN), which has an additional Concept layer that learns associations between visual features and word phrases. SemCNN employs an objective function that optimizes both prediction accuracy and the semantic meaningfulness of the learned feature representations. Further, SemCNN makes its decisions as a weighted sum of the contributions of these features, so its decisions are fully interpretable. Experimental results on multiple benchmark datasets demonstrate that SemCNN can learn features with clear semantic meaning, along with their corresponding contributions to the model decision, without compromising prediction accuracy. Furthermore, the learned concepts are transferable and can be applied to new classes of objects that share similar concepts.
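The abstract names two mechanisms: a Concept layer whose units are associated with word phrases, and a decision computed as a weighted sum of per-concept contributions, trained with an objective that combines prediction accuracy and semantic meaningfulness. The sketch below illustrates how such a decomposable decision and joint objective could fit together. It is not SemCNN's implementation: the sigmoid concept units, the binary cross-entropy concept term, the trade-off weight `lam`, the example numbers, and all function names are hypothetical, because the abstract does not specify the form of the semantic loss.

```python
import math
from typing import List, Sequence

# Hypothetical sketch: names, loss forms, and numbers are illustrative assumptions.

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def concept_scores(features: Sequence[float],
                   concept_weights: Sequence[Sequence[float]]) -> List[float]:
    """Assumed Concept layer: one sigmoid unit per word-phrase concept."""
    return [sigmoid(sum(w * f for w, f in zip(row, features)))
            for row in concept_weights]

def class_logits(concepts: Sequence[float],
                 class_weights: Sequence[Sequence[float]],
                 bias: Sequence[float]) -> List[float]:
    """Each class logit is a weighted sum of concept activations plus a bias."""
    return [sum(w * c for w, c in zip(row, concepts)) + b
            for row, b in zip(class_weights, bias)]

def contributions(concepts: Sequence[float],
                  class_weights: Sequence[Sequence[float]],
                  cls: int) -> List[float]:
    """Per-concept contribution w_k * c_k to the logit of class `cls`."""
    return [w * c for w, c in zip(class_weights[cls], concepts)]

def softmax_cross_entropy(logits: Sequence[float], target: int) -> float:
    """Prediction-accuracy term, computed with a numerically stable log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[target]

def concept_bce(concepts: Sequence[float], concept_labels: Sequence[int],
                eps: float = 1e-12) -> float:
    """Assumed semantic term: mean binary cross-entropy against concept labels."""
    total = sum(y * math.log(c + eps) + (1 - y) * math.log(1 - c + eps)
                for c, y in zip(concepts, concept_labels))
    return -total / len(concepts)

def joint_loss(logits: Sequence[float], target: int,
               concepts: Sequence[float], concept_labels: Sequence[int],
               lam: float = 0.5) -> float:
    """Accuracy term plus lam times the semantic term (lam is hypothetical)."""
    return (softmax_cross_entropy(logits, target)
            + lam * concept_bce(concepts, concept_labels))

# Toy example: 3 visual features, 2 concepts, 2 classes.
features = [1.0, -0.5, 2.0]
concept_weights = [[0.8, 0.0, 0.3], [-0.2, 1.0, 0.0]]
class_weights = [[2.0, -1.0], [-0.5, 1.5]]
bias = [0.0, 0.1]

concepts = concept_scores(features, concept_weights)
logits = class_logits(concepts, class_weights, bias)
pred = max(range(len(logits)), key=logits.__getitem__)
print("predicted class:", pred)
print("per-concept contributions:", contributions(concepts, class_weights, pred))
print("joint loss:", joint_loss(logits, 0, concepts, [1, 0]))
```

Because each logit is linear in the concept activations, the per-concept terms returned by `contributions` add up (together with the bias) exactly to that class's logit. This linearity is what lets a decision of this form be read as an explicit sum of concept contributions.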

翻译:深神经网络要赢得终端用户的信任,就必须具备具有内在意义的学习特征,深神经网络要想赢得终端用户的信任,要想产生热后解释,则不能获得用户信心,因为它们不能改善模型所学特征表现的可解释性。在这项工作中,我们提议采用具有额外概念层的语义革命神经网络(SemCNN),以学习视觉特征和词句之间的联系。SemCNN使用一个客观功能,既能优化预测准确性,又能优化所学特征表现的语义意义。此外,SemCNN将决定作为这些特征促成完全可解释决定的贡献的加权总和。多个基准数据集的实验结果表明,SemCNN可以学习具有明确语义含义的特征及其对模型决定的相应贡献,同时又不损害预测的准确性。此外,这些学到的概念是可以转移的,可以应用于具有类似概念的新类型的物体。