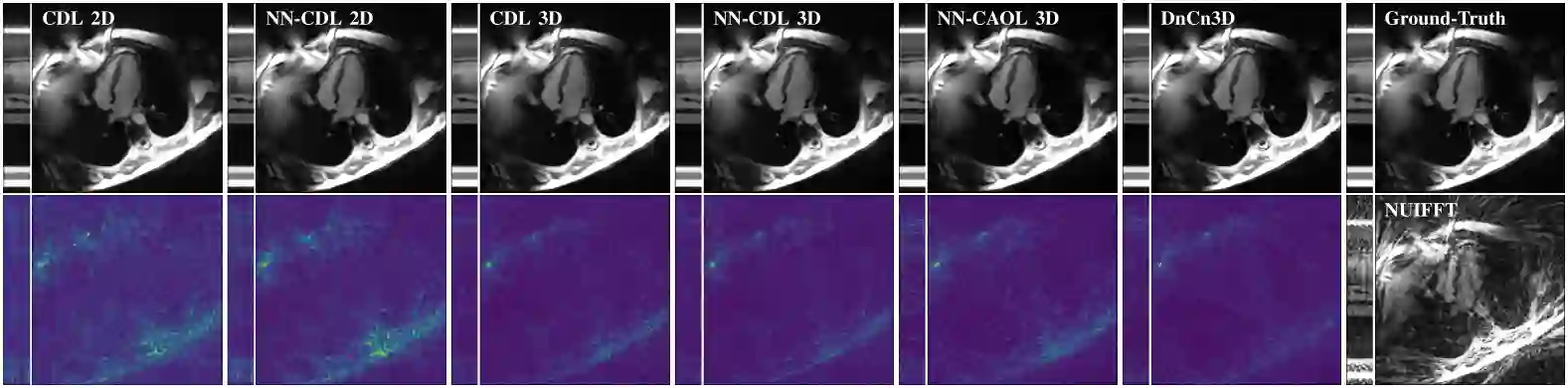

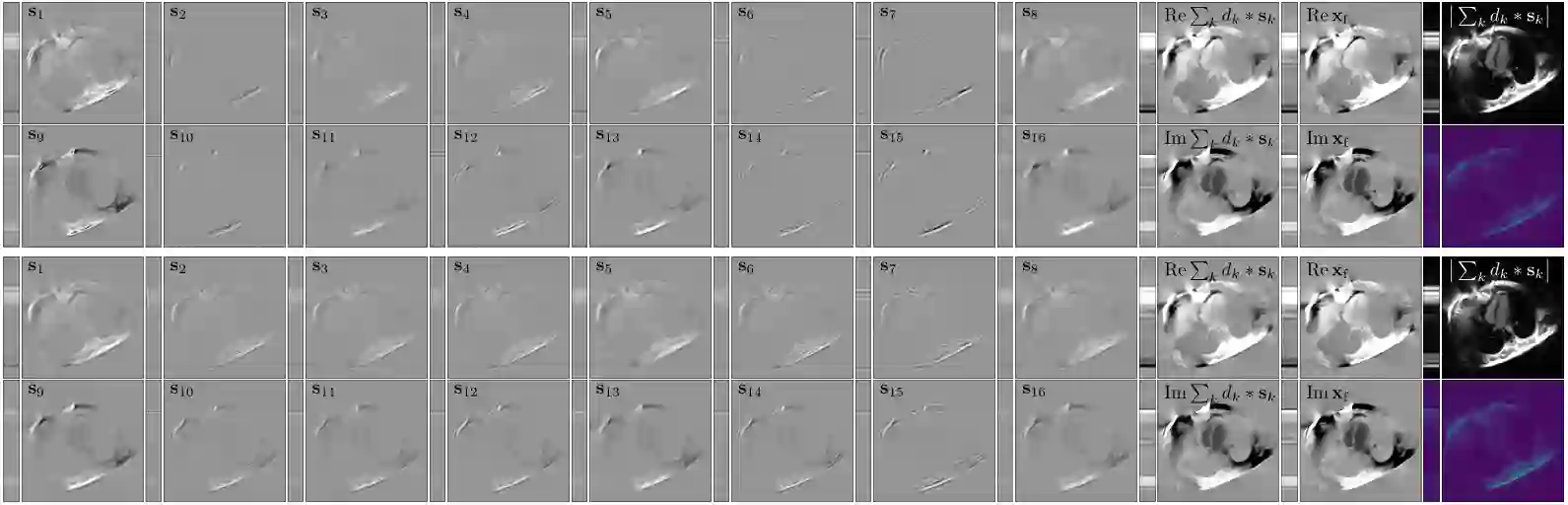

Sparsity-based methods have a long history in signal processing and have been successfully applied to various image reconstruction problems. The sparsifying transforms or dictionaries involved are typically either pre-trained using a model that reflects the assumed properties of the signals, or learned adaptively during the reconstruction, which yields so-called blind Compressed Sensing approaches. In both cases, however, the transforms are never explicitly trained in conjunction with the physical model that generates the signals. In addition, properly choosing the regularization parameters remains a challenging task. Another recently emerged training paradigm for regularization methods is to use iterative neural networks (INNs), also known as unrolled networks, which contain the physical model. In this work, we construct an INN that can be used as a supervised and physics-informed online convolutional dictionary learning algorithm. We evaluated the proposed approach by applying it to a realistic large-scale dynamic MR reconstruction problem and compared it to several other recently published works. We show that the proposed INN improves over two conventional model-agnostic training methods and yields results that are competitive with those of a deep INN. Further, it does not require choosing the regularization parameters and, in contrast to deep INNs, each network component is entirely interpretable.
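To give a rough idea of what an unrolled network containing the physical model can look like, the following toy sketch unrolls a fixed number of ISTA iterations for 1D convolutional sparse coding. It is not the architecture proposed in this work. The binary sampling mask standing in for the MR forward operator, the function names (`unrolled_ista`, `conv_circ`, `corr_circ`, `synthesize`, `step_size`), and the hand-set filters and `lam` are all assumptions made for illustration. In an INN, the filters and the regularization weight would be learned by supervised training instead of being fixed by hand.

```python
import math


def soft_threshold(v, t):
    """Elementwise proximal operator of t * |x| (shrinks values toward zero)."""
    return [math.copysign(max(abs(x) - t, 0.0), x) for x in v]


def conv_circ(s, d):
    """Circular convolution of a sparse code s with a filter d."""
    n = len(s)
    return [sum(d[k] * s[(i - k) % n] for k in range(len(d))) for i in range(n)]


def corr_circ(r, d):
    """Adjoint of conv_circ with respect to s (circular correlation)."""
    n = len(r)
    return [sum(d[k] * r[(i + k) % n] for k in range(len(d))) for i in range(n)]


def synthesize(codes, filters):
    """Signal model: x = sum_j d_j * s_j (one sparse code per filter)."""
    x = [0.0] * len(codes[0])
    for code, d in zip(codes, filters):
        for i, v in enumerate(conv_circ(code, d)):
            x[i] += v
    return x


def step_size(filters):
    """Conservative step 1/L, using L <= sum_j ||d_j||_1^2 for a 0/1 mask."""
    return 1.0 / sum(sum(abs(v) for v in d) ** 2 for d in filters)


def unrolled_ista(y, mask, filters, lam, num_iters):
    """Run num_iters ISTA steps for
    min_s 0.5 * ||mask * synthesize(s) - y||^2 + lam * ||s||_1.
    Each loop pass plays the role of one 'layer' of the unrolled network.
    """
    step = step_size(filters)
    codes = [[0.0] * len(y) for _ in filters]
    for _ in range(num_iters):
        x = synthesize(codes, filters)
        # Gradient of the data term w.r.t. x; this is where the (toy)
        # physical model enters every iteration.
        grad_x = [m * (m * xi - yi) for m, xi, yi in zip(mask, x, y)]
        codes = [
            soft_threshold(
                [c - step * g for c, g in zip(code, corr_circ(grad_x, d))],
                step * lam,
            )
            for code, d in zip(codes, filters)
        ]
    return synthesize(codes, filters), codes


# Toy usage with hypothetical filters and an undersampling mask.
filters = [[1.0, 0.5], [1.0, -1.0]]
truth = [0.0, 1.0, 0.5, 0.0, 0.0, 2.0, -2.0, 0.0]
mask = [1, 1, 0, 1, 1, 0, 1, 1]
y = [m * t for m, t in zip(mask, truth)]
x_hat, codes = unrolled_ista(y, mask, filters, lam=0.05, num_iters=50)
```

Because the forward operator appears inside every iteration, differentiating a reconstruction loss through the loop would give gradients for the filters and `lam` that account for the acquisition model, which is the sense in which such training is physics-informed.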
