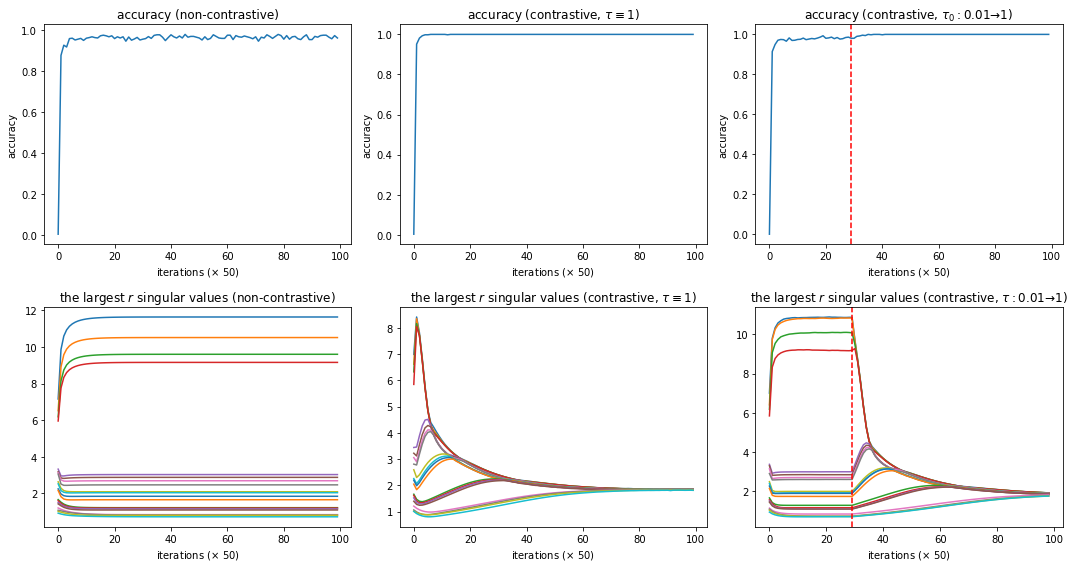

Recently, contrastive learning approaches (e.g., CLIP (Radford et al., 2021)) have achieved great success in multimodal learning. In these approaches, the model learns to minimize the distance between the representations of different views of the same data point (e.g., an image and its caption) while pushing the representations of different data points away from each other. From a theoretical perspective, however, it is unclear how contrastive learning efficiently learns representations from different views, especially when the data is not isotropic. In this work, we analyze the training dynamics of a simple multimodal contrastive learning model and show that contrastive pairs are important for the model to efficiently balance the learned representations. In particular, we show that positive pairs drive the model to align the representations at the cost of increasing the condition number, while negative pairs reduce the condition number, keeping the learned representations balanced.
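To make the roles of positive and negative pairs concrete, here is a minimal sketch of a CLIP-style symmetric contrastive (InfoNCE) loss. It assumes L2-normalized embeddings, cosine similarity, and a temperature of 0.1; these choices, and the function names `clip_style_loss` and `condition_number_2x2`, are illustrative assumptions, not the model analyzed in the paper. In the batch similarity matrix, the diagonal entries are the positive pairs and the off-diagonal entries are the negative pairs. A second helper computes the condition number (largest over smallest singular value) of a 2×2 linear map. This is the kind of quantity the abstract says positive pairs tend to increase and negative pairs tend to reduce, though the paper's precise definition may differ.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    n = math.sqrt(dot(v, v))
    return [a / n for a in v]


def clip_style_loss(img_emb, txt_emb, temperature=0.1):
    """Symmetric InfoNCE (assumed CLIP-style form, not the paper's exact model).

    Row i of img_emb and row i of txt_emb form a positive pair; every other
    (i, j) pairing in the batch is treated as a negative pair.
    """
    x = [normalize(v) for v in img_emb]
    y = [normalize(v) for v in txt_emb]
    n = len(x)
    # logits[i][j] = cos(x_i, y_j) / temperature
    logits = [[dot(x[i], y[j]) / temperature for j in range(n)] for i in range(n)]

    def cross_entropy_rows(mat):
        total = 0.0
        for i, row in enumerate(mat):
            m = max(row)  # subtract the max for numerical stability
            log_sum = m + math.log(sum(math.exp(s - m) for s in row))
            # -row[i] rewards the positive pair (alignment);
            # log_sum contains the negatives that push other pairs apart.
            total += log_sum - row[i]
        return total / len(mat)

    transposed = [list(col) for col in zip(*logits)]
    # Average the image-to-text and text-to-image directions.
    return 0.5 * (cross_entropy_rows(logits) + cross_entropy_rows(transposed))


def condition_number_2x2(w):
    """sigma_max / sigma_min of a 2x2 matrix, via the eigenvalues of W^T W."""
    (a, b), (c, d) = w
    p = a * a + c * c  # entries of the symmetric matrix W^T W
    q = a * b + c * d
    r = b * b + d * d
    disc = math.sqrt((p - r) ** 2 + 4 * q * q)
    s_max_sq = (p + r + disc) / 2
    s_min_sq = (p + r - disc) / 2
    if s_min_sq <= 0:
        return math.inf  # singular map: all variance collapses onto one direction
    return math.sqrt(s_max_sq / s_min_sq)
```

For example, `clip_style_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])` (matched views) is close to zero, while swapping the captions to `[[0, 1], [1, 0]]` gives a loss of about 10. `condition_number_2x2([[2, 0], [0, 1]])` returns 2.0: one direction is stretched twice as much as the other. A perfectly balanced map such as the identity has condition number 1.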