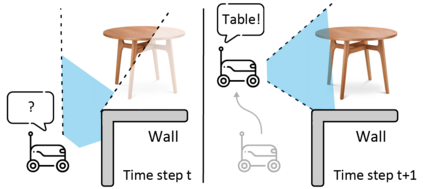

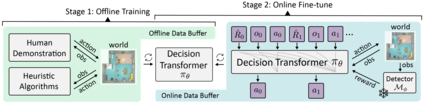

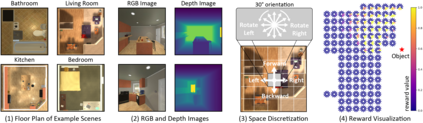

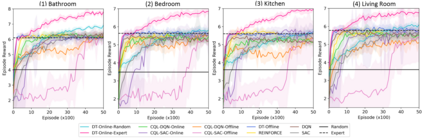

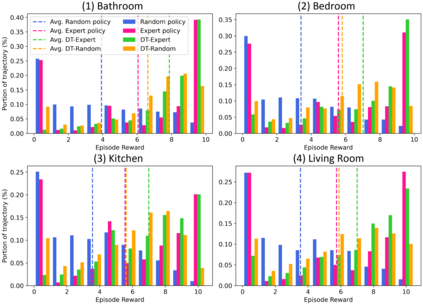

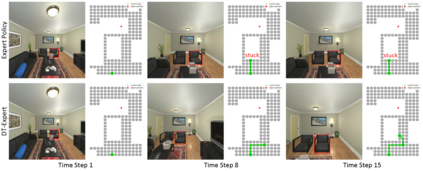

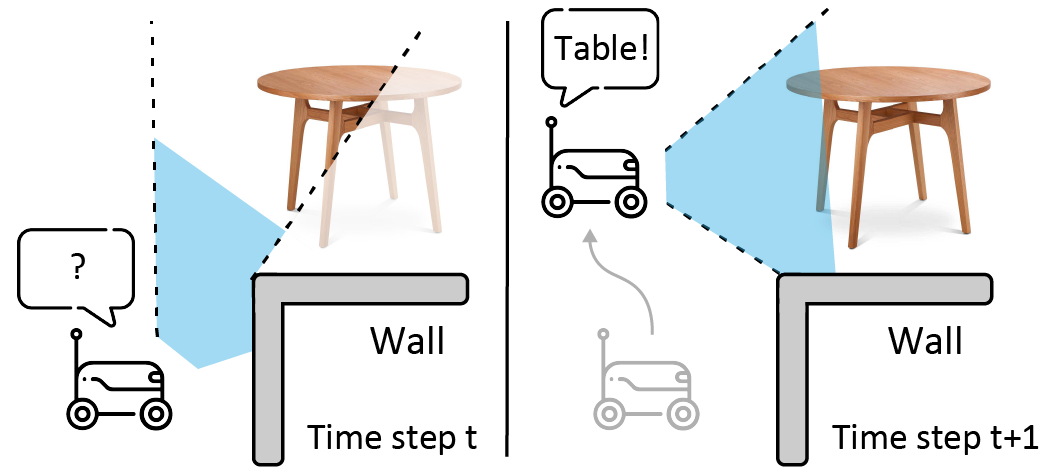

Active perception describes a broad class of techniques that couple planning and perception so that the robot moves in ways that give it more information about its environment. In most robotic systems, perception typically runs independently of motion planning. For example, traditional object detection is passive: it operates only on the images it receives. However, results can be improved if planning consumes detection signals and moves the robot to collect views that maximize detection quality. In this paper, we use reinforcement learning (RL) to control the robot so that it obtains images that maximize detection quality. Specifically, we propose a Decision Transformer with online fine-tuning, which first optimizes the policy on a pre-collected expert dataset and then improves the learned policy by exploring the environment for better solutions. We evaluate the proposed method on an interactive dataset collected from an indoor-scene simulator. Experimental results demonstrate that our method outperforms all baselines, including the expert policy and purely offline RL methods. We also provide detailed analyses of the reward distribution and the observation space.
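
The abstract does not spell out the training pipeline, so the following is only a minimal sketch of the data flow behind "offline pretraining, then online fine-tuning", under stated assumptions. It assumes the standard Decision Transformer convention of conditioning actions on the return-to-go, and an Online Decision Transformer-style buffer of whole trajectories that is seeded with the expert data and refreshed with online rollouts (FIFO eviction is an assumption). `TrajectoryBuffer`, `rollout`, `policy`, and `env_step` are hypothetical names; the transformer model and the detection-based reward are deliberately left out.

```python
import random
from collections import deque
from typing import Any, Callable, Deque, List, Sequence, Tuple

# (observations, actions, rewards); obs[t] is seen before action acts[t].
Trajectory = Tuple[List[Any], List[Any], List[float]]


def returns_to_go(rewards: Sequence[float], gamma: float = 1.0) -> List[float]:
    """R_t = sum over t' >= t of gamma**(t' - t) * r_t', computed backwards."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        rtg[t] = running
    return rtg


class TrajectoryBuffer:
    """FIFO store of whole trajectories (hypothetical helper).

    Seeded with the offline expert trajectories; once online rollouts fill
    it past `capacity`, the oldest trajectories are evicted first (assumption).
    """

    def __init__(self, offline: Sequence[Trajectory], capacity: int):
        self.trajs: Deque[Trajectory] = deque(offline, maxlen=capacity)

    def add_online(self, traj: Trajectory) -> None:
        self.trajs.append(traj)

    def sample_segment(self, context_len: int, rng: random.Random):
        """Pick a trajectory uniformly, then a window of up to `context_len` steps.

        Return-to-go is recomputed from the rewards actually received, so online
        rollouts are relabeled with the returns they achieved (hindsight).
        """
        obs, acts, rews = rng.choice(self.trajs)
        rtg = returns_to_go(rews)
        start = rng.randrange(max(1, len(acts) - context_len + 1))
        end = start + context_len
        return obs[start:end], acts[start:end], rtg[start:end]


def rollout(
    policy: Callable[[List[Any], List[Any], float], Any],
    env_step: Callable[[Any], Tuple[Any, float, bool]],
    first_obs: Any,
    target_return: float,
    horizon: int,
) -> Trajectory:
    """Collect one online trajectory conditioned on a shrinking target return."""
    obs: List[Any] = []
    acts: List[Any] = []
    rews: List[float] = []
    o, rtg = first_obs, target_return
    for _ in range(horizon):
        obs.append(o)
        a = policy(obs, acts, rtg)  # hypothetical DT policy: past obs/actions + current return-to-go
        o, r, done = env_step(a)    # hypothetical simulator step: (next_obs, reward, done)
        acts.append(a)
        rews.append(r)
        rtg -= r                    # remaining return the policy should still collect
        if done:
            break
    return obs, acts, rews
```

Under these assumptions, the offline phase trains on segments drawn from a buffer holding only expert trajectories, and the online phase alternates `rollout`, `add_online`, and further training on `sample_segment` batches. Relabeling return-to-go from achieved rewards means an online trajectory is a valid training target even when it fell short of the target return used to collect it.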

翻译:主动感知描述了一系列广泛的技术, 将机器人的规划和感知系统组合起来, 以便给机器人更多有关环境的信息。 在大多数机器人系统中, 感知通常与运动规划无关。 例如, 传统对象检测是被动的: 它只能根据收到的图像运作。 但是, 如果我们允许计划使用检测信号, 并移动机器人来收集能够最大限度地提高结果质量的视图, 我们有机会改进结果。 在本文中, 我们使用强化学习( RL) 方法来控制机器人, 以便获得能够最大限度地提高检测质量的图像。 具体地说, 我们提议使用一个带有在线微调的“ 决定变换器 ”, 它将首先以预先收集的专家数据集优化政策, 然后通过在环境中探索更好的解决方案来改进学习的政策。 我们评估了从室内情景模拟器中收集的互动数据集的功能。 实验结果显示, 我们的方法超越了所有基线, 包括专家政策和纯离线 RL 方法。 我们还提供了对奖赏分布和观测空间的详尽分析 。