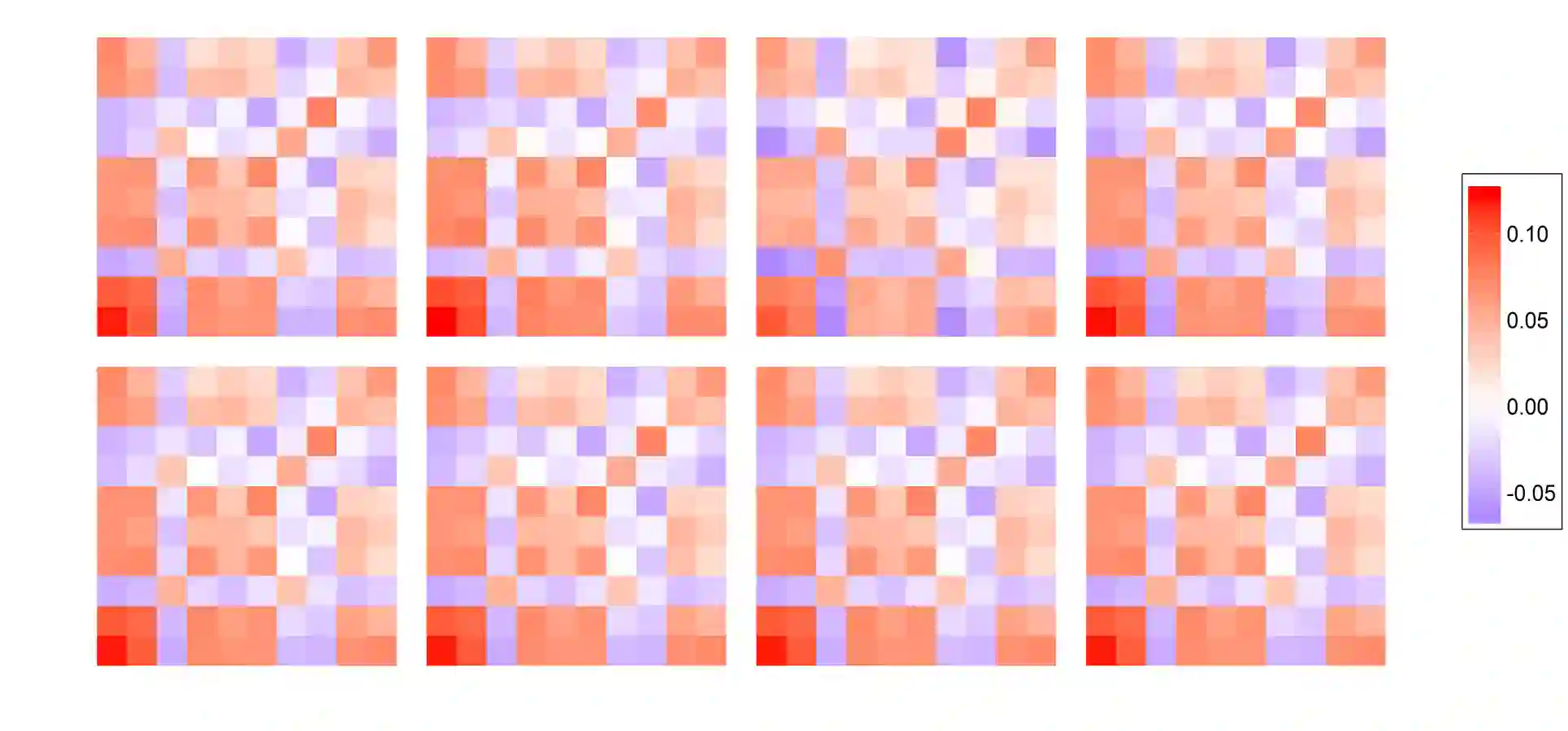

Principal component analysis (PCA) is a versatile tool for dimensionality reduction, with wide applications in statistics and machine learning. It is particularly useful for modeling data in high-dimensional settings where the number of variables $p$ is comparable to, or much larger than, the sample size $n$. Despite the extensive literature on this topic, researchers have focused on modeling static principal eigenvectors or subspaces, which is unsuitable for stochastic processes that are dynamic in nature. To characterize change over the whole course of high-dimensional data collection, we propose a unified framework that directly estimates dynamic principal subspaces spanned by the leading eigenvectors of covariance matrices. We formulate an optimization problem that combines local linear smoothing and a regularization penalty with an orthogonality constraint, and that can be solved effectively by the proximal gradient method for manifold optimization. We show that our method is suitable for high-dimensional data observed under both common and irregular designs. In addition, we investigate the theoretical properties of the estimators under $\ell_q$ sparsity ($0 \leq q \leq 1$). Extensive experiments demonstrate the effectiveness of the proposed method on both simulated and real data examples.
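To make the idea of a time-varying principal subspace concrete, the following is a minimal sketch and not the estimator proposed above. It swaps in a much simpler technique: a kernel-weighted (local constant) covariance estimate at a target time, followed by an ordinary eigen-decomposition. It uses no local linear smoothing, no sparsity penalty, and no manifold proximal gradient step. The function names, the Epanechnikov kernel, the bandwidth, and the toy data model are all assumptions made for illustration.

```python
import numpy as np


def kernel_weights(times, t0, bandwidth):
    """Epanechnikov kernel weights centred at t0, normalised to sum to 1."""
    u = (times - t0) / bandwidth
    w = np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u**2), 0.0)
    total = w.sum()
    if total == 0.0:
        raise ValueError("no observations within the bandwidth of t0")
    return w / total


def local_principal_subspace(X, times, t0, bandwidth, d):
    """Return a (p, d) orthonormal basis of the leading subspace near t0.

    X is an (n, p) array whose i-th row was observed at times[i].
    Simplification (assumption): local constant smoothing, no penalty.
    """
    w = kernel_weights(times, t0, bandwidth)
    mean_t0 = w @ X                          # kernel-weighted mean, shape (p,)
    Xc = X - mean_t0
    cov_t0 = (Xc * w[:, None]).T @ Xc        # kernel-weighted covariance, (p, p)
    _, eigvecs = np.linalg.eigh(cov_t0)      # eigenvalues in ascending order
    return eigvecs[:, ::-1][:, :d]           # d leading eigenvectors


# Hypothetical toy data: one leading direction that rotates from the
# first coordinate axis towards the second as time runs from 0 to 1.
rng = np.random.default_rng(0)
n, p = 400, 5
times = np.sort(rng.uniform(0.0, 1.0, n))
angles = 0.5 * np.pi * times
directions = np.zeros((n, p))
directions[:, 0] = np.cos(angles)
directions[:, 1] = np.sin(angles)
scores = rng.normal(0.0, 3.0, n)
X = scores[:, None] * directions + rng.normal(0.0, 0.3, (n, p))

V_early = local_principal_subspace(X, times, t0=0.1, bandwidth=0.1, d=1)
V_late = local_principal_subspace(X, times, t0=0.9, bandwidth=0.1, d=1)
```

In this toy setting the estimated direction at `t0=0.1` loads mostly on the first coordinate and the one at `t0=0.9` mostly on the second, which is the kind of drift a single static PCA fit would average away.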

