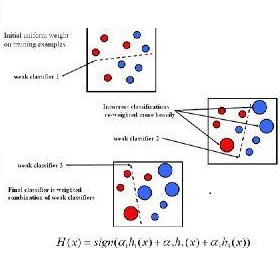

Annotating object ground truth in videos is vital for several downstream tasks in robot perception and machine learning, such as evaluating the performance of an object tracker or training an image-based object detector. The accuracy of the annotated instances of moving objects in every frame of a video is crucial. Achieving this accuracy through manual annotation is not only time-consuming and labor-intensive but also prone to a high error rate. State-of-the-art annotation methods rely on manually initializing the object bounding boxes only in the first frame and then use classical tracking methods, e.g., AdaBoost or kernelized correlation filters, to keep track of those bounding boxes. These trackers can quickly drift, thereby requiring tedious manual supervision. In this paper, we propose a new annotation method that leverages a combination of a learning-based detector (SSD) and a learning-based tracker (RE$^3$). With this combination, we significantly reduce annotation drift and, consequently, the required manual supervision. We validate our approach through annotation experiments that apply our proposed annotation method and existing baselines to a set of drone video frames. Source code and detailed instructions for running the annotation program can be found at https://github.com/robot-perception-group/smarter-labelme
