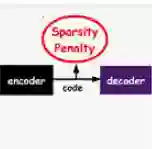

Embedding models for entities and relations are extremely useful for recovering missing facts in a knowledge base. Intuitively, a relation can be modeled by a matrix mapping entity vectors. However, relations reside on low-dimensional sub-manifolds in the parameter space of arbitrary matrices. One reason is that the composition of two relations $\boldsymbol{M}_1,\boldsymbol{M}_2$ may match a third $\boldsymbol{M}_3$ (e.g. the composition of the relations currency_of_country and country_of_film usually matches currency_of_film_budget), which imposes compositional constraints that the parameters must satisfy (i.e. $\boldsymbol{M}_1\cdot \boldsymbol{M}_2\approx \boldsymbol{M}_3$). In this paper we investigate a dimension reduction technique that trains relations jointly with an autoencoder, which is expected to better capture compositional constraints. We achieve state-of-the-art results on Knowledge Base Completion tasks with strongly improved Mean Rank, and show that joint training with an autoencoder leads to interpretable sparse codings of relations, helps discover compositional constraints, and benefits from compositional training. Our source code is released at github.com/tianran/glimvec.
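A minimal sketch of the compositional constraint, under stated assumptions. It assumes each relation acts on an entity vector by matrix-vector multiplication ($\boldsymbol{x} \mapsto \boldsymbol{M}\boldsymbol{x}$). Under that assumption, following country_of_film and then currency_of_country is the same as applying the single matrix $\boldsymbol{M}_1\boldsymbol{M}_2$. The matrices are random stand-ins, not learned embeddings, and names such as `m_country_of_film` and `composition_residual` are hypothetical. The `encode_decode` helper is an untrained, hypothetical autoencoder forward pass. It flattens a relation matrix, applies a ReLU encoder to get a non-negative code, and decodes back to a matrix. It only shows the shapes involved, not the architecture or training procedure used in the paper or in the glimvec repository.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def random_rect(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Random Gaussian matrix used as a stand-in for learned parameters."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Apply a relation matrix to an entity vector: x -> m x (assumed form)."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in m]


def composition_residual(m1: Matrix, m2: Matrix, m3: Matrix) -> float:
    """Squared Frobenius norm ||m1 @ m2 - m3||^2; small means m1 . m2 ~ m3."""
    prod = matmul(m1, m2)
    return sum((p - q) ** 2 for rp, rq in zip(prod, m3) for p, q in zip(rp, rq))


def encode_decode(m: Matrix, enc: Matrix, dec: Matrix) -> Tuple[Vector, Matrix]:
    """Hypothetical autoencoder pass: vec(m) -> ReLU(enc @ vec(m)) -> dec @ code."""
    flat = [v for row in m for v in row]
    code = [max(0.0, h) for h in matvec(enc, flat)]  # ReLU: non-negative, often sparse
    recon_flat = matvec(dec, code)
    d = len(m)
    recon = [recon_flat[i * d:(i + 1) * d] for i in range(d)]  # reshape back to d x d
    return code, recon


rng = random.Random(0)
d, k = 3, 4  # entity dimension and code size (illustrative choices)

m_country_of_film = random_rect(d, d, rng)      # maps a film vector to a country vector
m_currency_of_country = random_rect(d, d, rng)  # maps a country vector to a currency vector
# Idealized case: the direct relation equals the composed path exactly.
m_currency_of_film_budget = matmul(m_currency_of_country, m_country_of_film)

film = [rng.gauss(0.0, 1.0) for _ in range(d)]
two_hop = matvec(m_currency_of_country, matvec(m_country_of_film, film))
one_hop = matvec(m_currency_of_film_budget, film)

enc = random_rect(k, d * d, rng)  # untrained encoder weights
dec = random_rect(d * d, k, rng)  # untrained decoder weights
code, recon = encode_decode(m_currency_of_film_budget, enc, dec)

print(max(abs(a - b) for a, b in zip(two_hop, one_hop)) < 1e-9)  # True
print(composition_residual(m_currency_of_country, m_country_of_film,
                           m_currency_of_film_budget))  # 0.0
```

With learned embeddings the residual would be small but nonzero. Penalizing the reconstruction error of an autoencoder over all relation matrices is one way to push the parameters toward a shared low-dimensional structure, although the exact objective used in the paper is not reproduced here.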
