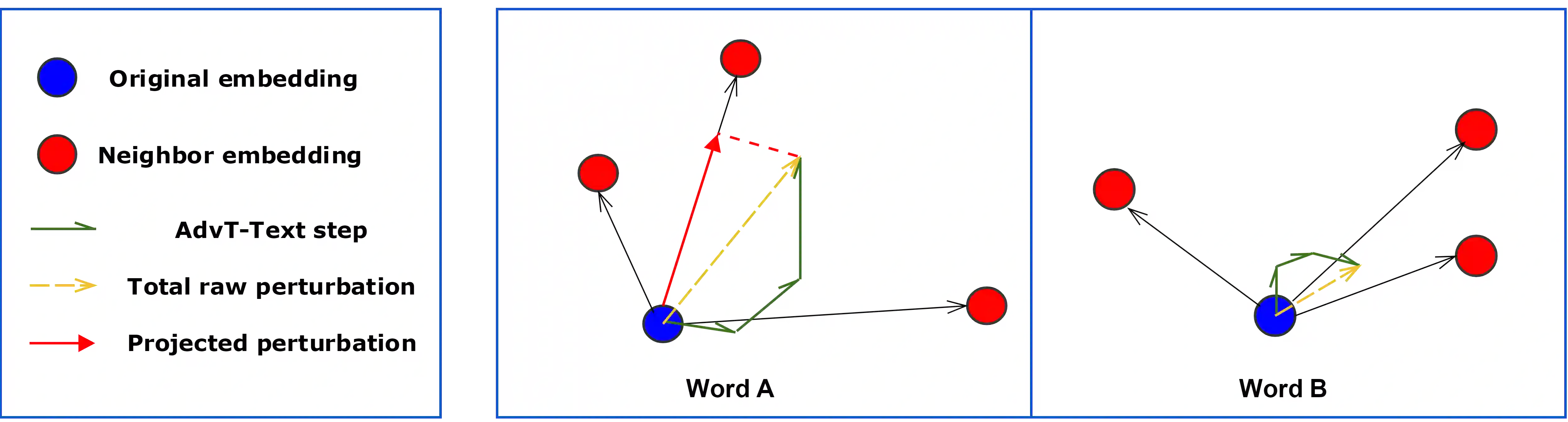

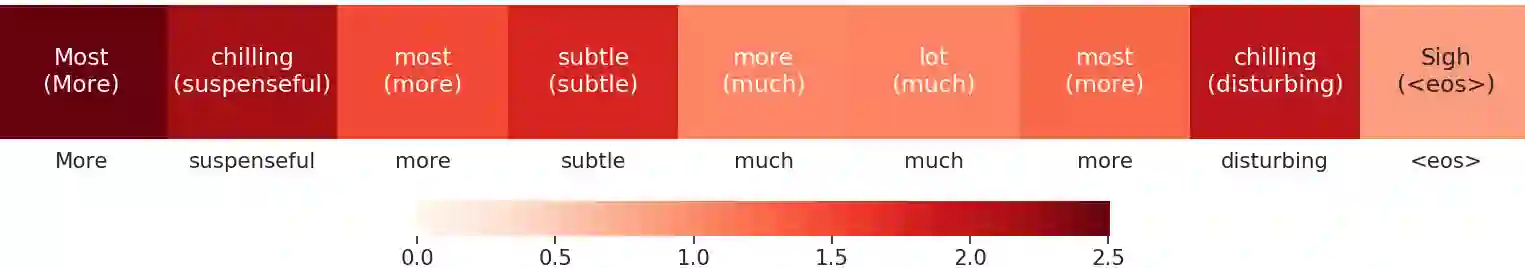

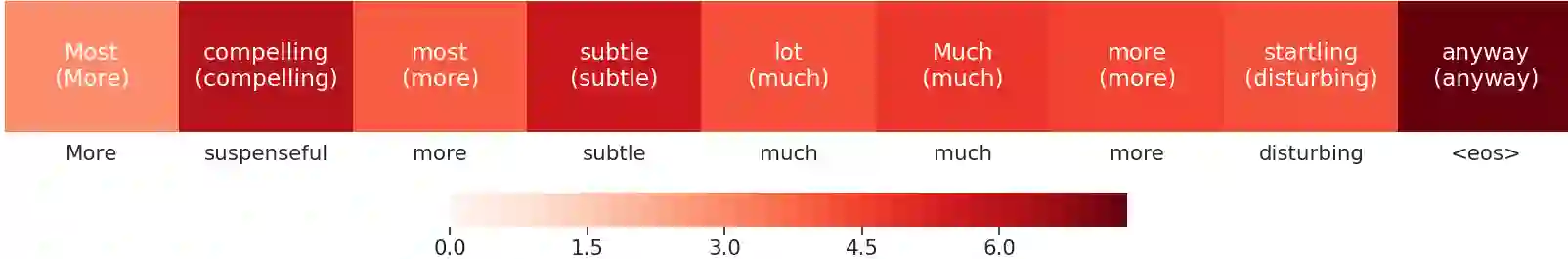

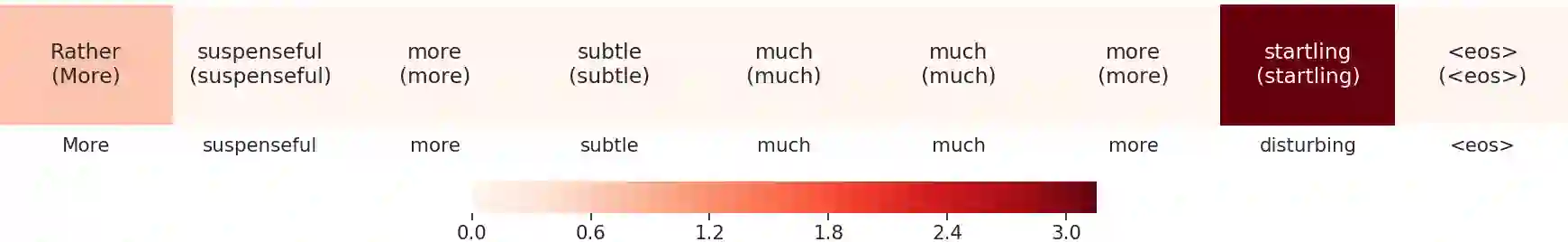

Generating high-quality and interpretable adversarial examples in the text domain is a much more daunting task than it is in the image domain. This is due partly to the discrete nature of text, partly to the problem of ensuring that the adversarial examples are still probable and interpretable, and partly to the problem of maintaining label invariance under input perturbations. In order to address some of these challenges, we introduce sparse projected gradient descent (SPGD), a new approach to crafting interpretable adversarial examples for text. SPGD imposes a directional regularization constraint on input perturbations by projecting them onto the directions to nearby word embeddings with highest cosine similarities. This constraint ensures that perturbations move each word embedding in an interpretable direction (i.e., towards another nearby word embedding). Moreover, SPGD imposes a sparsity constraint on perturbations at the sentence level by ignoring word-embedding perturbations whose norms are below a certain threshold. This constraint ensures that our method changes only a few words per sequence, leading to higher quality adversarial examples. Our experiments with the IMDB movie review dataset show that the proposed SPGD method improves adversarial example interpretability and likelihood (evaluated by average per-word perplexity) compared to state-of-the-art methods, while suffering little to no loss in training performance.
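The two constraints described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `spgd_perturbation`, its parameters, and the choice to compare against every other vocabulary word (rather than a precomputed neighbour list) are all hypothetical. It reads the directional constraint as "pick the direction toward another word embedding that has the highest cosine similarity with the raw gradient perturbation, then project onto it", and the sparsity constraint as "zero out per-word perturbations whose norm falls below a threshold".

```python
import numpy as np

def spgd_perturbation(embeddings, token_ids, grads, norm_threshold):
    """Project per-word perturbations onto word-to-word directions, then sparsify.

    embeddings:     (V, d) array of word embeddings (assumed, hypothetical input)
    token_ids:      length-T sequence of vocabulary indices for one sentence
    grads:          (T, d) array of raw perturbations, e.g. loss gradients
    norm_threshold: perturbations with a smaller norm are dropped
    """
    embeddings = np.asarray(embeddings, dtype=float)
    grads = np.asarray(grads, dtype=float)
    projected = np.zeros_like(grads)

    for t, tok in enumerate(token_ids):
        g = grads[t]
        g_norm = np.linalg.norm(g)
        if g_norm == 0.0:
            continue  # nothing to project

        # Unit directions from this word's embedding to every other word.
        diffs = embeddings - embeddings[tok]
        dist = np.linalg.norm(diffs, axis=1)
        invalid = dist == 0.0          # the word itself (or exact duplicates)
        dist[invalid] = np.inf         # avoids division by zero
        directions = diffs / dist[:, None]

        # Cosine similarity between the raw perturbation and each direction.
        cos = directions @ g / g_norm
        cos[invalid] = -np.inf
        best = int(np.argmax(cos))

        # Directional constraint: keep only the component along that direction.
        d = directions[best]
        projected[t] = (g @ d) * d

    # Sparsity constraint: ignore word-level perturbations with a small norm.
    small = np.linalg.norm(projected, axis=1) < norm_threshold
    projected[small] = 0.0
    return projected
```

With a toy vocabulary of three 2-D embeddings, a large gradient on the first token survives as a move toward a neighbouring word, while a tiny gradient on the second token is discarded, so only one word in the sentence is changed.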
