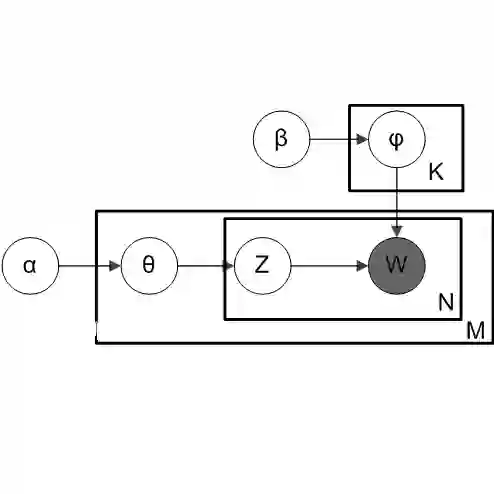

Latent Dirichlet allocation (LDA) is widely used for unsupervised topic modelling on sets of documents. The model uses no temporal information, yet there is often a relationship between the topics of consecutive tokens. In this paper, we present an extension to LDA that uses a Markov chain to model temporal information. We use this new model for acoustic unit discovery from speech. As input tokens, the model takes a discretised encoding of speech from a vector quantised (VQ) neural network with 512 codes. The goal is then to map these 512 VQ codes to 50 phone-like units (topics) that more closely resemble true phones. In contrast to the base LDA, which only considers how VQ codes co-occur within utterances (documents), the Markov chain LDA additionally captures how consecutive codes follow one another. This extension improves both cluster quality and phone segmentation results compared to the base LDA. Compared to a recent vector quantised neural network approach that also learns 50 units, the extended LDA model performs better in phone segmentation but worse in mutual information.
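To make the idea concrete, the following is a minimal sketch of how such a model might generate one utterance. The abstract does not give the model's equations, so everything beyond the sizes (512 codes, 50 units) is an assumption: the function `sample_utterance`, the parameter names `theta`, `transitions` and `emissions`, the uniform Dirichlet priors, and in particular the rule that combines the utterance-level unit proportions with the Markov transition row by an element-wise product. It illustrates the general notion of adding a Markov chain over topics to LDA and is not the paper's formulation. Inference, that is, recovering units from observed codes, is not shown.

```python
import numpy as np

NUM_CODES = 512  # VQ codebook size (from the abstract)
NUM_UNITS = 50   # phone-like units, i.e. topics (from the abstract)


def sample_utterance(length, theta, transitions, emissions, rng):
    """Sample a sequence of units and VQ codes for one utterance.

    theta:       (NUM_UNITS,) utterance-level unit proportions, as in LDA
    transitions: (NUM_UNITS, NUM_UNITS) row-stochastic unit-to-unit matrix
    emissions:   (NUM_UNITS, NUM_CODES) per-unit distribution over VQ codes
    All three names are hypothetical, chosen for this illustration.
    """
    units = np.empty(length, dtype=int)
    codes = np.empty(length, dtype=int)
    for t in range(length):
        if t == 0:
            # First token: only the utterance-level proportions apply.
            probs = theta
        else:
            # ASSUMPTION: weight the proportions by the transition row of
            # the previous unit, then renormalise. The paper may combine
            # the two sources of information differently.
            probs = theta * transitions[units[t - 1]]
            probs = probs / probs.sum()
        units[t] = rng.choice(NUM_UNITS, p=probs)
        codes[t] = rng.choice(NUM_CODES, p=emissions[units[t]])
    return units, codes


rng = np.random.default_rng(0)
# Random parameters drawn from uniform Dirichlet priors (an assumption).
theta = rng.dirichlet(np.ones(NUM_UNITS))
transitions = rng.dirichlet(np.ones(NUM_UNITS), size=NUM_UNITS)
emissions = rng.dirichlet(np.ones(NUM_CODES), size=NUM_UNITS)

units, codes = sample_utterance(100, theta, transitions, emissions, rng)
```

Setting every row of `transitions` to the uniform distribution makes the product rule reduce to sampling from `theta` alone, which corresponds to the base LDA behaviour of ignoring token order.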
