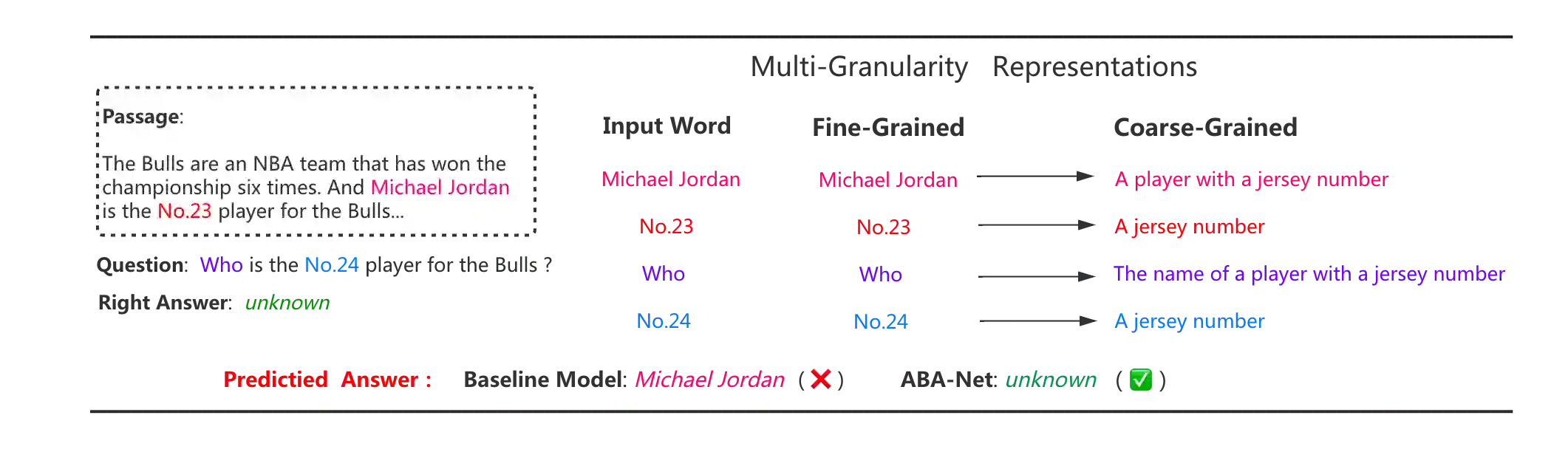

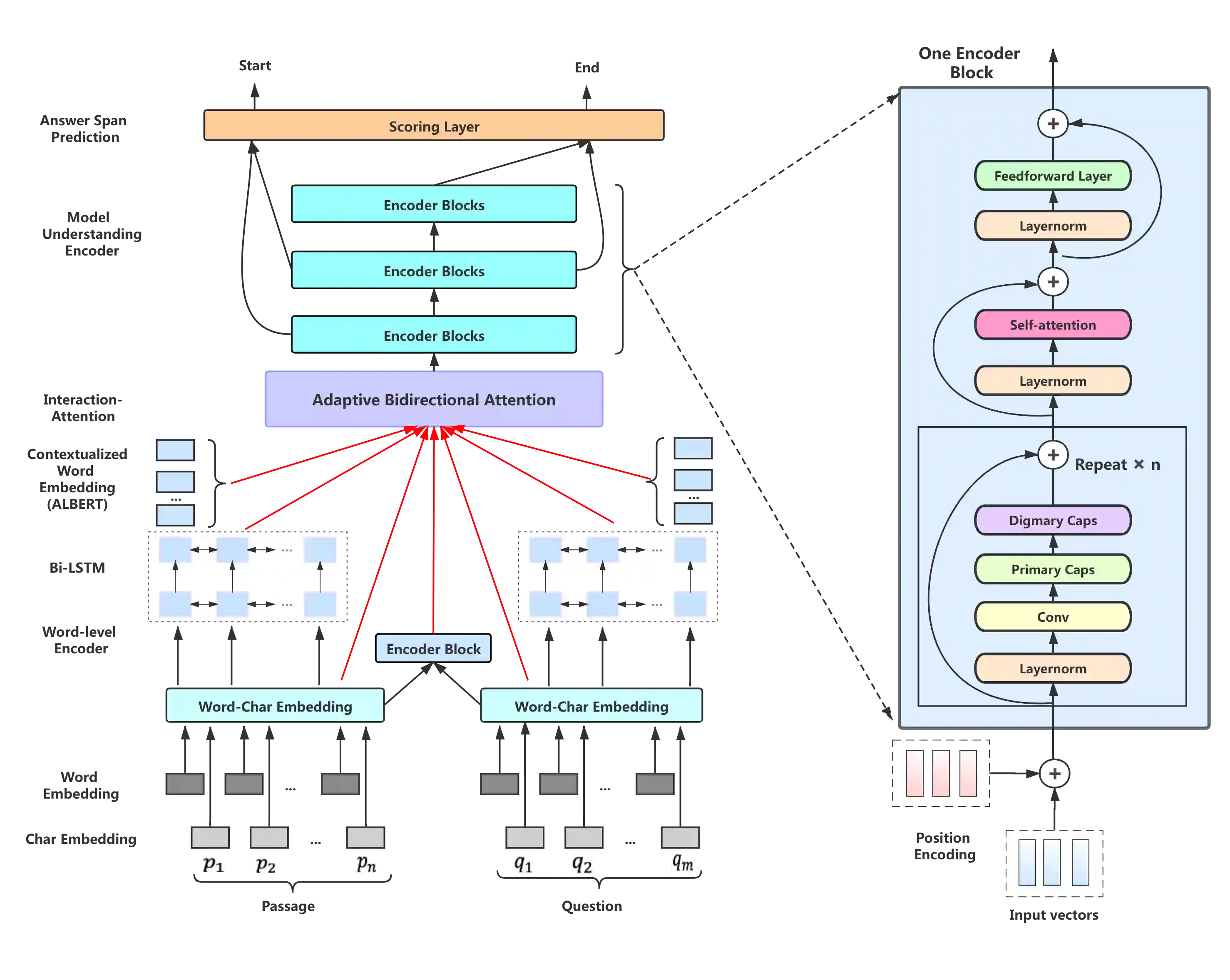

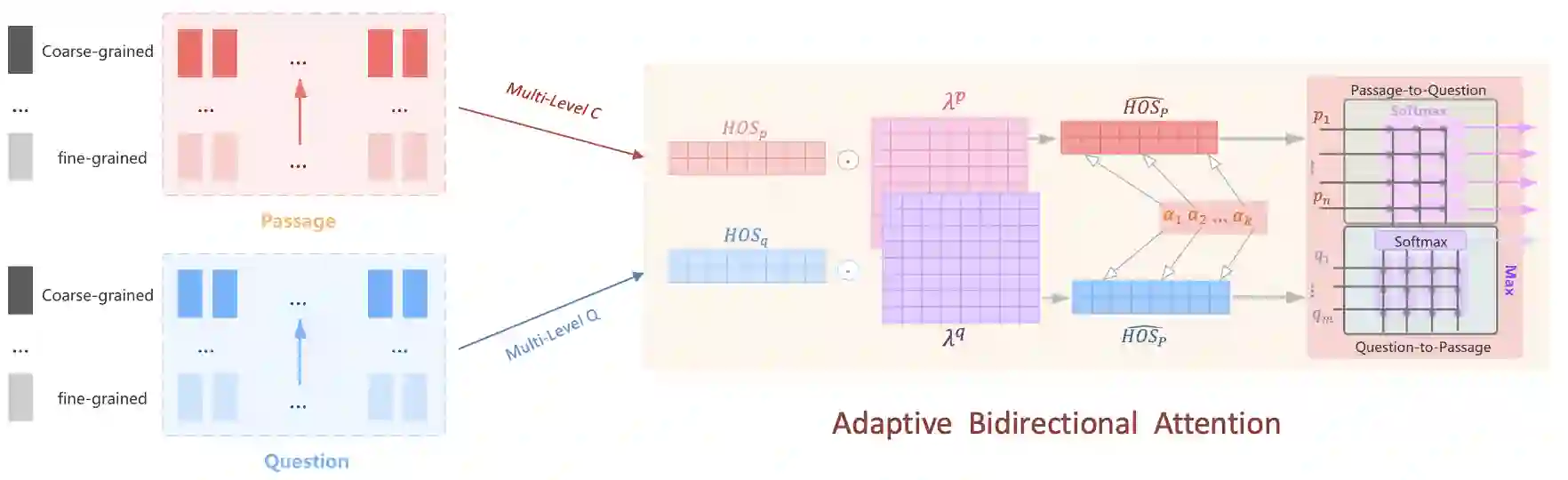

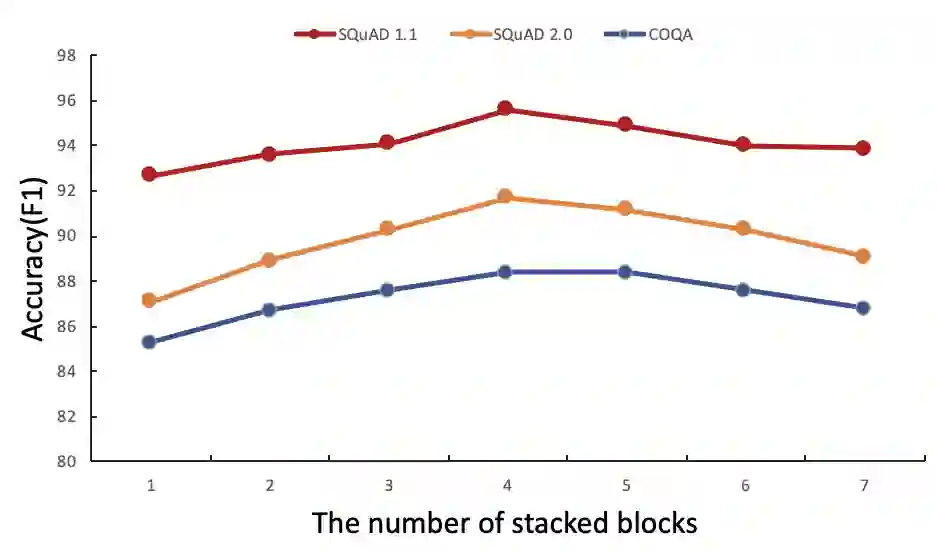

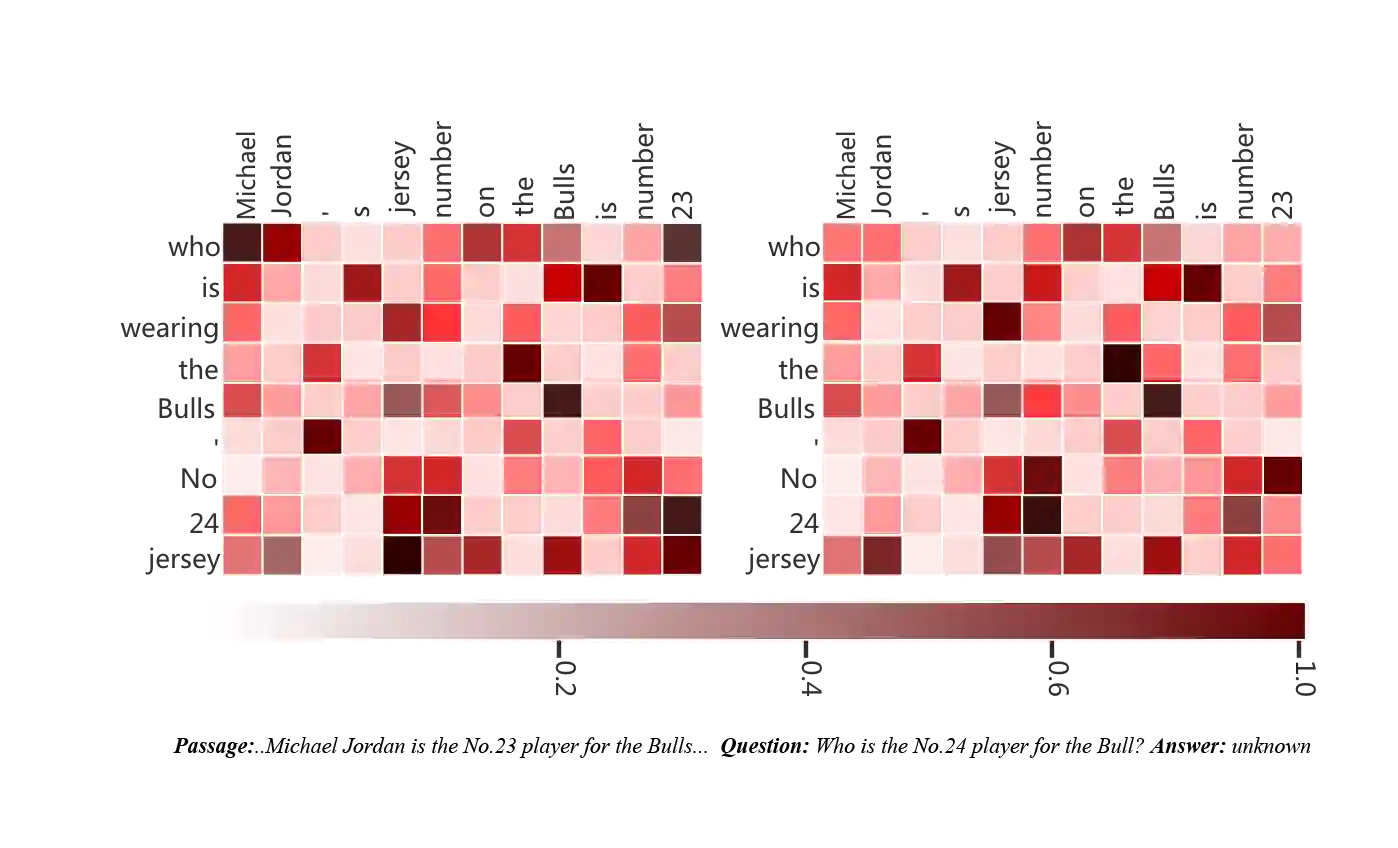

Recently, the attention-enhanced multi-layer encoder, such as Transformer, has been extensively studied in Machine Reading Comprehension (MRC). To predict the answer, it is common practice to employ a predictor to draw information only from the final encoder layer which generates the coarse-grained representations of the source sequences, i.e., passage and question. The analysis shows that the representation of source sequence becomes more coarse-grained from finegrained as the encoding layer increases. It is generally believed that with the growing number of layers in deep neural networks, the encoding process will gather relevant information for each location increasingly, resulting in more coarse-grained representations, which adds the likelihood of similarity to other locations (referring to homogeneity). Such phenomenon will mislead the model to make wrong judgement and degrade the performance. In this paper, we argue that it would be better if the predictor could exploit representations of different granularity from the encoder, providing different views of the source sequences, such that the expressive power of the model could be fully utilized. To this end, we propose a novel approach called Adaptive Bidirectional Attention-Capsule Network (ABA-Net), which adaptively exploits the source representations of different levels to the predictor. Furthermore, due to the better representations are at the core for boosting MRC performance, the capsule network and self-attention module are carefully designed as the building blocks of our encoders, which provides the capability to explore the local and global representations, respectively. Experimental results on three benchmark datasets, i.e., SQuAD 1.0, SQuAD 2.0 and COQA, demonstrate the effectiveness of our approach. In particular, we set the new state-of-the-art performance on the SQuAD 1.0 dataset

翻译:最近,在Machine Read Convention(MRC)中,人们广泛研究了诸如变形器等关注增强的多层编码器。为了预测答案,通常的做法是使用预测器从最后的编码器层中提取信息,从而产生源序列粗化的表达方式,即通道和问题。分析表明,源序列的表示方式随着编码层的增加而从细微变色中变得更加粗糙。人们普遍认为,随着深层神经网络中层的增加,编码过程将越来越多地为每个地点收集相关信息,从而导致更粗化的表示方式,从而增加与其他地点相似的可能性(指同质)。 这种现象将误导模型,做出错误的判断,并降低性能。 在本文中,我们提出,如果预测器能够利用从编码层中不同颗粒的表达方式,提供对源序列的不同看法,从而可以越来越多地为每个地点收集相关的信息。 编码过程将使得模型的表达力变得更清晰, 质量的表示方式将增加与其他地点相似性能(指同质) 。