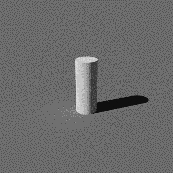

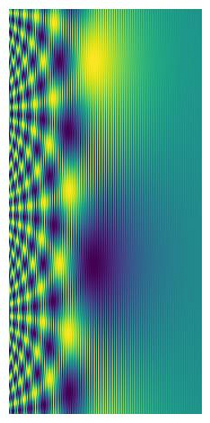

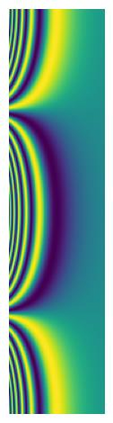

In this work, we focus on outdoor lighting estimation by aggregating individual noisy estimates from images, exploiting the rich image information from wide-angle cameras and/or temporal image sequences. Photographs inherently encode information about the scene's lighting in the form of shading and shadows. Recovering the lighting is an inverse rendering problem and, as such, ill-posed. Recent work based on deep neural networks has shown promising results for single-image lighting estimation, but lacks robustness. We tackle this problem by combining lighting estimates from several image views sampled in the angular and temporal domains of an image sequence. For this task, we introduce a transformer architecture that is trained end-to-end, without the statistical post-processing required by previous work. We further propose a positional encoding that takes camera calibration and ego-motion estimation into account, so that the individual estimates are globally registered when computing attention between visual words. We show that our method improves lighting estimation while requiring fewer hyperparameters than the state of the art.
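To make the idea of globally registering per-view estimates concrete, the following is a minimal sketch and not the method described above: it rotates each camera-frame sun-direction estimate into a shared world frame and then takes a plain average, which stands in for the learned, attention-based aggregation. The helper names (`yaw_rotation`, `aggregate_sun_direction`), the yaw-only pose model, and the representation of lighting as a single unit direction are all assumptions made for illustration.

```python
import math

def rotate(R, v):
    """Apply a 3x3 rotation matrix (nested lists) to a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]

def normalize(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [c / n for c in v]

def yaw_rotation(angle_rad):
    """Camera-to-world rotation about the vertical (z) axis.

    Hypothetical pose model: a real system would use the full rotation
    obtained from camera calibration and ego-motion estimation.
    """
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def aggregate_sun_direction(estimates, cam_to_world):
    """Register per-view direction estimates in the world frame and average.

    Plain averaging is a stand-in for the learned aggregation; it is used
    here only to show why a common reference frame is needed.
    """
    acc = [0.0, 0.0, 0.0]
    for d_cam, R in zip(estimates, cam_to_world):
        d_world = rotate(R, normalize(d_cam))
        acc = [a + b for a, b in zip(acc, d_world)]
    return normalize(acc)

if __name__ == "__main__":
    true_dir = normalize([1.0, 2.0, 3.0])
    angles = [0.0, 0.5, 1.0, 2.0]
    # Simulate noise-free camera-frame estimates by applying the inverse pose.
    estimates = [rotate(yaw_rotation(-a), true_dir) for a in angles]
    poses = [yaw_rotation(a) for a in angles]
    print(aggregate_sun_direction(estimates, poses))
```

Without the rotation step, the four camera-frame vectors would point in different directions and their average would be meaningless. This is why the pose information has to enter the aggregation somewhere, whether through explicit registration as in this sketch or through a positional encoding.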
