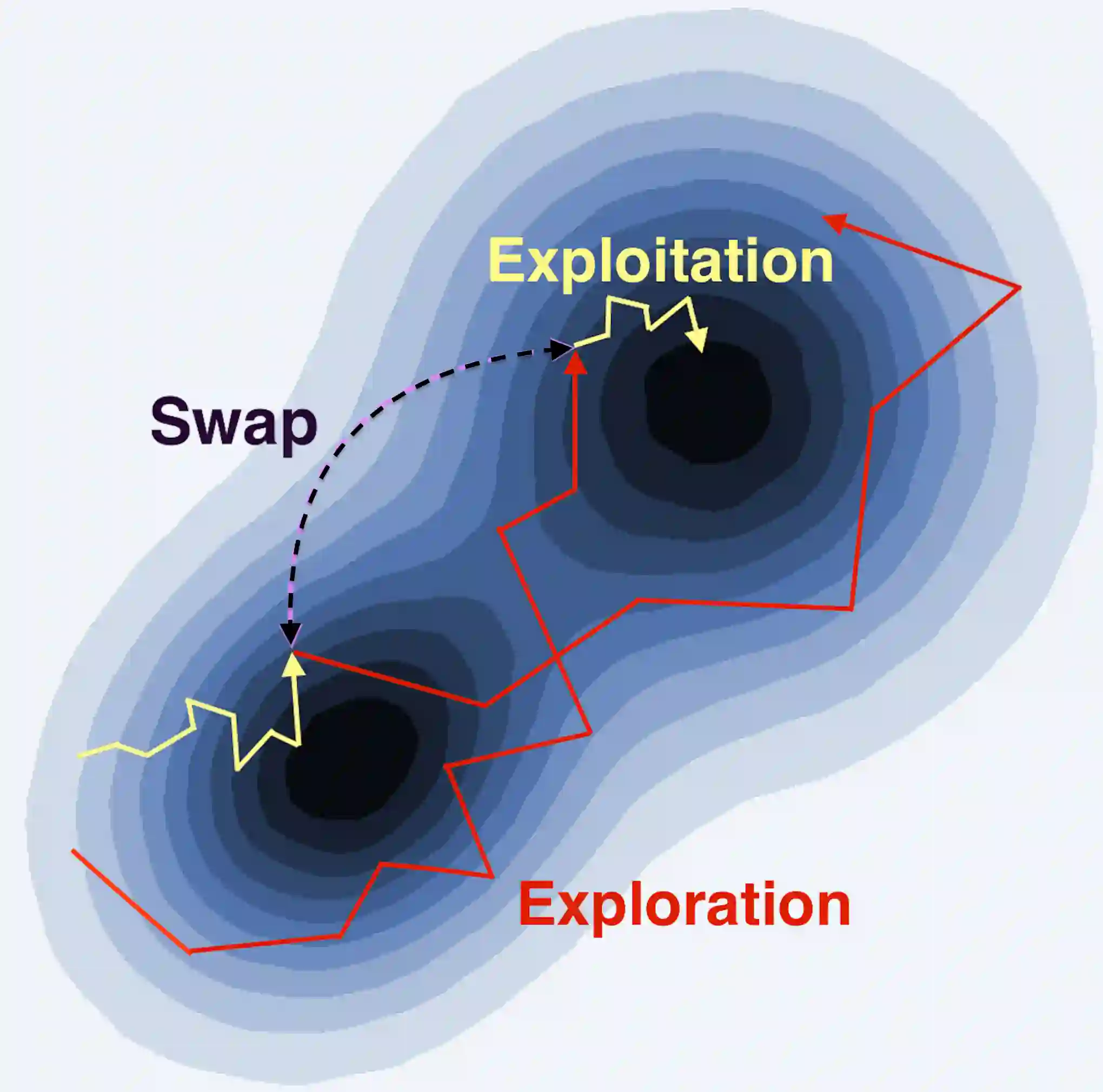

Replica exchange Monte Carlo (reMC), also known as parallel tempering, is an important technique for accelerating the convergence of conventional Markov Chain Monte Carlo (MCMC) algorithms. However, the method requires evaluating the energy function on the full dataset, so it does not scale to big data. A naïve implementation of reMC in mini-batch settings introduces large biases, so it cannot be directly extended to stochastic gradient MCMC (SGMCMC), the standard method for sampling from deep neural networks (DNNs). In this paper, we propose an adaptive replica exchange SGMCMC (reSGMCMC) that automatically corrects the bias, and we study its properties. The analysis implies an acceleration-accuracy trade-off in the numerical discretization of a Markov jump process in a stochastic environment. Empirically, we test the algorithm through extensive experiments on various setups and obtain state-of-the-art results on CIFAR10, CIFAR100, and SVHN in both supervised and semi-supervised learning tasks.
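The bias from naïve mini-batch swaps can be seen with a standard Gaussian argument. Take two replicas at temperatures $\tau_1 < \tau_2$ and set $c = 1/\tau_1 - 1/\tau_2$. The exact swap rate is $\exp(c\,\Delta)$, where $\Delta$ is the energy of the low-temperature replica minus that of the high-temperature one. If the mini-batch difference satisfies $\tilde{\Delta} \sim \mathcal{N}(\Delta, v)$, then $\mathbb{E}[e^{c\tilde{\Delta}}] = e^{c\Delta + c^2 v/2}$, so the naïve rate is inflated by $e^{c^2 v/2}$. The Python below is a minimal sketch of replica exchange Langevin dynamics that subtracts this term and tracks $v$ with a running average. It is an illustration under stated assumptions, not the authors' reSGMCMC implementation. The double-well energy, the Gaussian per-example noise, and every function name and parameter value (`minibatch_energy`, `corrected_swap_log_rate`, `replica_exchange_sgld`, `gamma`, and so on) are hypothetical.

```python
import math
import random


def energy(x: float) -> float:
    """Hypothetical non-convex (double-well) energy standing in for the full-data loss."""
    return (x * x - 1.0) ** 2


def grad_energy(x: float) -> float:
    """Exact gradient of the toy energy: d/dx (x^2 - 1)^2 = 4x(x^2 - 1)."""
    return 4.0 * x * (x * x - 1.0)


def minibatch_energy(x: float, per_example_std: float, batch_size: int, rng: random.Random):
    """Hypothetical mini-batch estimator: the mean of noisy per-example energies.

    Returns the estimate and the estimated variance of that mean
    (unbiased sample variance divided by the batch size).
    """
    draws = [energy(x) + rng.gauss(0.0, per_example_std) for _ in range(batch_size)]
    mean = sum(draws) / batch_size
    sample_var = sum((d - mean) ** 2 for d in draws) / (batch_size - 1)
    return mean, sample_var / batch_size


def corrected_swap_log_rate(e_low, e_high, tau_low, tau_high, var_diff):
    """Log swap rate with the Gaussian-noise bias term removed.

    c = 1/tau_low - 1/tau_high. If e_low - e_high ~ N(true difference, var_diff),
    then exp(c * (e_low - e_high)) overestimates the exact rate by a factor of
    exp(c**2 * var_diff / 2) on average, so that term is subtracted in log space.
    """
    c = 1.0 / tau_low - 1.0 / tau_high
    return c * (e_low - e_high) - 0.5 * c * c * var_diff


def replica_exchange_sgld(steps=10000, lr=1e-3, tau_low=0.2, tau_high=1.0,
                          per_example_std=0.5, batch_size=20, gamma=0.05, seed=0):
    """Two Langevin replicas with bias-corrected swaps; returns low-temperature samples."""
    rng = random.Random(seed)
    x = [1.0, -1.0]  # x[0]: low-temperature replica, x[1]: high-temperature replica
    taus = (tau_low, tau_high)
    var_hat = 0.0    # running estimate of Var(e_low - e_high); starts at 0 and warms up
    swaps = 0
    low_samples = []
    for _ in range(steps):
        for i in range(2):
            # SGLD step targeting exp(-energy / tau); the extra gradient noise
            # mimics mini-batch gradients (assumption).
            g = grad_energy(x[i]) + rng.gauss(0.0, 0.1)
            x[i] += -lr * g + math.sqrt(2.0 * lr * taus[i]) * rng.gauss(0.0, 1.0)
        e_low, v_low = minibatch_energy(x[0], per_example_std, batch_size, rng)
        e_high, v_high = minibatch_energy(x[1], per_example_std, batch_size, rng)
        # Stochastic-approximation update; the two estimators are independent, so variances add.
        var_hat = (1.0 - gamma) * var_hat + gamma * (v_low + v_high)
        log_s = corrected_swap_log_rate(e_low, e_high, tau_low, tau_high, var_hat)
        if rng.random() < math.exp(min(0.0, log_s)):
            x[0], x[1] = x[1], x[0]  # exchange positions between the two temperatures
            swaps += 1
        low_samples.append(x[0])
    return low_samples, swaps, var_hat


if __name__ == "__main__":
    samples, n_swaps, v = replica_exchange_sgld()
    print(f"swaps={n_swaps}, var_hat={v:.4f}, last low-temperature sample={samples[-1]:.3f}")
```

The subtracted term grows with both $c^2$ and the energy noise. In this sketch, noisier mini-batches or a wider temperature gap therefore lower the swap rate for a given energy gap, and that is the cost of removing the bias.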
