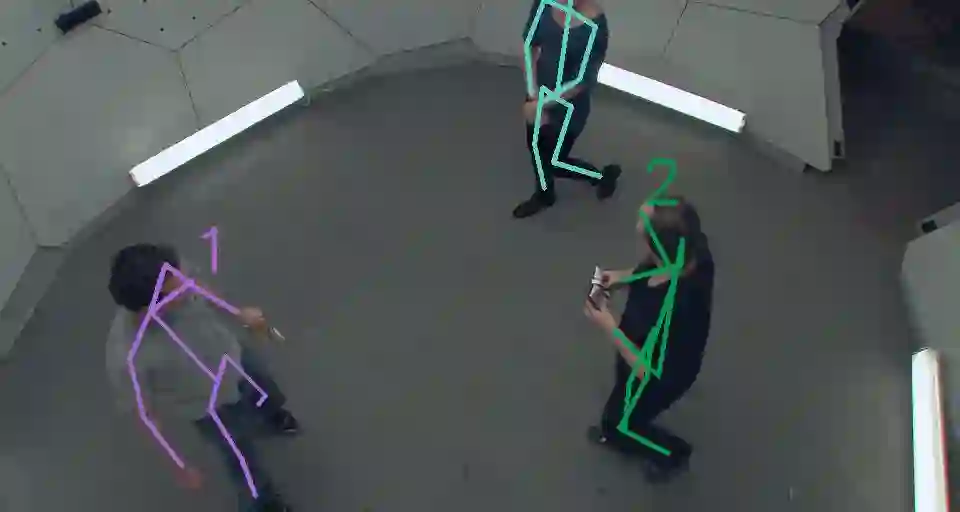

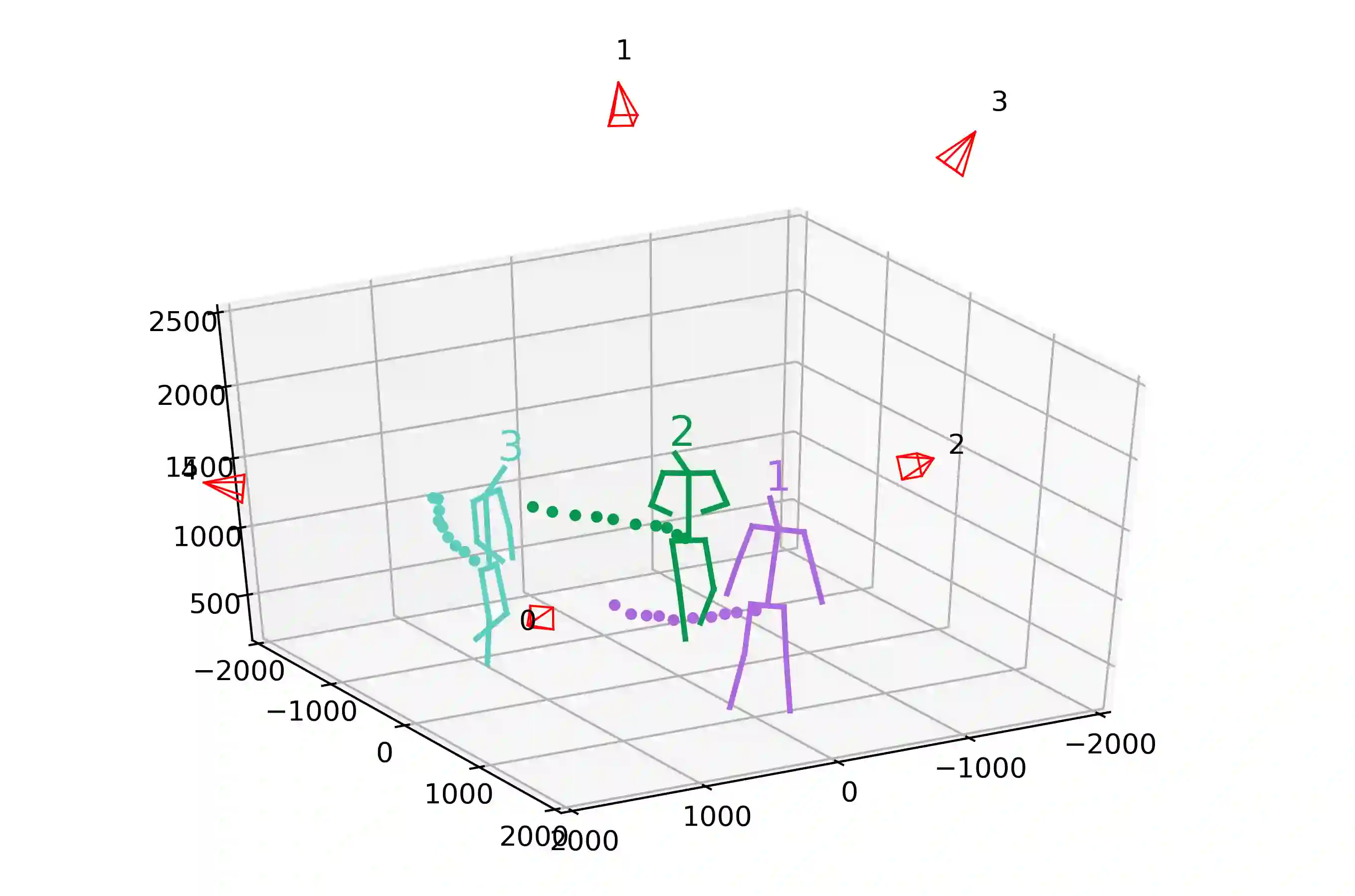

We present VoxelTrack for multi-person 3D pose estimation and tracking from a few cameras separated by wide baselines. It employs a multi-branch network to jointly estimate 3D poses and re-identification (Re-ID) features for all people in the environment. In contrast to previous efforts, which must establish cross-view correspondence from noisy 2D pose estimates, it directly estimates and tracks 3D poses from a 3D voxel-based representation constructed from multi-view images. We first discretize the 3D space into regular voxels and compute a feature vector for each voxel by averaging the body-joint heatmaps inversely projected from all views. We then estimate 3D poses from this voxel representation by predicting whether each voxel contains a particular body joint. Similarly, a Re-ID feature is computed for each voxel and used to track the estimated 3D poses over time. The main advantage of the approach is that it avoids making any hard decisions based on individual images. The approach can robustly estimate and track 3D poses even when people are severely occluded in some cameras. It outperforms state-of-the-art methods by a large margin on three public datasets: Shelf, Campus and CMU Panoptic.
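The voxel construction and joint readout described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: cameras are modeled as 3x4 pinhole projection matrices, each voxel samples the nearest heatmap pixel in every view (bilinear sampling would be a natural refinement), projections that fall outside an image or behind a camera contribute zero to the average, and a soft-argmax over voxels stands in for the readout from per-voxel joint scores. In the actual method a network processes the voxel features before those scores are produced; here the scores are simply taken as given. The helpers `voxel_grid`, `project`, `aggregate_heatmaps` and `soft_argmax_3d` are hypothetical names.

```python
import numpy as np


def voxel_grid(center, size, shape):
    """Return (N, 3) world coordinates of regularly spaced grid points."""
    axes = [np.linspace(c - s / 2, c + s / 2, n) for c, s, n in zip(center, size, shape)]
    xs, ys, zs = np.meshgrid(*axes, indexing="ij")
    return np.stack([xs.ravel(), ys.ravel(), zs.ravel()], axis=1)


def project(P, points):
    """Project (N, 3) world points with a 3x4 camera matrix P; returns pixel coords and depth."""
    homo = np.hstack([points, np.ones((len(points), 1))])
    uvw = homo @ P.T
    depth = uvw[:, 2]
    # Guard the division; points behind the camera are masked out by the caller.
    safe = np.where(depth > 0, depth, 1.0)
    return uvw[:, :2] / safe[:, None], depth


def aggregate_heatmaps(heatmaps, cameras, points):
    """Average per-view joint heatmaps at each voxel's projection (assumed scheme).

    heatmaps: list of (J, H, W) arrays, one per view.
    cameras:  list of 3x4 projection matrices, same order as heatmaps.
    Returns an (N, J) array of voxel features.
    """
    feats = np.zeros((len(points), heatmaps[0].shape[0]))
    for hm, P in zip(heatmaps, cameras):
        _, H, W = hm.shape
        uv, depth = project(P, points)
        u = np.round(uv[:, 0]).astype(int)  # nearest-pixel sampling
        v = np.round(uv[:, 1]).astype(int)
        ok = (depth > 0) & (u >= 0) & (u < W) & (v >= 0) & (v < H)
        # hm[:, v, u] has shape (J, K); transpose to (K, J).
        feats[ok] += hm[:, v[ok], u[ok]].T
    # Average over all views; projections outside a view count as zero.
    return feats / len(heatmaps)


def soft_argmax_3d(scores, points, beta=100.0):
    """Expected 3D position of each joint under a softmax over voxels.

    scores: (N, J) per-voxel joint scores; points: (N, 3). Returns (J, 3).
    """
    z = beta * (scores - scores.max(axis=0, keepdims=True))  # stabilize exp
    w = np.exp(z)
    w /= w.sum(axis=0, keepdims=True)
    return w.T @ points
```

Because no single view is thresholded before averaging, a joint that is occluded in one camera only lowers its voxel score instead of being discarded, which is one way to read the "no hard decisions based on individual images" argument above.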
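The Re-ID branch can likewise be illustrated with a small sketch of frame-to-frame association. The abstract does not specify how matching is performed, so everything here is an assumption: each person's embedding is taken as the mean of the per-voxel Re-ID features at that person's voxels, and current detections are greedily matched to existing tracks by cosine similarity above a hypothetical threshold `min_sim`. The functions `person_embedding`, `cosine_similarity` and `associate` are illustrative names, not part of any released code. A Hungarian assignment (for example `scipy.optimize.linear_sum_assignment`) would be a common alternative to the greedy loop.

```python
import numpy as np


def person_embedding(reid_volume, voxel_ids):
    """Mean of per-voxel Re-ID features (N, D) over one person's voxels (assumed pooling)."""
    return reid_volume[np.asarray(voxel_ids)].mean(axis=0)


def cosine_similarity(a, b):
    """Pairwise cosine similarity between rows of a (T, D) and b (M, D)."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


def associate(track_feats, det_feats, min_sim=0.5):
    """Greedily match current detections to existing tracks.

    Returns (track_index, detection_index) pairs. Detections left
    unmatched would start new tracks; unmatched tracks may be lost.
    """
    if len(track_feats) == 0 or len(det_feats) == 0:
        return []
    sim = cosine_similarity(np.asarray(track_feats, float), np.asarray(det_feats, float))
    matches, used_t, used_d = [], set(), set()
    # Visit all (track, detection) pairs from most to least similar.
    for flat in np.argsort(-sim, axis=None):
        t, d = (int(i) for i in np.unravel_index(flat, sim.shape))
        if sim[t, d] < min_sim:
            break  # every remaining pair is even less similar
        if t in used_t or d in used_d:
            continue
        matches.append((t, d))
        used_t.add(t)
        used_d.add(d)
    return matches
```

In use, one would compute `person_embedding` for every 3D pose estimated in the current frame, call `associate` against the embeddings of the active tracks, and then update each matched track's embedding, for instance with a running average (also an assumption).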

翻译:我们为多人 3D 提供VoxelTracack, 与由宽度基线分隔的几台照相机进行估计和跟踪。它使用多部门网络,共同估计环境中所有人3D 配置和再识别(Re-ID)特征。与以前根据噪音 2D 配置的估计数建立交叉视图通信的努力相比,它直接估计和跟踪3D 3D 配置,由多视图像构建的基于 3D voxel 的表达式组成。我们首先通过常规 voxel 将3D 空间分解开来,并计算每个 voxel 的特性矢量矢量,方法是通过平均从所有观点中逆向预测的身体联合热量图。我们估计3D 3D 配置来自 voxel 的表示式,方法是预测每个 voxel 是否包含一个特定的机构联合体。同样,它为每个用于跟踪估计 3D 配置基于多维x 图像的3D, 这种方法的主要优点是避免根据个人图像做出任何硬决定。这个方法可以稳健地估计和跟踪 3D 3D 姿势, 即使人们在大型的离心架上, 3 平层 。