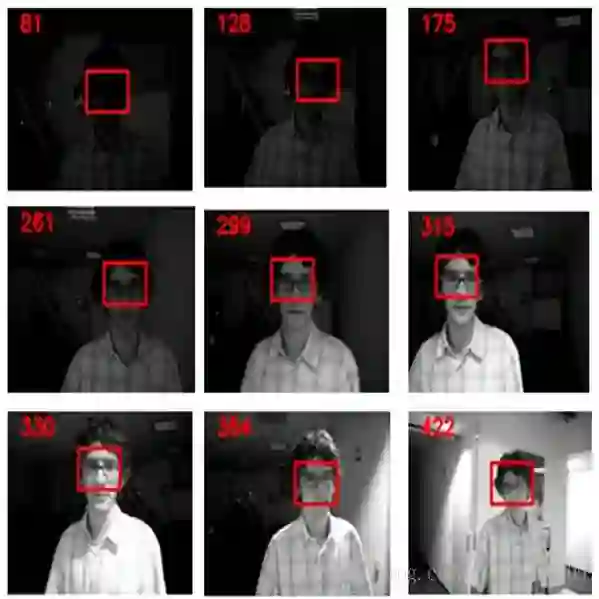

Visual object tracking (VOT) has been widely adopted in mission-critical applications, such as autonomous driving and intelligent surveillance systems. In current practice, third-party resources such as datasets, backbone networks, and training platforms are frequently used to train high-performance VOT models. Whilst these resources offer convenience, they also introduce new security threats into VOT models. In this paper, we reveal one such threat: an adversary can easily implant hidden backdoors into VOT models by tampering with the training process. Specifically, we propose a simple yet effective few-shot backdoor attack (FSBA) that alternately optimizes two losses: 1) a \emph{feature loss} defined in the hidden feature space, and 2) the standard \emph{tracking loss}. We show that, once FSBA has embedded the backdoor into the target model, it can trick the model into losing track of specific objects even when the \emph{trigger} appears in only one or a few frames. We evaluate our attack in both digital and physical-world settings and show that it can significantly degrade the performance of state-of-the-art VOT trackers. We also show that our attack is resistant to potential defenses, highlighting the vulnerability of VOT models to backdoor attacks.
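To make the idea of alternating between a feature loss and a tracking loss concrete, here is a minimal pure-Python toy. It is a sketch under stated assumptions, not the authors' implementation: the feature loss is assumed to be the negative mean squared distance between features of benign and trigger-stamped inputs, the "tracker" is a linear backbone with a fixed summing head, a squared-error regression stands in for the tracking loss, and the trigger is simulated by overwriting one input dimension. All function names (`stamp_trigger`, `feature_loss_grad`, `train_alternating`, and so on) are hypothetical.

```python
import random


def matvec(W, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def stamp_trigger(x, value=1.0):
    """Hypothetical trigger: overwrite the last input dimension (stand-in for pasting a patch)."""
    return x[:-1] + [value]


def feature_separation(W, xs):
    """Mean squared distance between features of benign and triggered inputs."""
    total = 0.0
    for x in xs:
        diff = matvec(W, [b - a for a, b in zip(x, stamp_trigger(x))])
        total += sum(d * d for d in diff)
    return total / len(xs)


def feature_loss_grad(W, xs):
    """Gradient of the ASSUMED feature loss L_f = -mean ||W x' - W x||^2 w.r.t. W."""
    grad = [[0.0] * len(W[0]) for _ in W]
    for x in xs:
        delta = [b - a for a, b in zip(x, stamp_trigger(x))]
        diff = matvec(W, delta)  # W x' - W x == W (x' - x) for a linear backbone
        for i in range(len(W)):
            for j in range(len(delta)):
                grad[i][j] -= 2.0 * diff[i] * delta[j] / len(xs)
    return grad


def tracking_loss_and_grad(W, xs, ys):
    """Squared-error stand-in for the tracking loss; the head is a fixed sum over features."""
    grad = [[0.0] * len(W[0]) for _ in W]
    loss = 0.0
    for x, y in zip(xs, ys):
        err = sum(matvec(W, x)) - y
        loss += err * err / len(xs)
        for i in range(len(W)):
            for j in range(len(x)):
                grad[i][j] += 2.0 * err * x[j] / len(xs)
    return loss, grad


def sgd_step(W, grad, lr):
    """In-place gradient-descent update."""
    for row, g_row in zip(W, grad):
        for j in range(len(row)):
            row[j] -= lr * g_row[j]


def train_alternating(epochs=300, feat_lr=0.005, track_lr=0.05, seed=0):
    rng = random.Random(seed)
    # Benign inputs never contain the trigger, so their last dimension is 0.
    xs = [[rng.uniform(-1, 1), rng.uniform(-1, 1), 0.0] for _ in range(32)]
    ys = [x[0] + 2.0 * x[1] for x in xs]  # toy regression target for the "box"
    W = [[0.1, 0.0, 0.2], [0.0, 0.1, -0.1]]  # linear toy backbone: 3 inputs -> 2 features
    sep_before = feature_separation(W, xs)
    for _ in range(epochs):
        sgd_step(W, feature_loss_grad(W, xs), feat_lr)  # step 1: feature loss on triggered copies
        _, grad = tracking_loss_and_grad(W, xs, ys)     # step 2: tracking loss on benign data
        sgd_step(W, grad, track_lr)
    track_loss, _ = tracking_loss_and_grad(W, xs, ys)
    return sep_before, feature_separation(W, xs), track_loss


before, after, loss = train_alternating()
print(f"feature separation {before:.3f} -> {after:.3f}, benign tracking loss {loss:.2e}")
```

In this toy, benign inputs never activate the trigger dimension, so the two updates touch disjoint weights: the feature step amplifies the backbone's response to the trigger while the tracking step keeps fitting benign data. That captures the intuition of preserving benign accuracy while planting the backdoor; in a real Siamese tracker the weights are shared, so the two losses interact far more than they do here.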

翻译:视觉物体跟踪(VOT)在任务关键应用中被广泛采用,例如自主驾驶和智能监测系统。在目前的做法中,第三方资源,如数据集、主干网络和培训平台,经常用于培训高性能VOT模型。虽然这些资源带来一定的便利,但也给VOT模型带来新的安全威胁。在本文中,我们揭示出这样的威胁,即对手可以很容易地通过与培训过程相调和的方式将隐藏的后门嵌入VOT模型中。具体地说,我们提出一种简单而有效的后门小投射式攻击(FSBA),以其他方式优化两种损失:1)在隐藏的地物空间中定义的\emph{地形损失}和2)标准的\emph{轨迹损失模式。我们显示,一旦我们的FOSBA将后门嵌入目标模型,它就可以欺骗特定物体的跟踪,即使每只出现在一个或几个框架之内。我们既在数字又物理世界环境中进行的攻击(FSBA),并显示它能够显著地降低我们攻击后方防御性攻击的可能性。