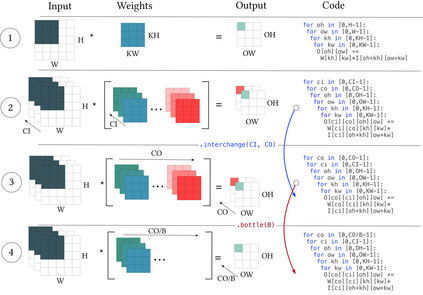

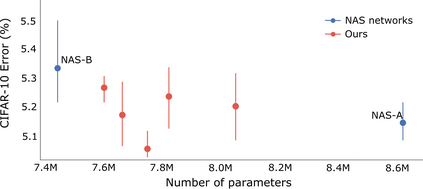

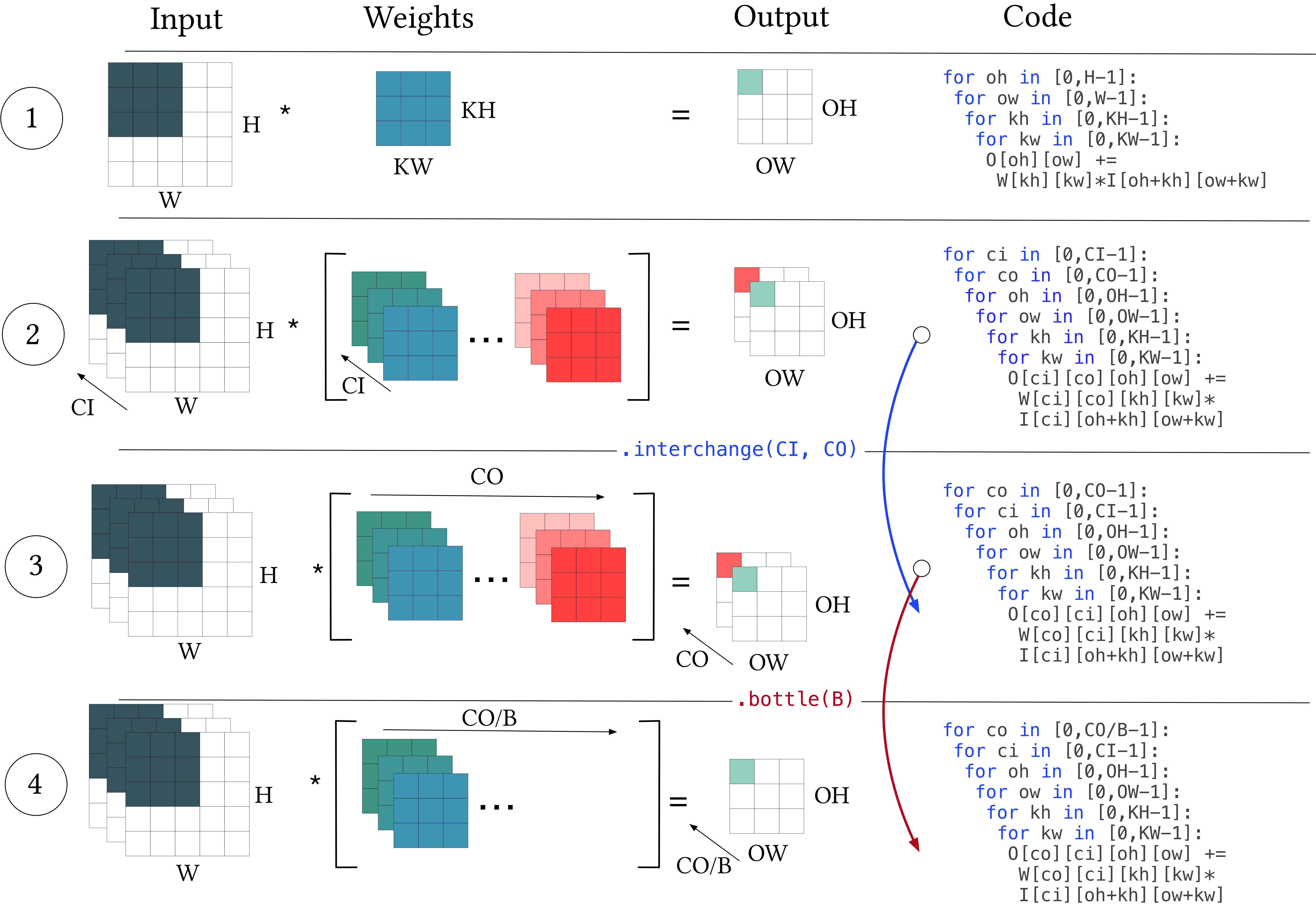

Improving the performance of deep neural networks (DNNs) is important to both the compiler and neural architecture search (NAS) communities. Compilers apply program transformations to exploit hardware parallelism and the memory hierarchy. However, legality concerns mean they fail to exploit the natural robustness of neural networks. In contrast, NAS techniques mutate networks through operations such as the grouping or bottlenecking of convolutions, exploiting the resilience of DNNs. In this work, we express such neural architecture operations as program transformations whose legality depends on a notion of representational capacity. This allows them to be combined with existing transformations into a unified optimization framework. This unification allows us to express existing NAS operations as combinations of simpler transformations. Crucially, it allows us to generate and explore new tensor convolutions. We prototyped the combined framework in TVM and found optimizations across different DNNs that significantly reduce inference time, by over 3$\times$ in the majority of cases. Furthermore, our scheme dramatically reduces NAS search time. Code is available at https://github.com/jack-willturner/nas-as-program-transformation-exploration.
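To make the grouping and bottlenecking operations mentioned above concrete, the following minimal Python sketch counts the parameters and multiply-accumulates (MACs) of a 2D convolution before and after each operation. It is an illustration only, not the framework's implementation: the helper name `conv_cost` and its arguments are hypothetical, and bottlenecking is assumed here to mean dividing the number of output channels by a fixed factor.

```python
def conv_cost(c_in, c_out, k, h_out, w_out, groups=1, bottleneck=1):
    """Return (parameters, MACs) for a k x k 2D convolution without bias.

    groups:     number of independent channel slices (grouping).
    bottleneck: factor by which the output channels are divided
                (an assumed, simplified reading of bottlenecking).
    """
    if c_out % bottleneck:
        raise ValueError("c_out must be divisible by bottleneck")
    c_out_eff = c_out // bottleneck
    if c_in % groups or c_out_eff % groups:
        raise ValueError("channel counts must be divisible by groups")
    # Each output channel only reads the c_in / groups input channels
    # that belong to its own group.
    params = c_out_eff * (c_in // groups) * k * k
    # Every weight is applied once per output pixel.
    macs = params * h_out * w_out
    return params, macs


standard = conv_cost(64, 64, 3, 32, 32)
grouped = conv_cost(64, 64, 3, 32, 32, groups=4)
bottlenecked = conv_cost(64, 64, 3, 32, 32, bottleneck=2)

print("standard:    ", standard)
print("grouped:     ", grouped)
print("bottlenecked:", bottlenecked)
```

Both operations shrink the iteration space of the convolution's loop nest, which is why they can be viewed as program transformations. Unlike classical compiler transformations, they change the computed function, so their legality has to be judged by a criterion such as representational capacity rather than by exact semantic equivalence.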
