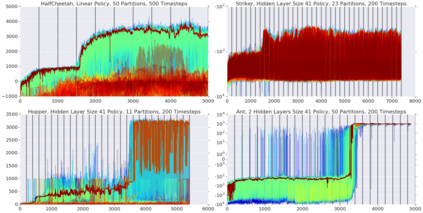

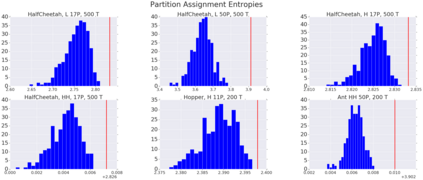

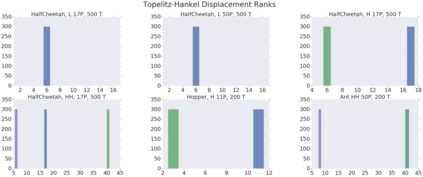

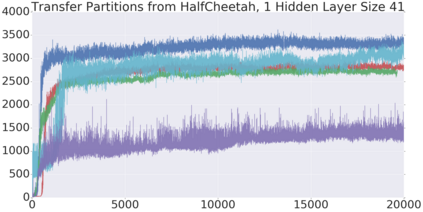

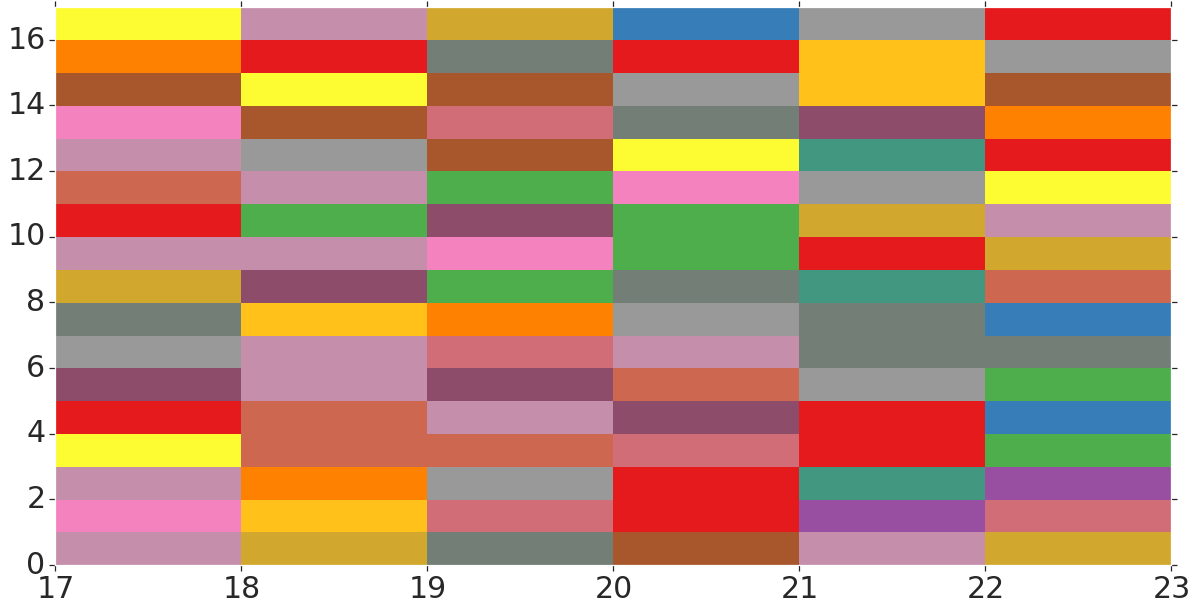

We present a neural architecture search (NAS) algorithm for constructing compact reinforcement learning (RL) policies, combining ENAS (Efficient Neural Architecture Search) and ES (Evolution Strategies) in a highly scalable and intuitive way. We define the combinatorial search space of NAS as the set of different partitionings (colorings) of a network's edges into same-weight classes, so that compact architectures are represented by efficiently learned edge-partitionings. On several RL tasks, we learn colorings that translate into effective policies parameterized by as few as $17$ weight parameters, providing >90% compression over vanilla policies and 6x compression over state-of-the-art compact policies based on Toeplitz matrices, while still maintaining good reward. We believe our work is one of the first attempts to propose a rigorous approach to training structured neural network architectures for RL problems, which are of particular interest in mobile robotics, where storage and computational resources are limited.
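To make the edge-coloring parameterization concrete, here is a minimal pure-Python sketch, assuming a feed-forward tanh policy without biases. Every edge of a layer is assigned a color, all edges of the same color share one weight, and so only one scalar per color is stored and optimized. The coloring here is sampled at random rather than learned by an ENAS controller, and the names `ColoredPolicy`, `expand`, `es_step`, the layer sizes, `num_colors` and the quadratic toy objective are all hypothetical illustrations, not the paper's configuration or code. The ES step is the standard antithetic estimator, applied to the per-color weight vector only.

```python
import math
import random
from typing import Callable, List

Matrix = List[List[float]]


def random_coloring(rows: int, cols: int, num_colors: int, rng: random.Random) -> List[List[int]]:
    """Assign each edge (i, j) of a rows x cols layer one of num_colors colors.

    Assumption: sampled uniformly at random for illustration; in the described
    method the coloring would instead be proposed by a learned NAS controller.
    """
    return [[rng.randrange(num_colors) for _ in range(cols)] for _ in range(rows)]


def expand(coloring: List[List[int]], shared: List[float]) -> Matrix:
    """Materialize the dense matrix: every edge takes the weight of its color class."""
    return [[shared[c] for c in row] for row in coloring]


class ColoredPolicy:
    """Hypothetical tanh policy whose layers share weights within color classes (no biases)."""

    def __init__(self, layer_sizes: List[int], num_colors: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        pairs = list(zip(layer_sizes[:-1], layer_sizes[1:]))
        # One (out x in) coloring per layer, and one shared weight per color per layer.
        self.colorings = [random_coloring(out, inp, num_colors, rng) for inp, out in pairs]
        self.shared = [[rng.gauss(0.0, 0.5) for _ in range(num_colors)] for _ in pairs]
        self.dense_size = sum(inp * out for inp, out in pairs)

    def num_params(self) -> int:
        # Only the per-color weights are stored and trained.
        return sum(len(s) for s in self.shared)

    def act(self, obs: List[float]) -> List[float]:
        h = obs
        for coloring, shared in zip(self.colorings, self.shared):
            w = expand(coloring, shared)
            h = [math.tanh(sum(wij * hj for wij, hj in zip(row, h))) for row in w]
        return h


def es_step(f: Callable[[List[float]], float], theta: List[float], sigma: float,
            lr: float, num_pairs: int, rng: random.Random) -> List[float]:
    """One vanilla antithetic Evolution Strategies ascent step on f."""
    grad = [0.0] * len(theta)
    for _ in range(num_pairs):
        eps = [rng.gauss(0.0, 1.0) for _ in theta]
        plus = f([t + sigma * e for t, e in zip(theta, eps)])
        minus = f([t - sigma * e for t, e in zip(theta, eps)])
        scale = (plus - minus) / (2.0 * sigma * num_pairs)
        grad = [g + scale * e for g, e in zip(grad, eps)]
    return [t + lr * g for t, g in zip(theta, grad)]


policy = ColoredPolicy([8, 32, 32, 2], num_colors=6)
print(policy.num_params(), policy.dense_size)  # 18 1344
print(len(policy.act([0.1] * 8)))  # 2


# Toy objective standing in for an RL return (assumption): pull every shared weight toward 1.0.
def toy_reward(theta: List[float]) -> float:
    return -sum((t - 1.0) ** 2 for t in theta)


theta = [w for layer in policy.shared for w in layer]
start_dist = math.sqrt(-toy_reward(theta))
rng = random.Random(1)
for _ in range(200):
    theta = es_step(toy_reward, theta, sigma=0.1, lr=0.05, num_pairs=20, rng=rng)
final_dist = math.sqrt(-toy_reward(theta))
print(start_dist, final_dist)
```

Because ES only perturbs the per-color vector, the search dimensionality (18 here, versus 1344 dense weights) depends on the number of colors rather than on layer width; the dense matrices are materialized only for the forward pass.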

翻译:我们提出了一个神经结构搜索算法来构建紧凑强化学习(RL)政策,将ENAS和ES结合成高度可伸缩和直观的方法。通过将NAS的组合搜索空间定义为不同边缘分布(彩色)的一组相同重量级,我们通过高效的学习边缘分割来代表紧凑结构。对于一些RL任务,我们设法学会了将彩色转化为有效的政策参数,其重量参数只有17美元,根据托普利茨矩阵,为香草政策提供大于90%的压缩,对最新紧凑政策进行6x压缩,同时仍然保持良好的奖励。 我们认为,我们的工作是首次尝试提出严格的方法来培训结构型神经网络结构结构,解决RL问题,特别是储存和计算资源有限的流动机器人的问题。