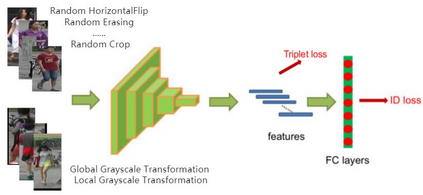

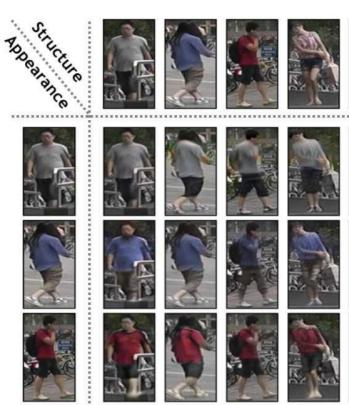

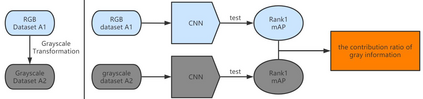

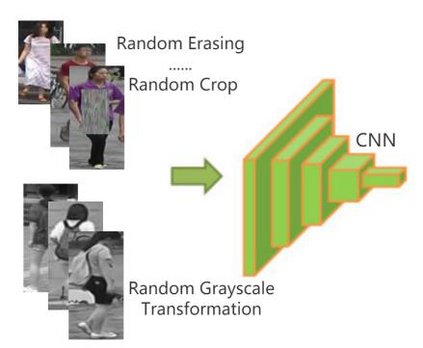

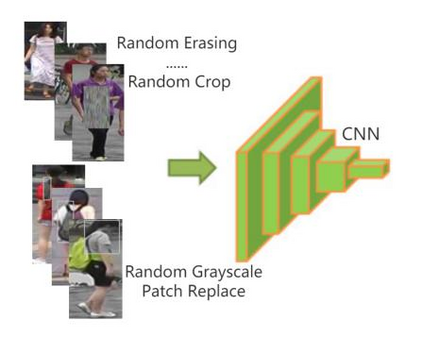

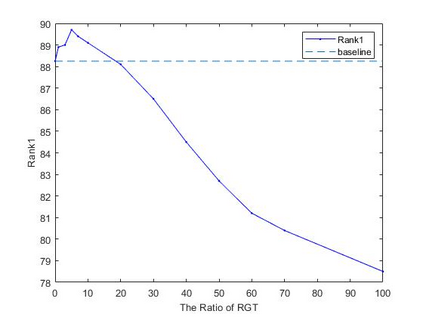

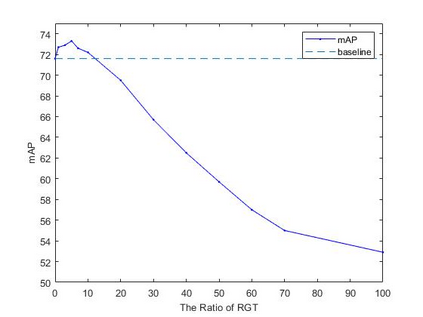

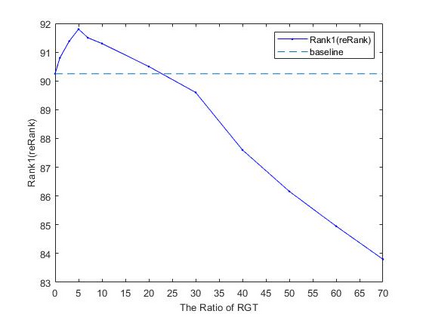

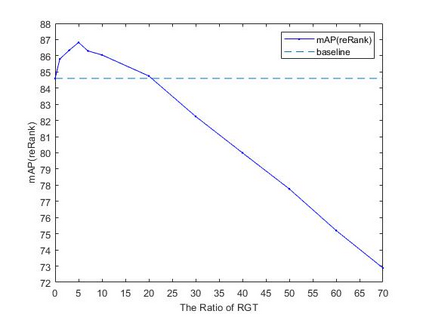

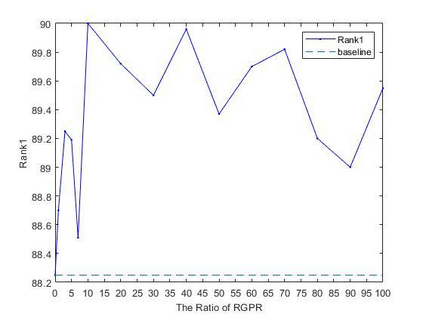

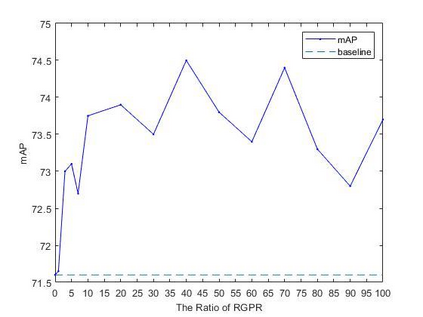

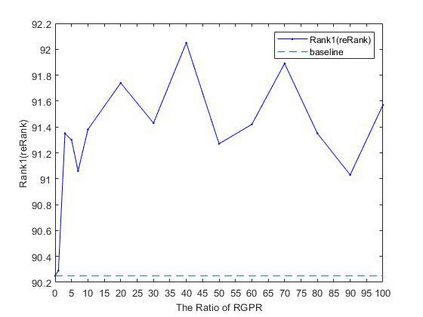

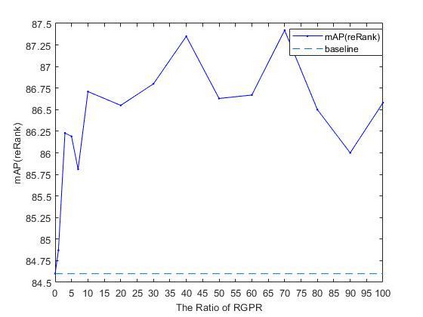

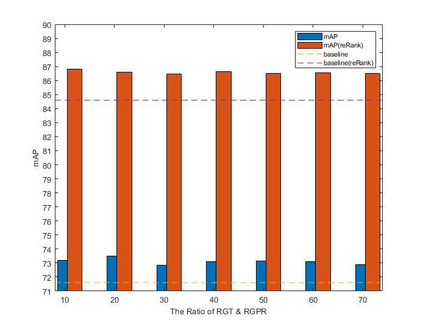

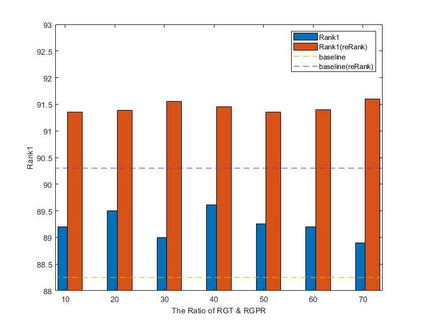

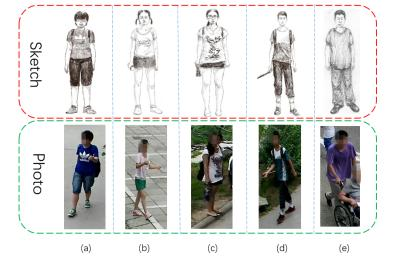

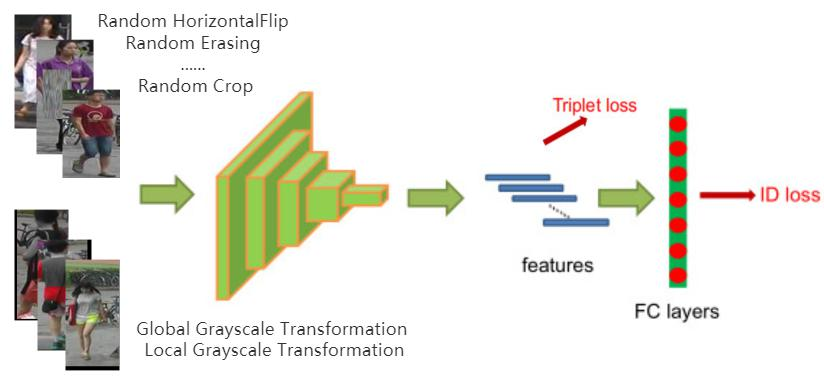

This paper proposes a general multi-modal data learning method comprising a Global Homogeneous Transformation, a Local Homogeneous Transformation, and their combination. During ReID model training, the local transformation randomly selects a rectangular area in the RGB image and replaces its colors with those of the same rectangular area in the corresponding homogeneous image, yielding a training image that mixes RGB and homogeneous regions; the global transformation converts the whole image into its homogeneous counterpart. Both transformations help the model directly learn the relationship between different modalities in special ReID tasks. In single-modal ReID tasks, the method serves as an effective data augmentation. Experimental results show that our method improves performance by up to 3.3% on single-modal ReID tasks and by more than 8% on sketch re-identification. In addition, our experiments show that the method is also useful in adversarial training for adversarial defense, where it helps the model learn faster and better from adversarial examples.
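The two transformations described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The abstract does not say how a homogeneous image is produced, so the hypothetical `to_homogeneous` helper uses grayscale luminance replicated to three channels as a stand-in. The rectangle-sampling ranges (`area_range`, `aspect_range`) and the way the hypothetical `homogeneous_augment` combines the two transforms through the probabilities `p_global` and `p_local` are also illustrative assumptions. Images are nested lists of `(R, G, B)` tuples so the sketch needs no third-party packages.

```python
import math
import random
from typing import Callable, List, Optional, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # H rows of W pixels


def to_homogeneous(img: Image) -> Image:
    """HYPOTHETICAL stand-in: grayscale luminance copied to all 3 channels.
    The abstract does not specify how the homogeneous image is built."""
    out = []
    for row in img:
        new_row = []
        for r, g, b in row:
            y = int(round(0.299 * r + 0.587 * g + 0.114 * b))
            new_row.append((y, y, y))
        out.append(new_row)
    return out


def global_homogeneous(img: Image,
                       transform: Callable[[Image], Image] = to_homogeneous) -> Image:
    """Global transformation: replace the whole image by its homogeneous version."""
    return transform(img)


def local_homogeneous(img: Image,
                      transform: Callable[[Image], Image] = to_homogeneous,
                      rng: Optional[random.Random] = None,
                      area_range: Tuple[float, float] = (0.02, 0.4),
                      aspect_range: Tuple[float, float] = (0.3, 3.3),
                      max_tries: int = 100) -> Image:
    """Local transformation: copy one random rectangle from the homogeneous
    image into a copy of the RGB image. The sampling ranges are assumptions."""
    if rng is None:
        rng = random.Random()
    h, w = len(img), len(img[0])
    homo = transform(img)
    for _ in range(max_tries):
        area = rng.uniform(*area_range) * h * w   # target rectangle area in pixels
        aspect = rng.uniform(*aspect_range)       # height / width ratio
        rh = int(round(math.sqrt(area * aspect)))
        rw = int(round(math.sqrt(area / aspect)))
        if 0 < rh <= h and 0 < rw <= w:
            top = rng.randint(0, h - rh)
            left = rng.randint(0, w - rw)
            out = [list(row) for row in img]      # copy rows so the input is untouched
            for i in range(top, top + rh):
                out[i][left:left + rw] = homo[i][left:left + rw]
            return out
    return [list(row) for row in img]             # no rectangle fit: return a copy


def homogeneous_augment(img: Image, p_global: float = 0.5, p_local: float = 0.5,
                        rng: Optional[random.Random] = None,
                        transform: Callable[[Image], Image] = to_homogeneous) -> Image:
    """One ASSUMED way to combine the two: try global first, then local."""
    if rng is None:
        rng = random.Random()
    if rng.random() < p_global:
        return global_homogeneous(img, transform)
    if rng.random() < p_local:
        return local_homogeneous(img, transform, rng)
    return [list(row) for row in img]
```

Because `transform` is a parameter, the grayscale stand-in can be swapped for whatever homogeneous conversion a given task uses. The rectangle sampling follows the same pattern as Random Erasing (sample an area fraction and an aspect ratio, then a position), but fills the region from the homogeneous image instead of with random values.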
