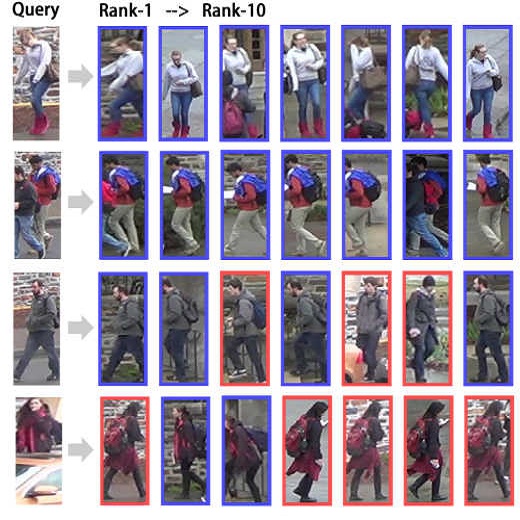

Person identification in the wild is very challenging because of large variations in pose, face quality, clothing, makeup, and other factors. Traditional research, such as face recognition, person re-identification, and speaker recognition, often focuses on a single modality, which is inadequate for handling all the situations that arise in practice. Multi-modal person identification is a more promising approach, in which face, head, body, and audio features, among others, are used jointly. In this paper, we introduce iQIYI-VID, the largest video dataset for multi-modal person identification. It is composed of 600K video clips of 5,000 celebrities. These video clips are extracted from 400K hours of online videos of various types, ranging from movies, variety shows, and TV series to news broadcasts. All video clips pass through a careful human annotation process, and the label error rate is lower than 0.2%. We evaluated state-of-the-art models for face recognition, person re-identification, and speaker recognition on the iQIYI-VID dataset. Experimental results show that these models are still far from perfect for the task of person identification in the wild. We further demonstrate that a simple fusion of multi-modal features can improve person identification considerably. We have released the dataset online to promote multi-modal person identification research.
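The abstract does not say how the multi-modal features are fused, so the following is only an illustrative sketch of one common baseline, not the authors' method. It assumes each modality already provides a fixed-length embedding, L2-normalizes each one, concatenates them, and matches a query to a gallery by cosine similarity. The modality names, the function names (`l2_normalize`, `fuse`, `identify`), and the toy vectors are all hypothetical.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical modality order; real systems might also use head or body features.
ORDER = ("face", "audio")


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm; an all-zero vector is returned as zeros."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0.0:
        return [0.0 for _ in vec]
    return [v / norm for v in vec]


def fuse(features: Dict[str, Sequence[float]], order: Sequence[str] = ORDER) -> List[float]:
    """Concatenate per-modality embeddings after normalizing each one.

    Normalizing first keeps one modality from dominating only because
    its raw embedding has a larger magnitude (an assumption of this sketch).
    """
    fused: List[float] = []
    for name in order:
        fused.extend(l2_normalize(features[name]))
    return l2_normalize(fused)


def identify(query: Dict[str, Sequence[float]],
             gallery: Dict[str, Dict[str, Sequence[float]]],
             order: Sequence[str] = ORDER) -> str:
    """Return the gallery identity whose fused vector is most similar to the query."""
    q = fuse(query, order)
    best_id, best_score = "", -math.inf
    for person_id, feats in gallery.items():
        g = fuse(feats, order)
        # Both vectors have unit norm, so the dot product is the cosine similarity.
        score = sum(a * b for a, b in zip(q, g))
        if score > best_score:
            best_id, best_score = person_id, score
    return best_id


# Toy data, made up for illustration only.
gallery = {
    "person_a": {"face": [1.0, 0.0], "audio": [1.0, 0.0, 0.0]},
    "person_b": {"face": [0.0, 1.0], "audio": [0.0, 1.0, 0.0]},
}
query = {"face": [0.9, 0.1], "audio": [0.7, 0.3, 0.0]}
predicted = identify(query, gallery)
```

Concatenation is only one possible choice; averaging per-modality similarity scores is another simple alternative, and the sketch makes no claim about which one the paper evaluates.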
