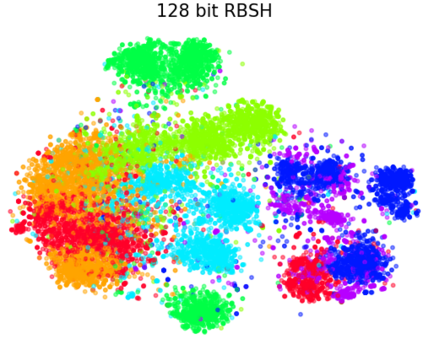

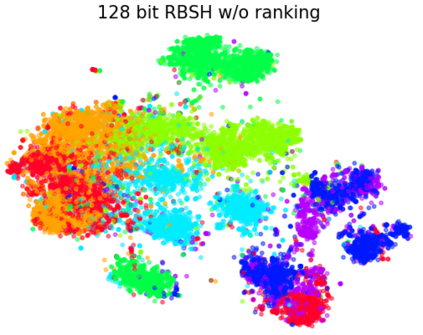

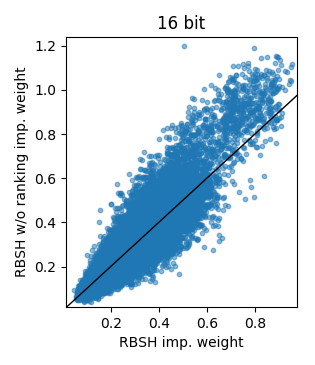

Fast similarity search is a key component in large-scale information retrieval, where semantic hashing has become a popular strategy for representing documents as binary hash codes. Recent advances in this area have come from neural-network-based models: generative models trained by learning to reconstruct the original documents. We present a novel unsupervised generative semantic hashing approach, *Ranking based Semantic Hashing* (RBSH), that consists of both a variational and a ranking-based component. Similar to variational autoencoders, the variational component is trained to reconstruct the original document conditioned on its generated hash code; as in prior work, it only considers documents individually. The ranking component addresses this limitation by incorporating inter-document similarity into hash code generation, modelling document ranking through a hinge loss. To avoid the need for labelled data when computing the hinge loss, we use a weak labeller, which keeps the approach fully unsupervised. Extensive experimental evaluation on four publicly available datasets, against traditional baselines and recent state-of-the-art semantic hashing methods, shows that RBSH significantly outperforms all other methods across all evaluated hash code lengths. In fact, RBSH hash codes perform similarly to state-of-the-art hash codes while using 2-4x fewer bits.
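The abstract combines two ideas: similarity search over binary hash codes, and a ranking component trained with a hinge loss whose preferences come from a weak labeller instead of human labels. The sketch below illustrates both under stated assumptions; it is not the RBSH implementation. The function names (`hamming`, `search`, `binarize`, `weak_score`, `ranking_hinge_loss`), the 0.5 threshold, the term-overlap labeller and the margin value are all hypothetical. The loss uses squared Euclidean distance on relaxed (real-valued) codes, which equals Hamming distance when the codes are exactly 0/1, so it stays usable as a training signal.

```python
from typing import List, Sequence


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hash codes packed into ints."""
    return bin(a ^ b).count("1")


def search(query: int, codes: Sequence[int], k: int) -> List[int]:
    """Indices of the k codes closest to `query` in Hamming distance (ties by index)."""
    return sorted(range(len(codes)), key=lambda i: (hamming(query, codes[i]), i))[:k]


def binarize(probs: Sequence[float]) -> int:
    """Hypothetical: threshold relaxed bit probabilities at 0.5 and pack them into an int."""
    code = 0
    for p in probs:
        code = (code << 1) | (1 if p >= 0.5 else 0)
    return code


def weak_score(query_terms: Sequence[str], doc_terms: Sequence[str]) -> int:
    """Hypothetical weak labeller: count shared terms (a stand-in for e.g. BM25)."""
    return len(set(query_terms) & set(doc_terms))


def squared_dist(x: Sequence[float], y: Sequence[float]) -> float:
    """Squared Euclidean distance; equals Hamming distance for 0/1 vectors."""
    return sum((xi - yi) ** 2 for xi, yi in zip(x, y))


def ranking_hinge_loss(z_q, z_1, z_2, weak_1, weak_2, margin: float = 1.0) -> float:
    """Pairwise hinge loss (assumed form): if the weak labeller prefers doc 1 over doc 2
    for the query, z_q should be closer to z_1 than to z_2 by at least `margin`,
    and vice versa. Pairs the labeller cannot order contribute no loss."""
    if weak_1 == weak_2:
        return 0.0
    sign = 1.0 if weak_1 > weak_2 else -1.0
    d1 = squared_dist(z_q, z_1)
    d2 = squared_dist(z_q, z_2)
    return max(0.0, margin - sign * (d2 - d1))


if __name__ == "__main__":
    codes = [0b1111, 0b1010, 0b1000, 0b0001]
    print(search(0b1011, codes, k=2))            # nearest codes by Hamming distance
    print(binarize([0.9, 0.1, 0.7, 0.2]))        # packed 4-bit code
    print(ranking_hinge_loss([1, 0, 1, 0], [1, 0, 1, 1], [0, 1, 0, 1], 3, 0))
```

In a full model the relaxed codes would come from the encoder of the variational component, and one would presumably add this ranking loss to the reconstruction objective; how the two terms are weighted, and which weak labeller is used, is left open here.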